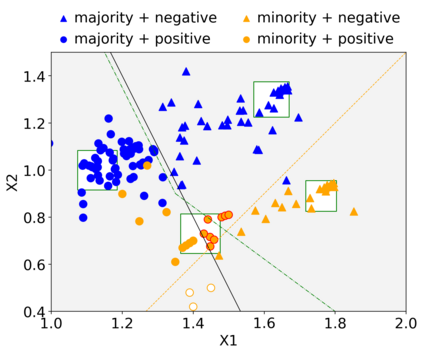

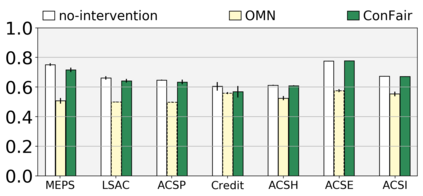

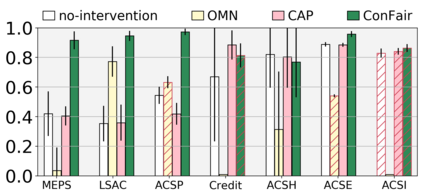

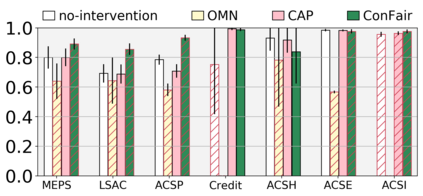

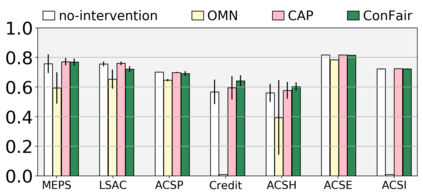

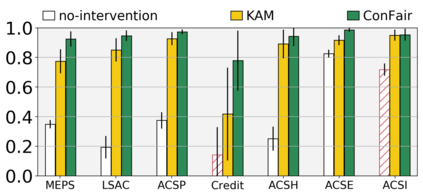

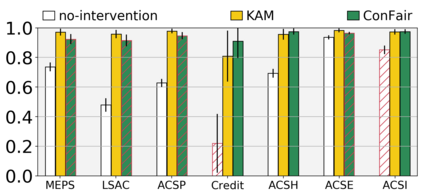

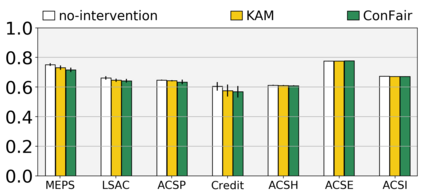

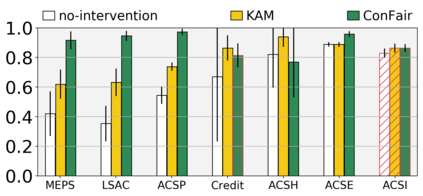

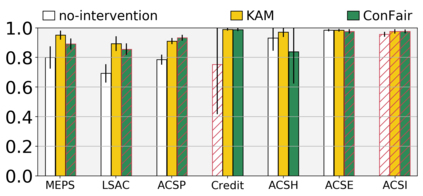

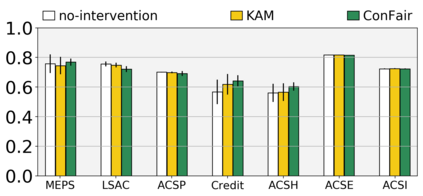

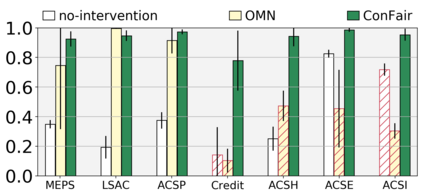

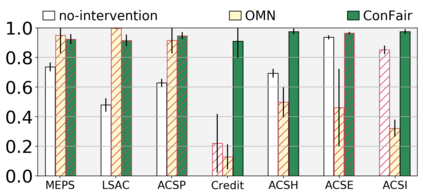

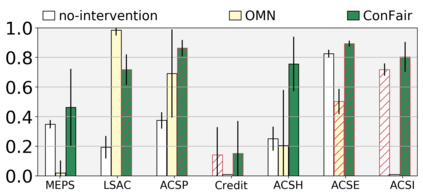

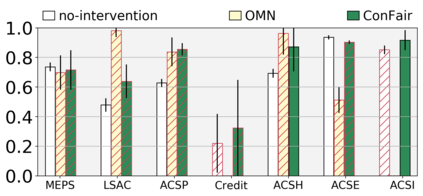

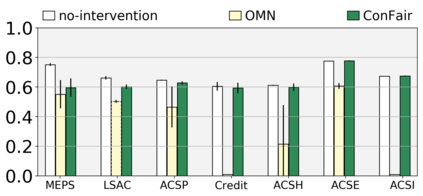

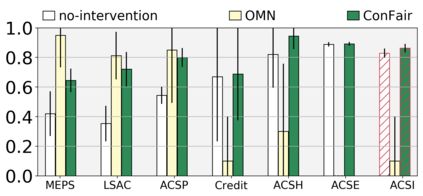

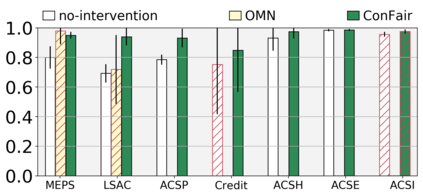

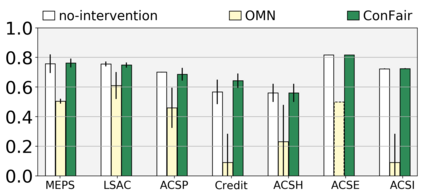

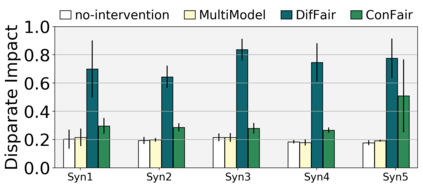

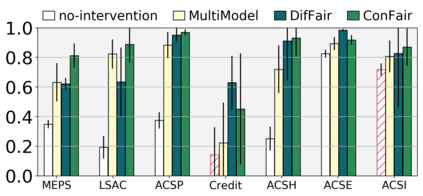

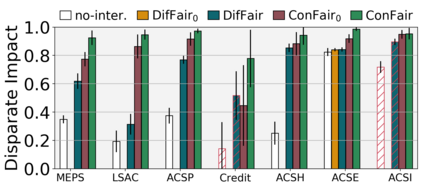

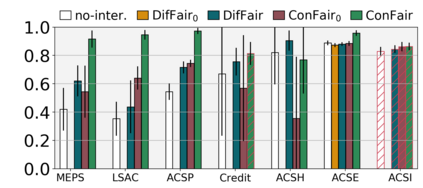

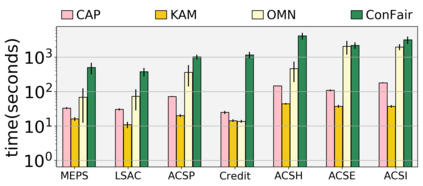

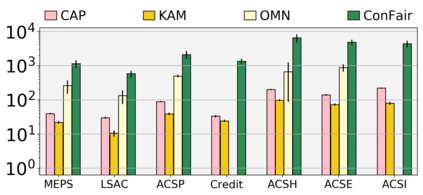

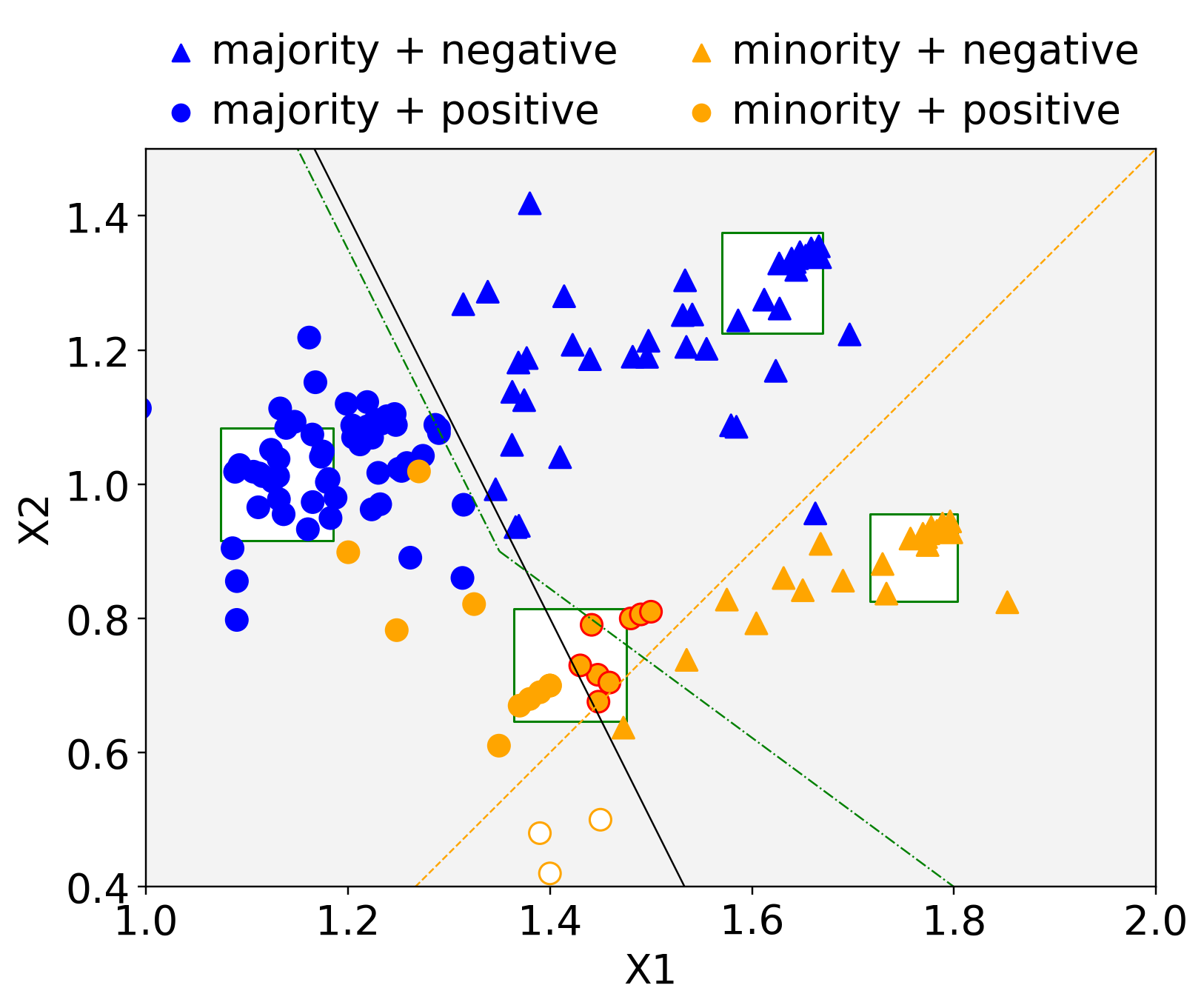

Machine Learning (ML) models drive many modern data systems. While they are undeniably powerful tools, ML models often demonstrate imbalanced performance and unfair behaviors. The root of this problem often lies in the fact that different subpopulations commonly display divergent trends: as a learning algorithm tries to identify trends in the data, it naturally favors the trends of the majority groups, leading to a model that performs poorly and unfairly for minority populations. Our goal is to improve the fairness and trustworthiness of ML models by applying only non-invasive interventions, i.e., without altering the data or the learning algorithm. We use a simple but key insight: the divergence of trends between different populations, and, consequently, between a learned model and minority populations, is analogous to data drift, which indicates poor conformance between parts of the data and the trained model. We explore two strategies to resolve this drift, model-splitting (DifFair) and reweighing (ConFair), aiming to improve the overall conformance of models to the underlying data. Both methods introduce novel ways to employ the recently proposed data profiling primitive of Conformance Constraints. Our experimental evaluation over seven real-world datasets shows that both DifFair and ConFair improve the fairness of ML models.
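To make the drift analogy concrete, the following minimal sketch profiles each group with a very simplified stand-in for a conformance constraint (a per-attribute interval of mean ± k standard deviations) and measures how far tuples fall outside a profile. It is not the paper's implementation: the function names `learn_bounds`, `violation`, and `route`, the threshold `k`, and the toy data are all assumptions made for illustration. The `route` helper hints at how a model-splitting approach could pick the group-specific model whose profile a tuple conforms to best.

```python
from statistics import mean, pstdev

def learn_bounds(rows, k=2.0):
    """Learn one interval per attribute: mean ± k * std.
    A simplified, hypothetical stand-in for a conformance constraint."""
    cols = list(zip(*rows))
    return [(mean(c) - k * pstdev(c), mean(c) + k * pstdev(c)) for c in cols]

def violation(row, bounds):
    """Average normalized distance by which a row falls outside the
    bounds; 0.0 means the row conforms to the profile."""
    total = 0.0
    for x, (lo, hi) in zip(row, bounds):
        width = (hi - lo) or 1.0  # avoid division by zero for constant columns
        if x < lo:
            total += (lo - x) / width
        elif x > hi:
            total += (x - hi) / width
    return total / len(bounds)

# Toy numeric data (assumed): two groups with divergent trends.
majority = [(1.0, 10.0), (1.2, 11.0), (0.9, 9.5), (1.1, 10.5)]
minority = [(3.0, 4.0), (3.2, 4.5), (2.9, 3.8)]

profiles = {
    "majority": learn_bounds(majority),
    "minority": learn_bounds(minority),
}

def route(row):
    """Pick the group whose profile the row violates least."""
    return min(profiles, key=lambda g: violation(row, profiles[g]))

# Drift of the minority group relative to the majority profile.
drift = mean(violation(r, profiles["majority"]) for r in minority)
print("minority drift w.r.t. majority profile:", drift)
print("route((3.1, 4.2)) ->", route((3.1, 4.2)))
```

A positive `drift` value signals that a profile learned from the majority describes the minority tuples poorly, which is the kind of non-conformance the abstract compares to data drift. A reweighing approach could use the same violation scores to adjust training weights instead of routing between models.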
