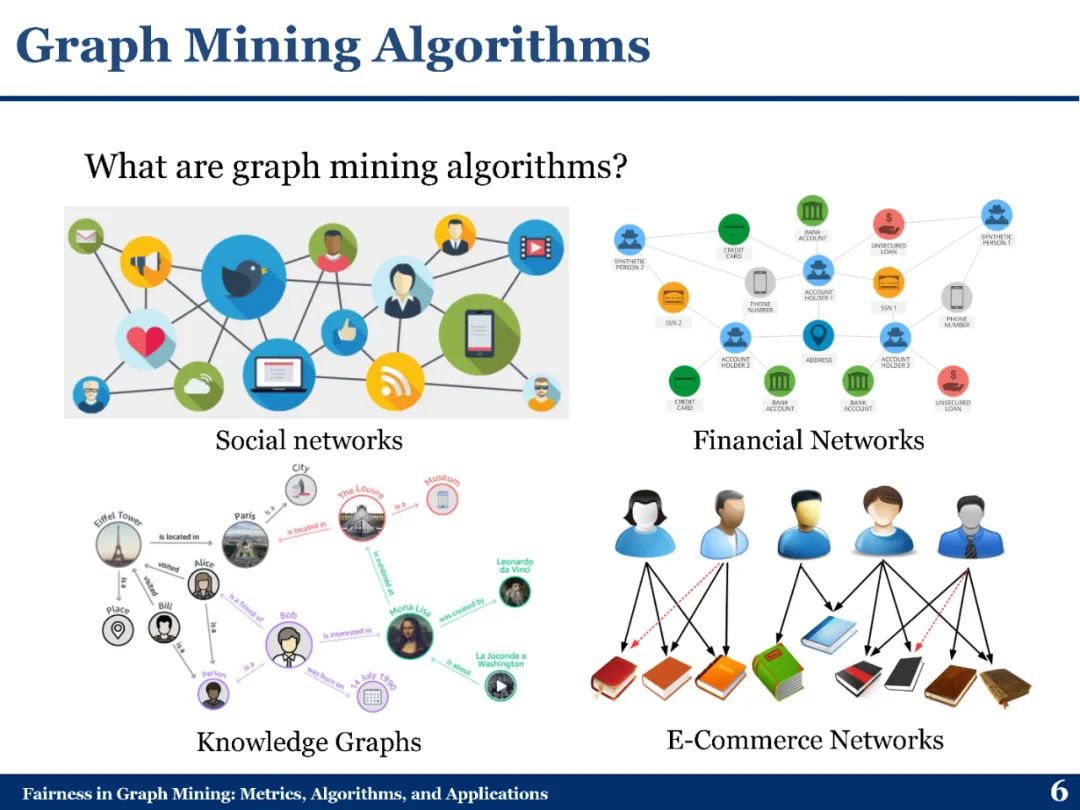

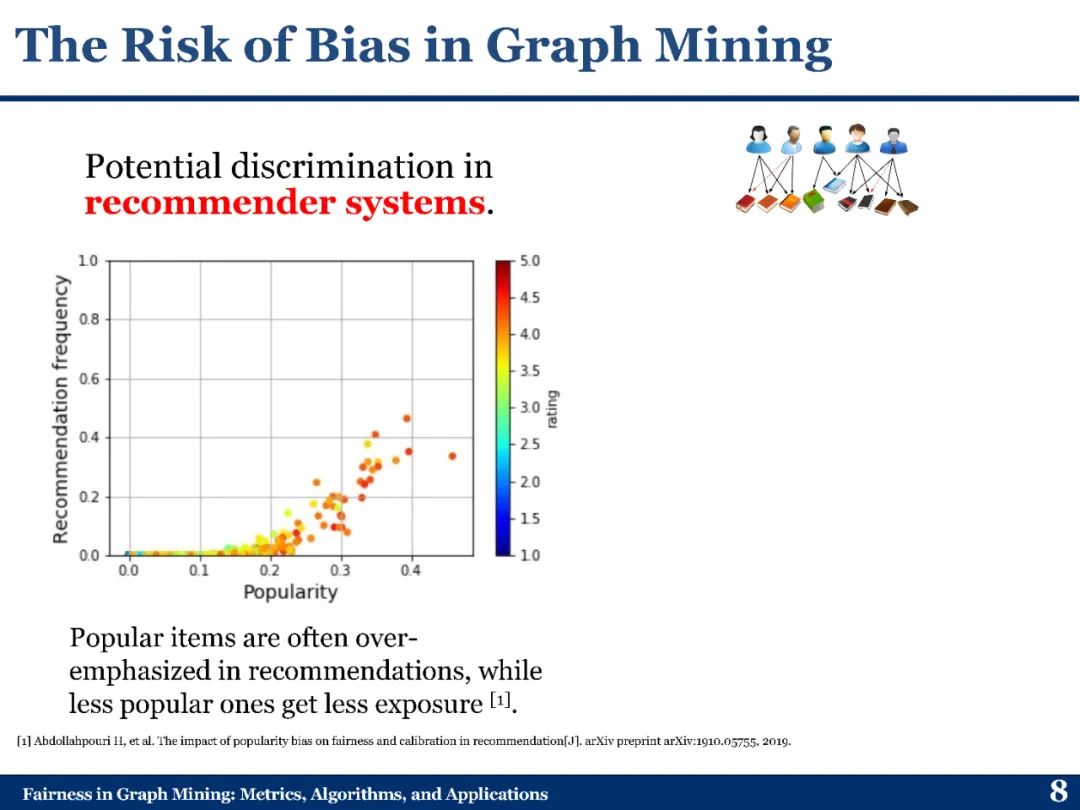

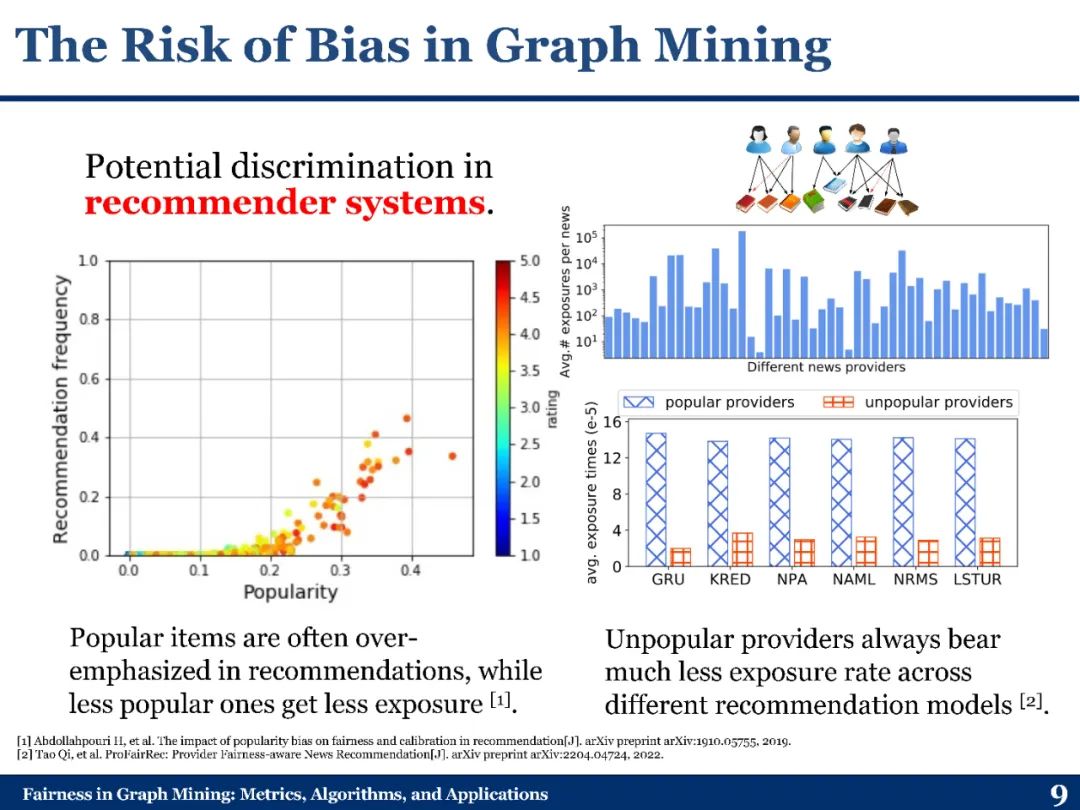

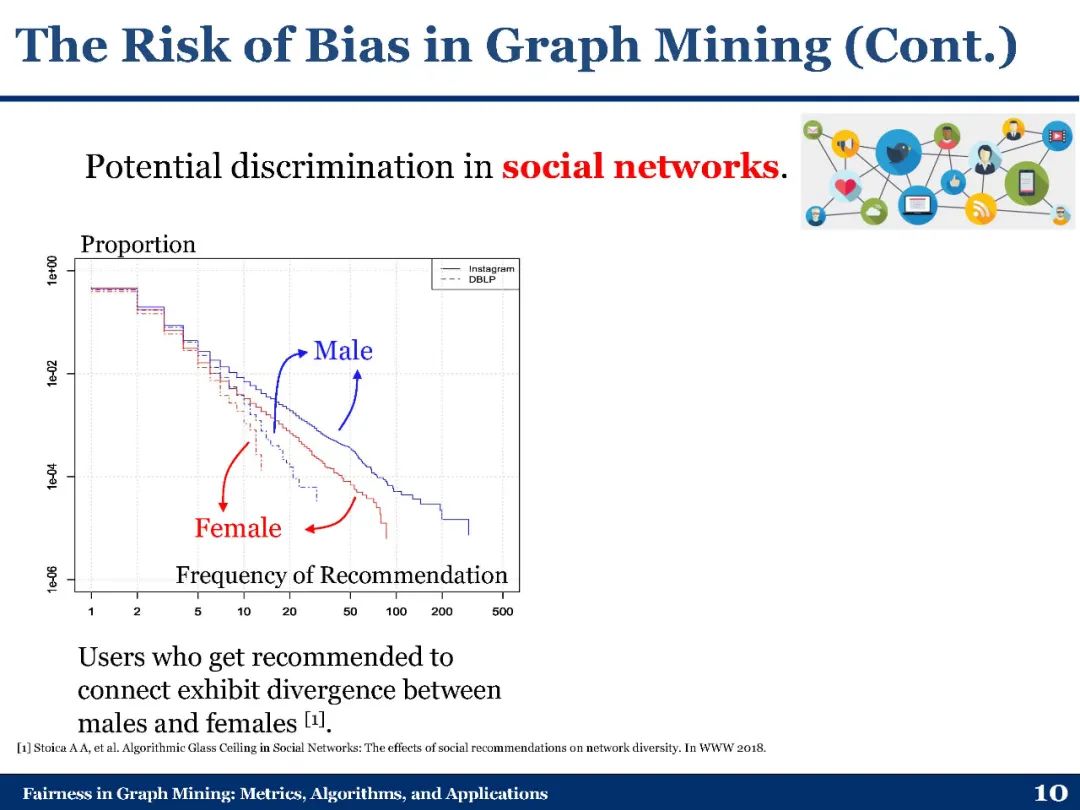

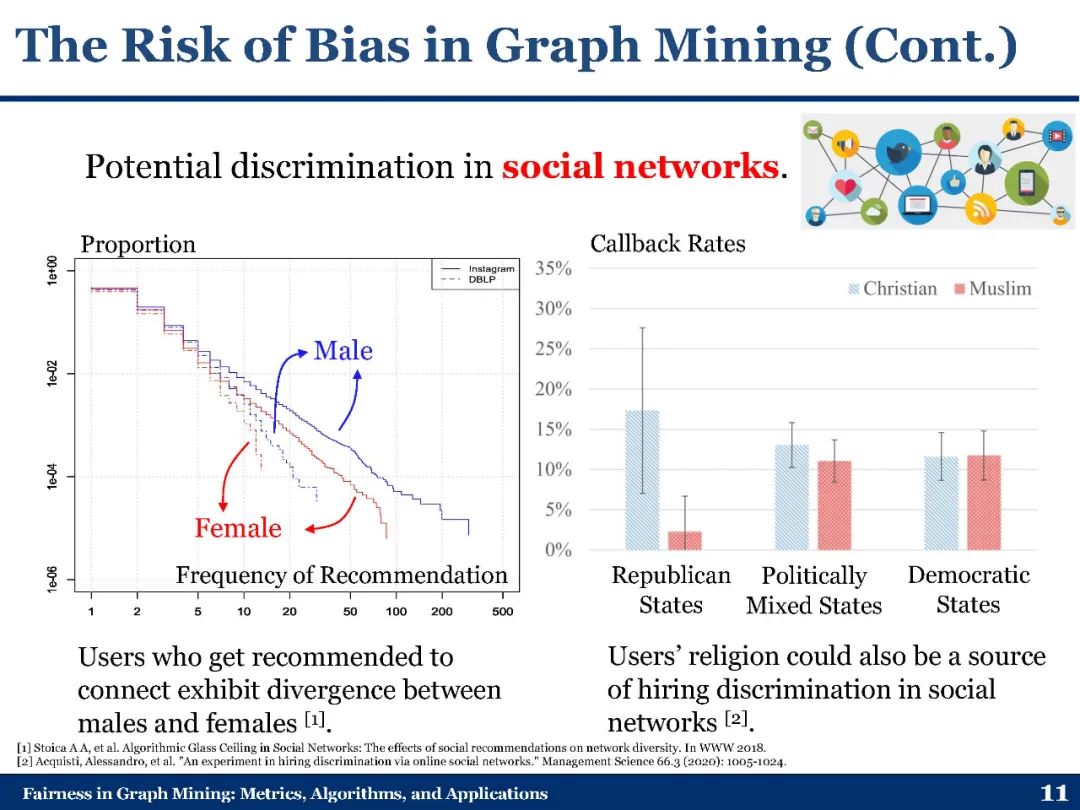

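Graph data is ubiquitous across real-world applications. To gain a deeper understanding of these graphs, graph mining algorithms have played an important role over the years. However, most graph mining algorithms do not take fairness into account. As a result, they may produce discriminatory outcomes against certain demographic subgroups or individuals. This potential for discrimination has led to growing societal concern about how to mitigate the bias exhibited by graph mining algorithms. This tutorial gives a comprehensive overview of recent research progress in measuring and mitigating bias in graph mining algorithms. It first introduces several widely used fairness notions and their corresponding metrics. It then presents an organized summary of existing techniques for debiasing graph mining algorithms. It also shows how different real-world applications benefit from these algorithms after debiasing. Finally, it offers insights into current research challenges and open problems to encourage further progress.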

https://yushundong.github.io/ICDM_2022_tutorial.html

Contents:

Part 1: Introduction

* Background and Motivation.
* An overview of graph mining tasks that have been studied on algorithmic bias mitigation.
* An overview of the applications which benefit from debiased graph mining algorithms.

Part 2: Fairness Notions and Metrics in Graph Mining

* Why is it necessary to define fairness in different ways?
* Group Fairness: graph mining algorithms should not render discriminatory predictions or decisions against individuals from any specific sensitive subgroup.
* Individual Fairness: graph mining algorithms should render similar predictions for similar individuals.
* Counterfactual Fairness: an individual should receive similar predictions when his/her features are perturbed in a counterfactual manner.
* Degree-Related Fairness: nodes with different degree values in the graph should receive similar quality of predictions.
* Application-Specific Fairness: fairness notions defined in specific real-world applications.
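As a rough illustration of the group fairness notion above, one commonly used metric is the statistical parity difference: the gap in positive-prediction rates between two sensitive subgroups of nodes. The sketch below is a minimal pure-Python version under stated assumptions: binary predictions, a binary sensitive attribute, and hypothetical toy data. The function name and inputs are illustrative and not taken from the tutorial.

```python
def statistical_parity_difference(predictions, sensitive):
    """Return |P(y_hat = 1 | s = 0) - P(y_hat = 1 | s = 1)| over nodes.

    predictions: list of 0/1 predicted labels, one per node (assumed binary).
    sensitive:   list of 0/1 sensitive-attribute values, one per node.
    """
    group0 = [p for p, s in zip(predictions, sensitive) if s == 0]
    group1 = [p for p, s in zip(predictions, sensitive) if s == 1]
    if not group0 or not group1:
        raise ValueError("both sensitive groups must be non-empty")
    rate0 = sum(group0) / len(group0)  # positive rate in group s = 0
    rate1 = sum(group1) / len(group1)  # positive rate in group s = 1
    return abs(rate0 - rate1)


# Hypothetical toy data: 8 nodes, 4 in each sensitive group.
y_hat = [1, 1, 0, 1, 0, 0, 1, 0]
s = [0, 0, 0, 0, 1, 1, 1, 1]
gap = statistical_parity_difference(y_hat, s)
print(gap)
```

A value of 0 means both groups receive positive predictions at the same rate; larger values indicate a larger disparity. Other notions in the list (individual, counterfactual, degree-related) need different metrics and are not covered by this sketch.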

Part 3: Techniques to Debias Graph Mining Algorithms

* Optimization with regularization.
* Optimization with constraint.
* Adversarial learning.
* Edge re-wiring.
* Re-balancing.
* Orthogonal projection.
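To make the first technique in the list more concrete, optimization with regularization typically adds a fairness penalty to the task loss, weighted by a trade-off coefficient. The sketch below is one possible form under stated assumptions: a binary cross-entropy task loss, a squared gap between the mean predicted scores of two sensitive groups as the penalty, and hypothetical toy scores. All function names and the weight `lam` are illustrative choices, not the tutorial's formulation.

```python
import math


def binary_cross_entropy(scores, labels):
    """Mean binary cross-entropy of predicted probabilities against 0/1 labels."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for p, y in zip(scores, labels):
        total += y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return -total / len(labels)


def parity_penalty(scores, sensitive):
    """Squared gap between the mean predicted scores of the two groups."""
    g0 = [p for p, s in zip(scores, sensitive) if s == 0]
    g1 = [p for p, s in zip(scores, sensitive) if s == 1]
    return (sum(g0) / len(g0) - sum(g1) / len(g1)) ** 2


def regularized_objective(scores, labels, sensitive, lam):
    """Task loss plus lam times the fairness penalty (lam is an assumed weight)."""
    return binary_cross_entropy(scores, labels) + lam * parity_penalty(scores, sensitive)


# Hypothetical toy node scores, labels, and sensitive attributes.
scores = [0.9, 0.8, 0.3, 0.2]
labels = [1, 1, 0, 0]
sensitive = [0, 0, 1, 1]
print(regularized_objective(scores, labels, sensitive, lam=0.0))
print(regularized_objective(scores, labels, sensitive, lam=1.0))
```

With `lam = 0` the objective reduces to the plain task loss; increasing `lam` puts more weight on closing the gap between groups, usually at some cost in task accuracy. In practice the scores would come from a trainable model and the objective would be minimized by gradient descent, which this sketch leaves out.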

Part 4: Real-World Application Scenarios

* Recommender systems.
* Applications based on knowledge graphs.
* Other real-world applications, including candidate-job matching, criminal justice, transportation optimization, credit default prediction, etc.

Part 5: Summary, Challenges, and Future Directions

* Summary of the presented fairness notions, metrics, and debiasing techniques in graph mining.
* Summary of current challenges and future directions.
* Discussion with the audience on which fairness notions and metrics should be applied to their own application scenarios.

Speakers: