We study the problem of crowdsourced PAC learning of threshold functions. This is a challenging problem, and only recently have query-efficient algorithms been established under the assumption that a noticeable fraction of the workers are perfect. In this work, we investigate a more challenging case where the majority may behave adversarially and the rest behave according to Massart noise, a significant generalization of the perfectness assumption. We show that under the semi-verified model of Charikar et al. (2017), where we have (limited) access to a trusted oracle that always returns correct annotations, it is possible to PAC learn the underlying hypothesis class with a manageable number of label queries. Moreover, we show that the labeling cost can be drastically reduced via the more easily obtained comparison queries. Orthogonal to recent developments in semi-verified or list-decodable learning that crucially rely on data distributional assumptions, our PAC guarantee holds by exploiting the wisdom of the crowd.

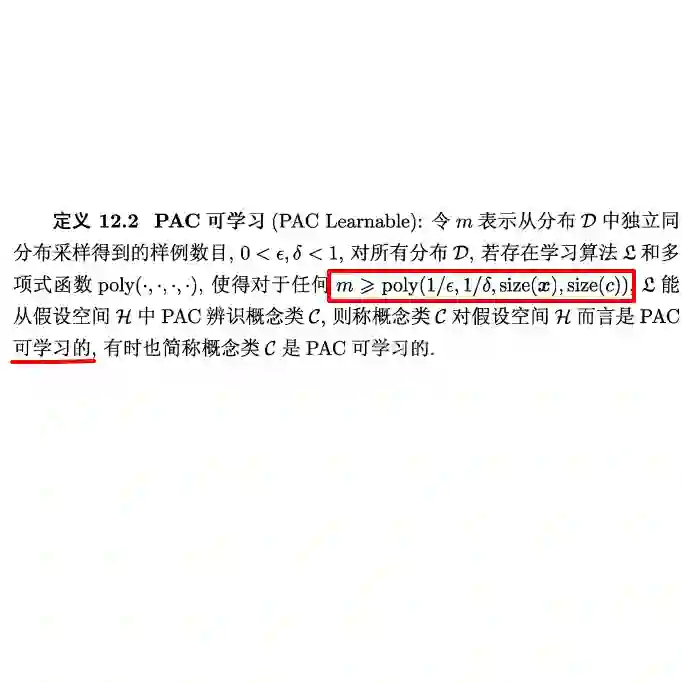
