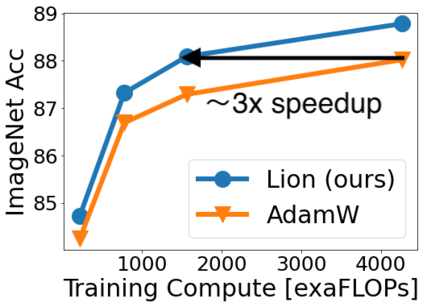

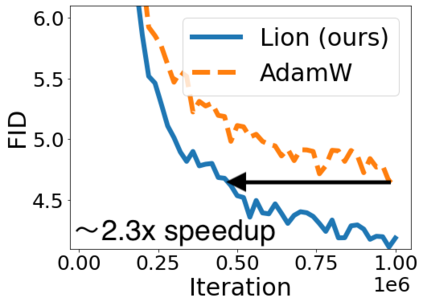

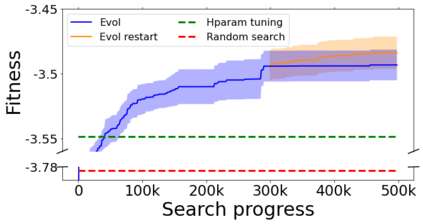

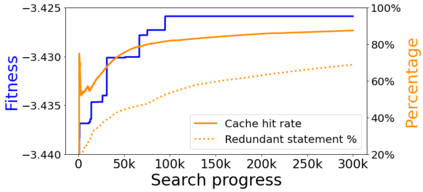

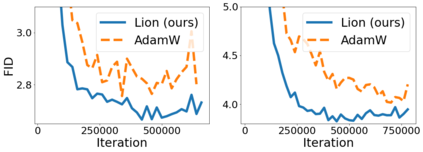

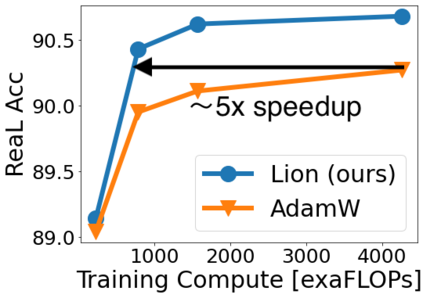

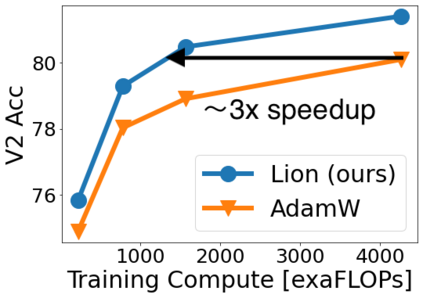

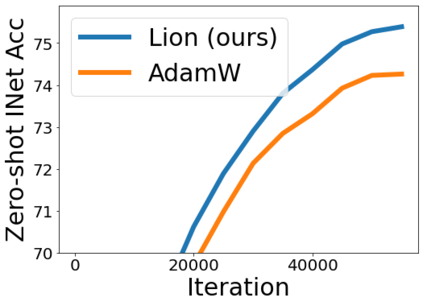

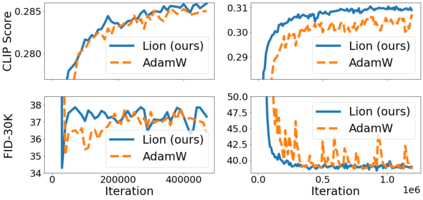

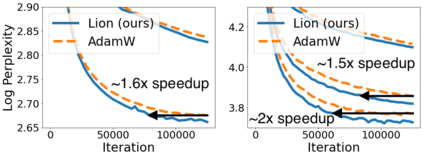

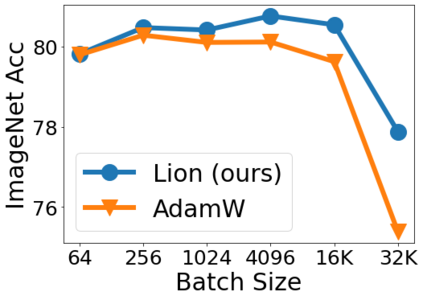

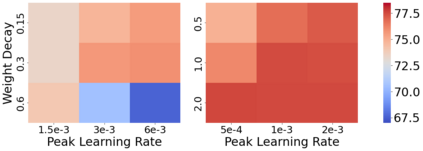

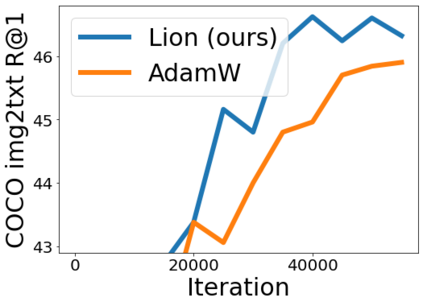

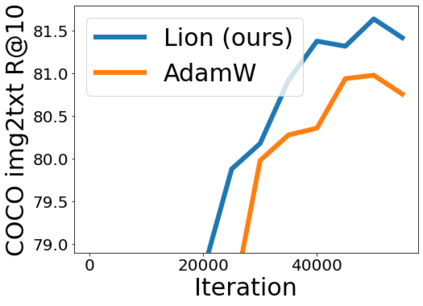

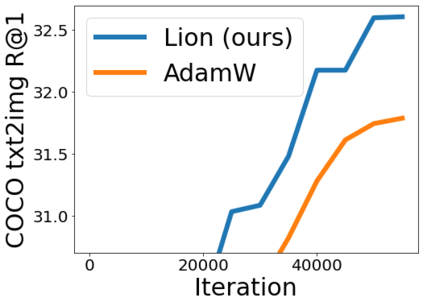

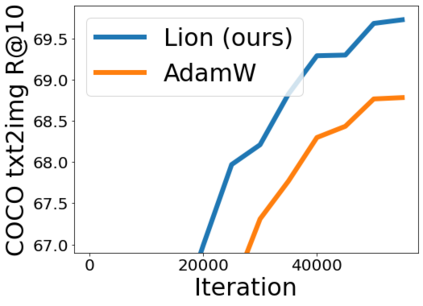

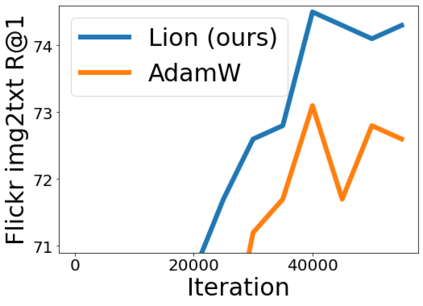

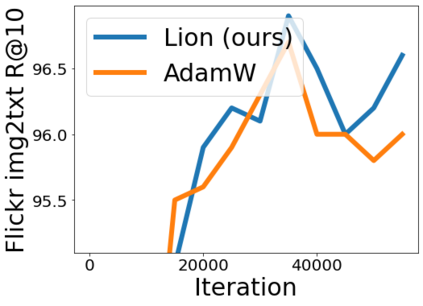

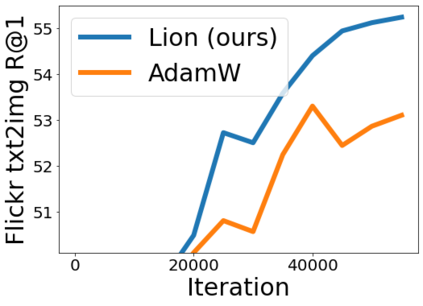

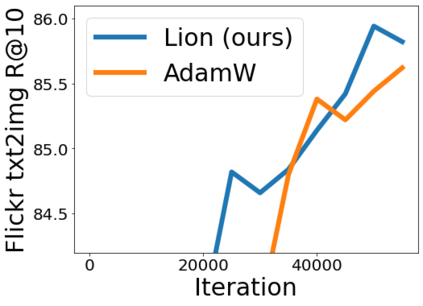

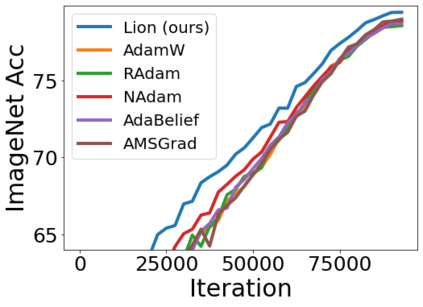

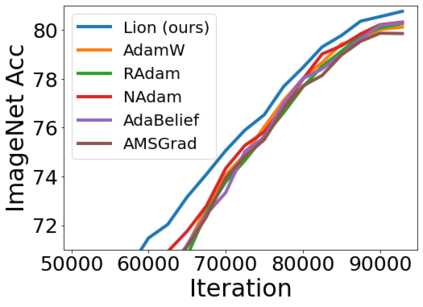

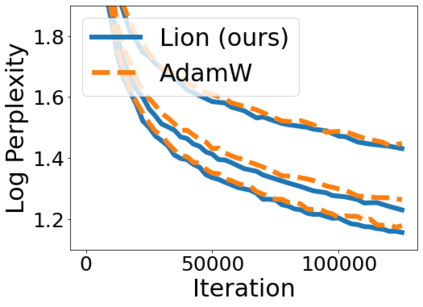

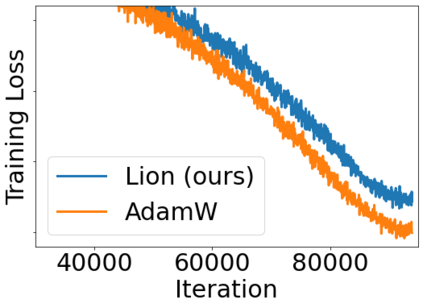

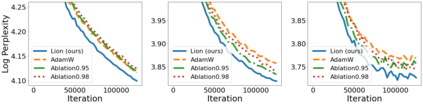

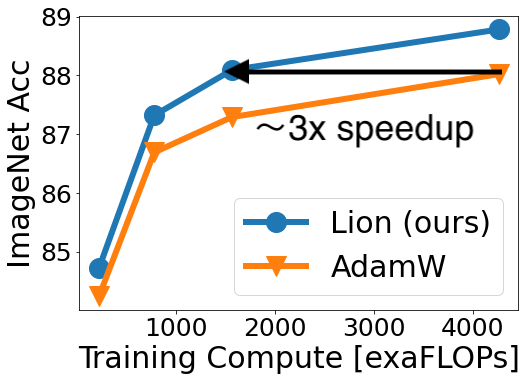

We present a method to formulate algorithm discovery as program search and apply it to discover optimization algorithms for deep neural network training. We leverage efficient search techniques to explore an infinite and sparse program space. To bridge the large generalization gap between proxy and target tasks, we also introduce program selection and simplification strategies. Our method discovers a simple and effective optimization algorithm, **Lion** (*Evo**L**ved S**i**gn M**o**me**n**tum*). It is more memory-efficient than Adam because it only keeps track of the momentum. Unlike adaptive optimizers, its update has the same magnitude for every parameter, since the update is computed through the sign operation. We compare Lion with widely used optimizers, such as Adam and Adafactor, for training a variety of models on different tasks. On image classification, Lion boosts the accuracy of ViT by up to 2% on ImageNet and saves up to 5x the pre-training compute on JFT. On vision-language contrastive learning, we achieve 88.3% *zero-shot* and 91.1% *fine-tuning* accuracy on ImageNet, surpassing the previous best results by 2% and 0.1%, respectively. On diffusion models, Lion outperforms Adam by achieving a better FID score and reducing the training compute by up to 2.3x. For autoregressive language modeling, masked language modeling, and fine-tuning, Lion performs similarly to or better than Adam. Our analysis of Lion reveals that its performance gain grows with the training batch size. It also requires a smaller learning rate than Adam because the sign function produces an update with a larger norm. Additionally, we examine the limitations of Lion and identify scenarios where its improvements are small or not statistically significant. The implementation of Lion is publicly available.
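The abstract describes Lion only in words: it tracks only the momentum and its update takes the sign. The following is a minimal sketch of one Lion-style step on flat lists of floats, assuming the update rule as we understand it from the paper's pseudocode. That rule interpolates the momentum with the current gradient, takes the sign, applies decoupled weight decay, and then updates the momentum with a second coefficient. The function names `sign` and `lion_step`, the list-based representation, and the default learning rate are illustrative assumptions. We take `beta1=0.9` and `beta2=0.99` to be the paper's defaults. This is not the released implementation.

```python
import math
from typing import List, Tuple


def sign(x: float) -> float:
    """Return 1.0, -1.0, or 0.0 depending on the sign of x."""
    return math.copysign(1.0, x) if x != 0.0 else 0.0


def lion_step(
    params: List[float],
    grads: List[float],
    momentum: List[float],
    lr: float = 1e-4,  # illustrative default (assumption), not a recommendation
    beta1: float = 0.9,
    beta2: float = 0.99,
    weight_decay: float = 0.0,
) -> Tuple[List[float], List[float]]:
    """Apply one Lion-style update (hypothetical helper) to flat lists of scalars.

    Only one state buffer (the momentum) is kept per parameter,
    whereas Adam keeps two (first and second moment estimates).
    """
    new_params: List[float] = []
    new_momentum: List[float] = []
    for theta, g, m in zip(params, grads, momentum):
        # Interpolate between the stored momentum and the current gradient.
        c = beta1 * m + (1.0 - beta1) * g
        # Keep only the sign, so every coordinate with c != 0 moves by exactly
        # lr (before weight decay), regardless of the gradient's scale.
        update = sign(c) + weight_decay * theta
        new_params.append(theta - lr * update)
        # The momentum itself is tracked with a separate coefficient, beta2.
        new_momentum.append(beta2 * m + (1.0 - beta2) * g)
    return new_params, new_momentum


if __name__ == "__main__":
    params = [1.0, -2.0, 0.5]
    grads = [0.3, -0.001, 0.0]  # gradients of very different scale
    momentum = [0.0, 0.0, 0.0]
    params, momentum = lion_step(params, grads, momentum, lr=0.1)
    # The first two coordinates each move by lr = 0.1; the third does not move.
    print(params)
```

The gradients 0.3 and -0.001 differ by more than two orders of magnitude, yet both parameters move by the same step `lr`. This is the "same magnitude for every parameter" property, and it gives each coordinate a unit-sized update. That is consistent with the abstract's remark that Lion needs a smaller learning rate than Adam.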
