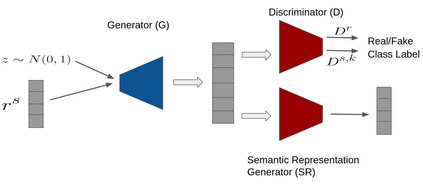

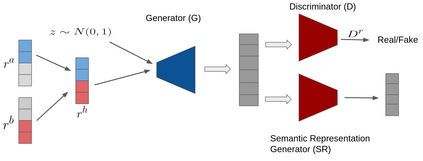

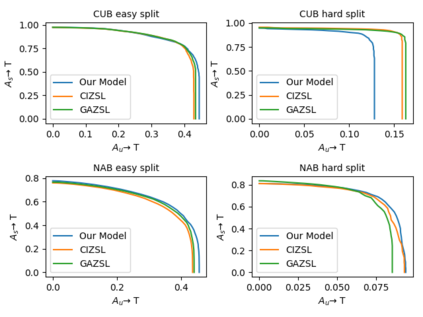

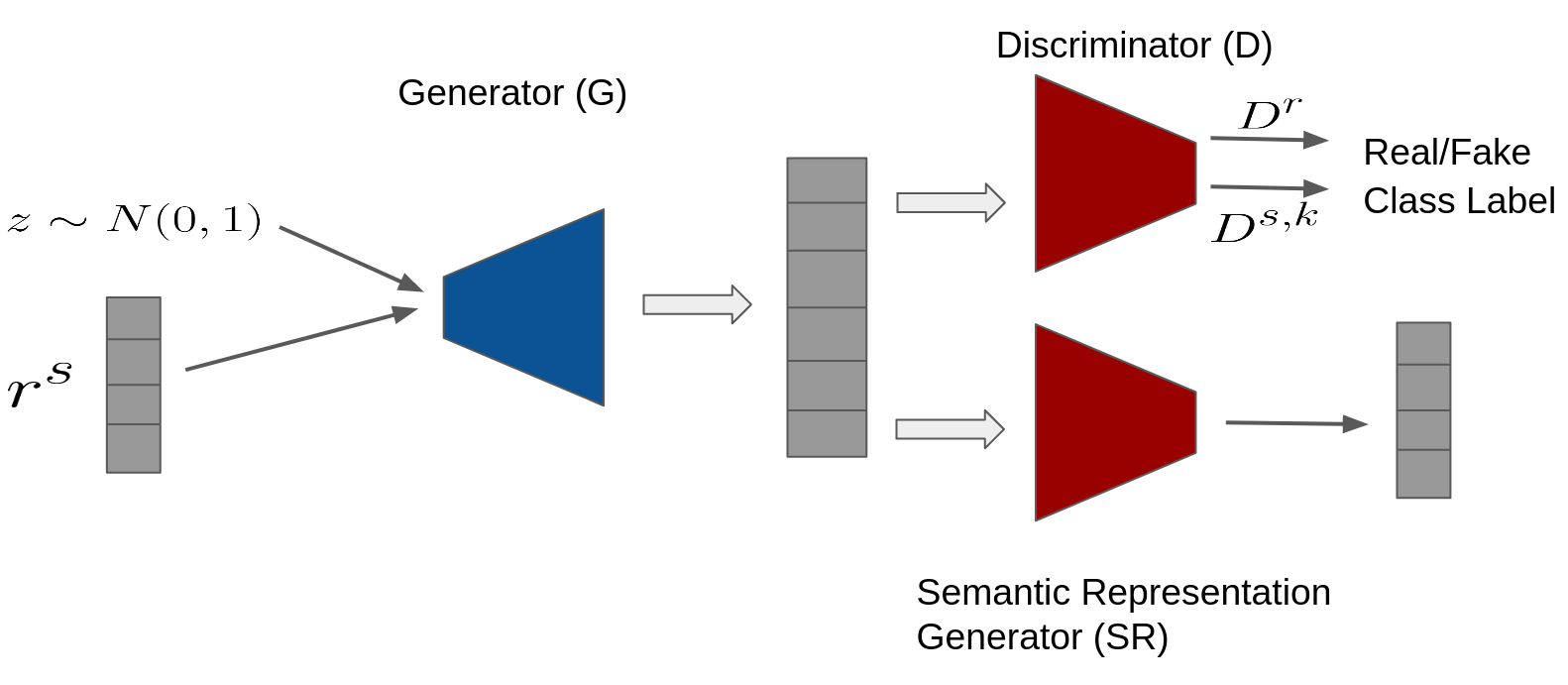

Generative zero-shot learning (ZSL) aims to learn from seen classes, transfer the learned knowledge, and generate samples of unseen classes from the descriptions of those classes. To achieve higher ZSL accuracy, models need to better understand the descriptions of unseen classes. We introduce a novel form of regularization that encourages generative ZSL models to pay more attention to the description of each category. Our empirical results demonstrate improvements in the performance of multiple state-of-the-art models on generalized zero-shot recognition and classification when trained on textual description-based datasets such as CUB and NABirds and on attribute-based datasets such as AWA2, aPY, and SUN.
