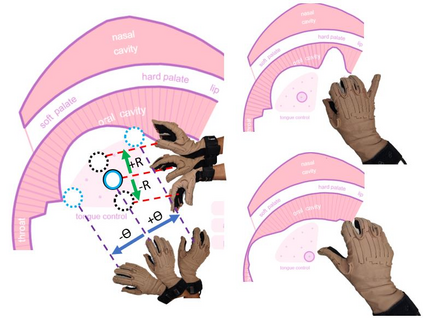

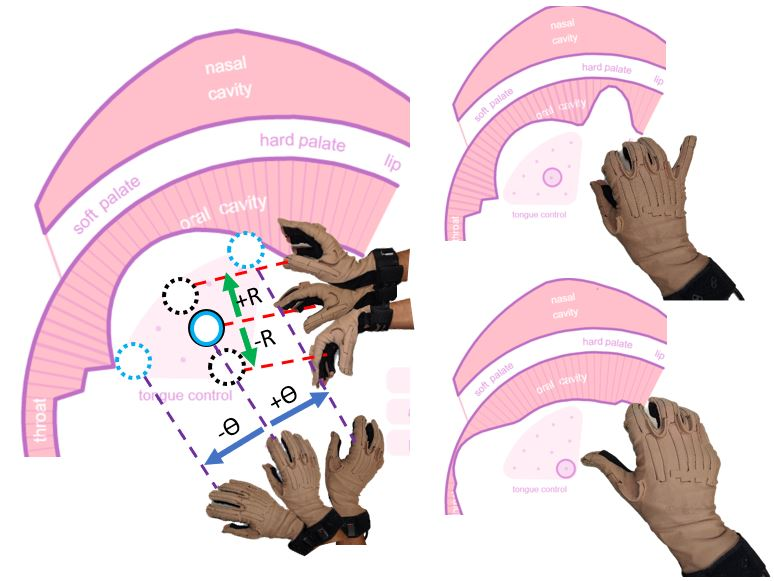

This work presents our advances in controlling an articulatory speech synthesis engine, namely Pink Trombone, with hand gestures. Our interface translates continuous finger movements and wrist flexion into continuous speech using articulatory synthesis based on the vocal tract area function. We use a Cyberglove II with 18 sensors to capture the kinematics of the wrist and the individual fingers, which in turn control a virtual tongue. The coordinates and bending values of the sensors are used to fit a spline tongue model that smooths out noisy values and outliers. Treating the upper palate as fixed and the spline model as the dynamically moving lower surface (the tongue) of the vocal tract, we compute 1D area-function values that are fed to Pink Trombone, generating continuous speech sounds. By learning to manipulate the wrist and fingers, a user can therefore learn to produce speech sounds with the hands alone, without using the vocal tract.
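The step from a fitted tongue contour to area-function values can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the mapping of glove sensors to 2D points, the flat palate height, the count of 44 tract sections, and the circular cross-section are all assumed here, and a least-squares cubic polynomial stands in for the spline model. The names `fit_tongue` and `area_function` are hypothetical.

```python
import numpy as np

N_SECTIONS = 44  # assumed number of tract sections; adjust to the synthesizer


def fit_tongue(x, y, degree=3):
    """Fit a smooth tongue contour to noisy sensor points.

    A least-squares polynomial is used as a simple stand-in for the
    spline model described in the text.
    """
    coeffs = np.polyfit(x, y, degree)
    return lambda xs: np.polyval(coeffs, xs)


def area_function(tongue, palate_height, x_min, x_max, n=N_SECTIONS):
    """Sample the palate-to-tongue gap and convert it to areas.

    The palate is assumed flat at `palate_height`; each cross-section is
    assumed circular with the gap as its diameter.
    """
    xs = np.linspace(x_min, x_max, n)
    gap = np.clip(palate_height - tongue(xs), 0.0, None)  # no negative gaps
    return np.pi * (gap / 2.0) ** 2


# Hypothetical input: 18 sensor-derived points along the tongue, with noise.
rng = np.random.default_rng(0)
sensor_x = np.linspace(0.0, 1.0, 18)
sensor_y = 0.5 * np.sin(np.pi * sensor_x) + rng.normal(0.0, 0.02, 18)

tongue = fit_tongue(sensor_x, sensor_y)
areas = area_function(tongue, palate_height=1.0, x_min=0.0, x_max=1.0)
```

The resulting `areas` array holds one value per tract section. How those values are passed to Pink Trombone depends on the synthesizer's interface, which is not specified here.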

