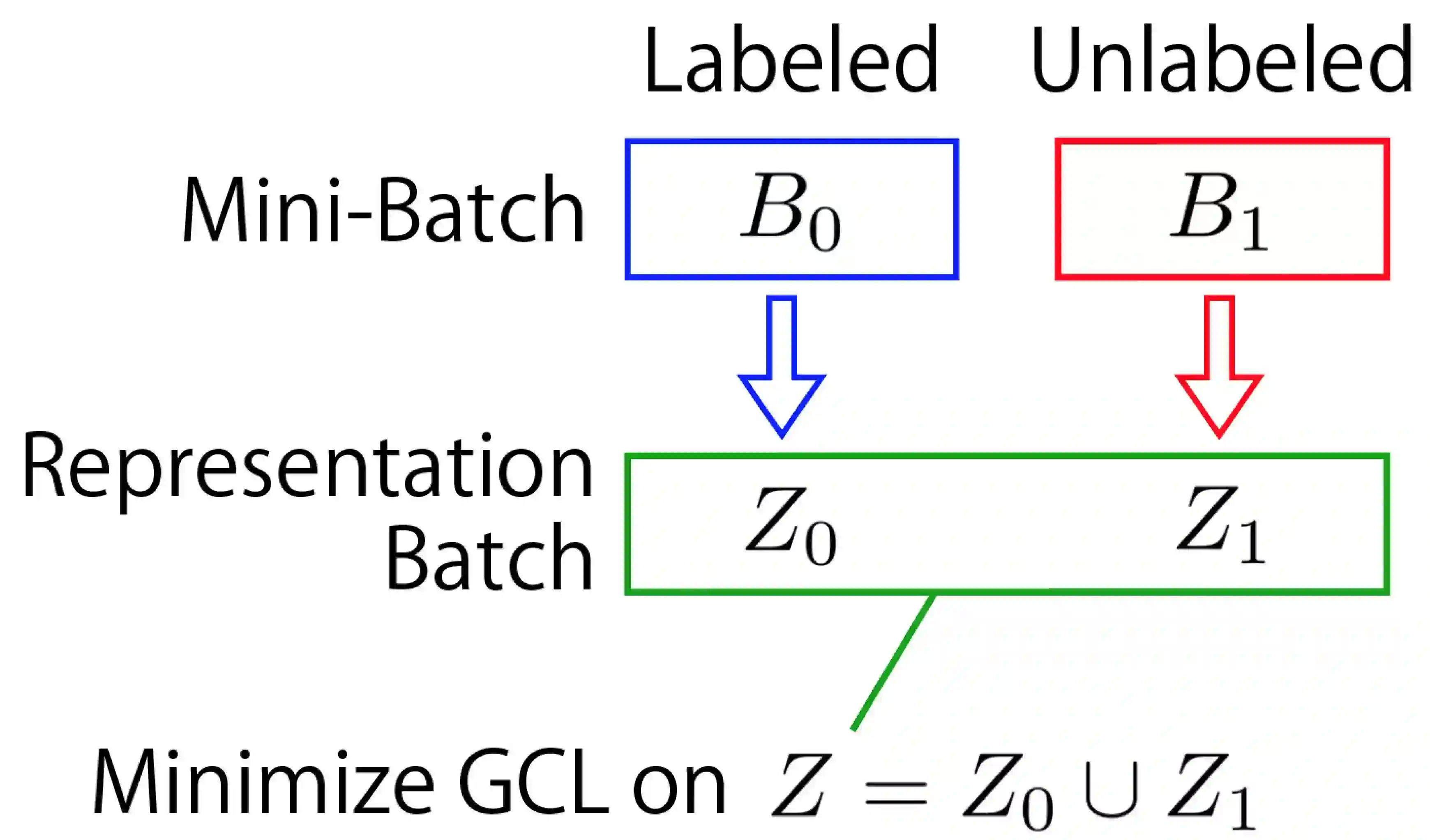

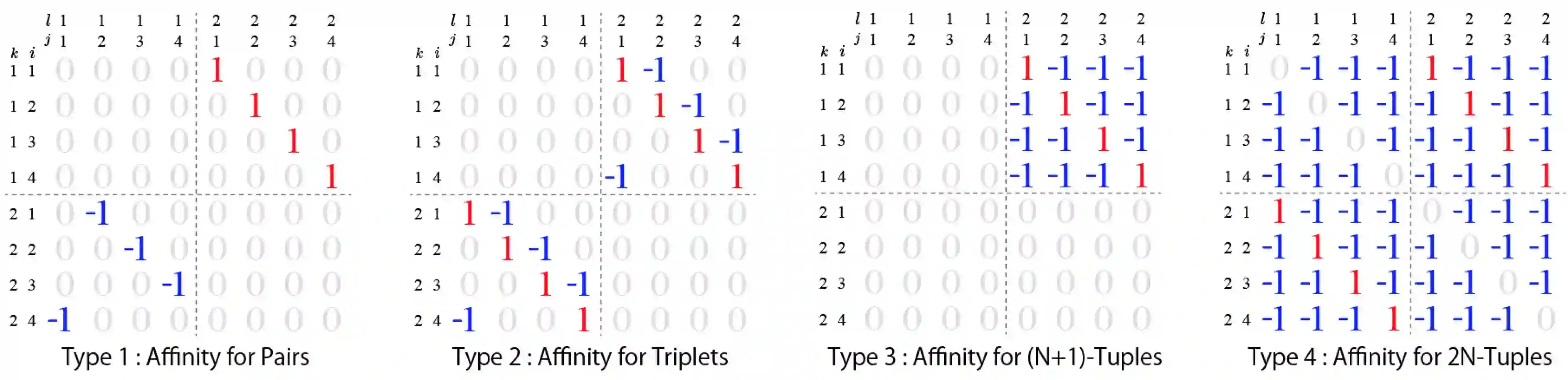

This paper introduces a semi-supervised contrastive learning framework and applies it to text-independent speaker verification. The framework employs a generalized contrastive loss (GCL). GCL unifies the losses of two different learning frameworks, supervised metric learning and unsupervised contrastive learning, and therefore naturally yields a loss for semi-supervised learning. In experiments, we apply the framework to text-independent speaker verification on the VoxCeleb dataset. We demonstrate that GCL enables speaker embeddings to be learned in three settings (supervised, semi-supervised, and unsupervised) without any change to the definition of the loss function.
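To make the idea of a single loss covering all three settings concrete, the following is a minimal sketch and not the paper's GCL definition, which the abstract does not give. It assumes a generic softmax-based contrastive loss over cosine similarities in which only the positive-pair mask depends on the available supervision: labeled segments are positives when they share a speaker label, and any two segments are positives when they come from the same utterance. The function names `positive_mask` and `contrastive_loss`, the use of `-1` for "unlabeled", the `utt_ids` argument, and the temperature value are all hypothetical choices made for illustration.

```python
import numpy as np


def positive_mask(labels, utt_ids):
    """Build an (N, N) boolean mask of positive pairs.

    labels:  speaker label per segment, -1 meaning "unlabeled" (assumed convention).
    utt_ids: id of the utterance each segment was cut from (assumed input).
    """
    labels = np.asarray(labels)
    utt_ids = np.asarray(utt_ids)
    labeled = labels >= 0
    # Supervised positives: both segments are labeled and share a speaker.
    same_speaker = (
        (labels[:, None] == labels[None, :]) & labeled[:, None] & labeled[None, :]
    )
    # Unsupervised positives: both segments come from the same utterance.
    same_utterance = utt_ids[:, None] == utt_ids[None, :]
    return same_speaker | same_utterance


def contrastive_loss(emb, mask, temperature=0.1):
    """Mean negative log-probability of positives under a row-wise softmax.

    emb:  (N, D) embeddings, N >= 2.
    mask: (N, N) boolean positive mask; the diagonal is ignored.
    """
    emb = np.asarray(emb, dtype=float)
    emb = emb / np.linalg.norm(emb, axis=1, keepdims=True)
    sim = emb @ emb.T / temperature
    np.fill_diagonal(sim, -np.inf)  # a segment is never compared with itself

    # Numerically stable log-softmax over each row.
    row_max = sim.max(axis=1, keepdims=True)
    shifted = sim - row_max
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

    pos = mask & ~np.eye(len(emb), dtype=bool)
    has_pos = pos.any(axis=1)  # anchors without any positive are skipped
    pos_log_prob = np.where(pos, log_prob, 0.0).sum(axis=1)
    per_anchor = -pos_log_prob[has_pos] / pos.sum(axis=1)[has_pos]
    return per_anchor.mean()


# Four toy 2-D "embeddings": segments 0 and 1 are close, as are 2 and 3.
emb = np.array([[1.0, 0.0], [1.0, 0.01], [0.0, 1.0], [0.01, 1.0]])

# Supervised: every segment has a speaker label.
loss_sup = contrastive_loss(emb, positive_mask([0, 0, 1, 1], [0, 1, 2, 3]))
# Unsupervised: no labels, positives come from shared utterances only.
loss_unsup = contrastive_loss(emb, positive_mask([-1, -1, -1, -1], [0, 0, 1, 1]))
# Semi-supervised: two labeled segments, two unlabeled ones.
loss_semi = contrastive_loss(emb, positive_mask([0, 0, -1, -1], [0, 1, 2, 2]))
```

In this sketch the loss function itself never changes; only the mask passed to it reflects how much supervision is available, which is the property the abstract attributes to GCL.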
