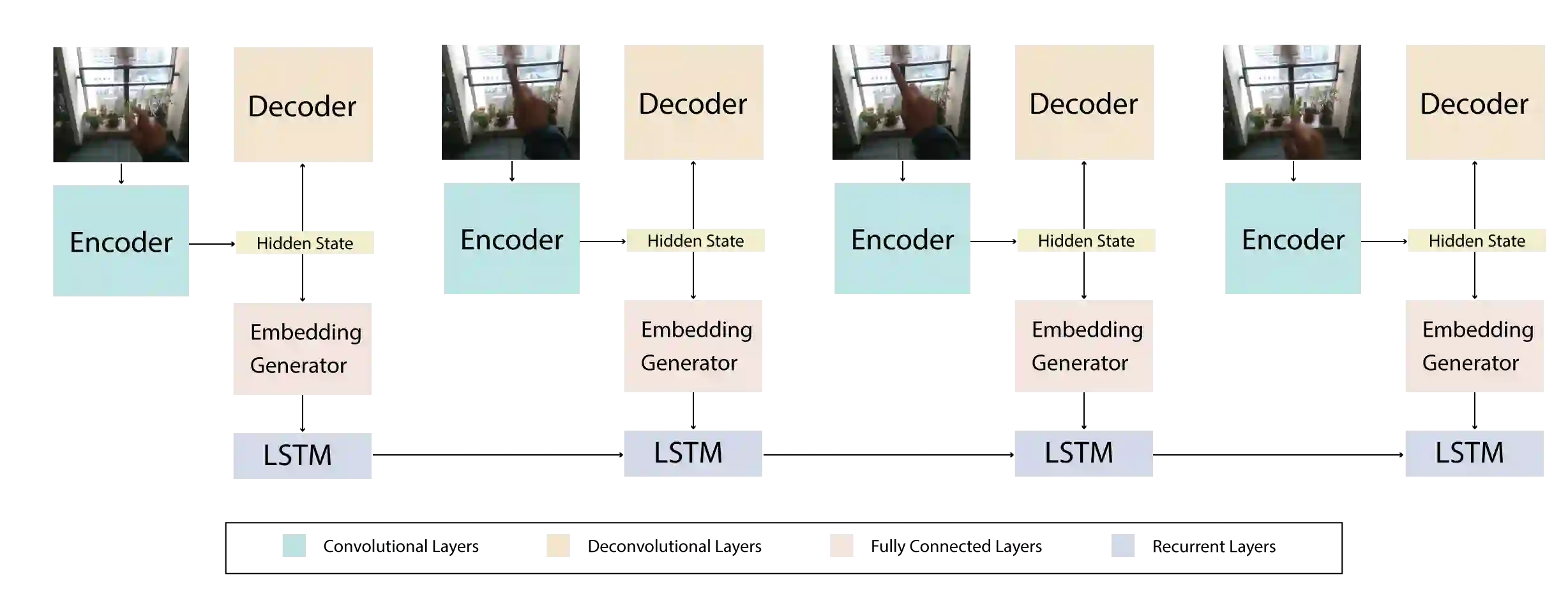

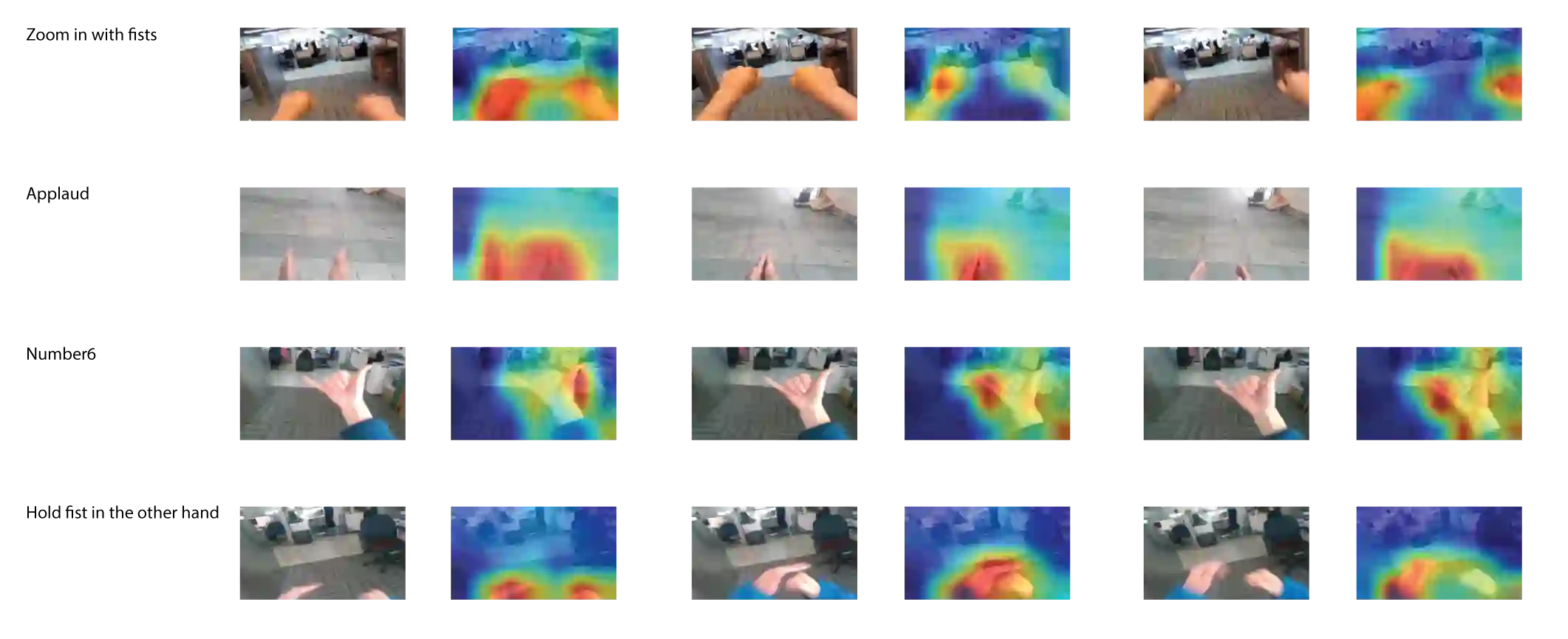

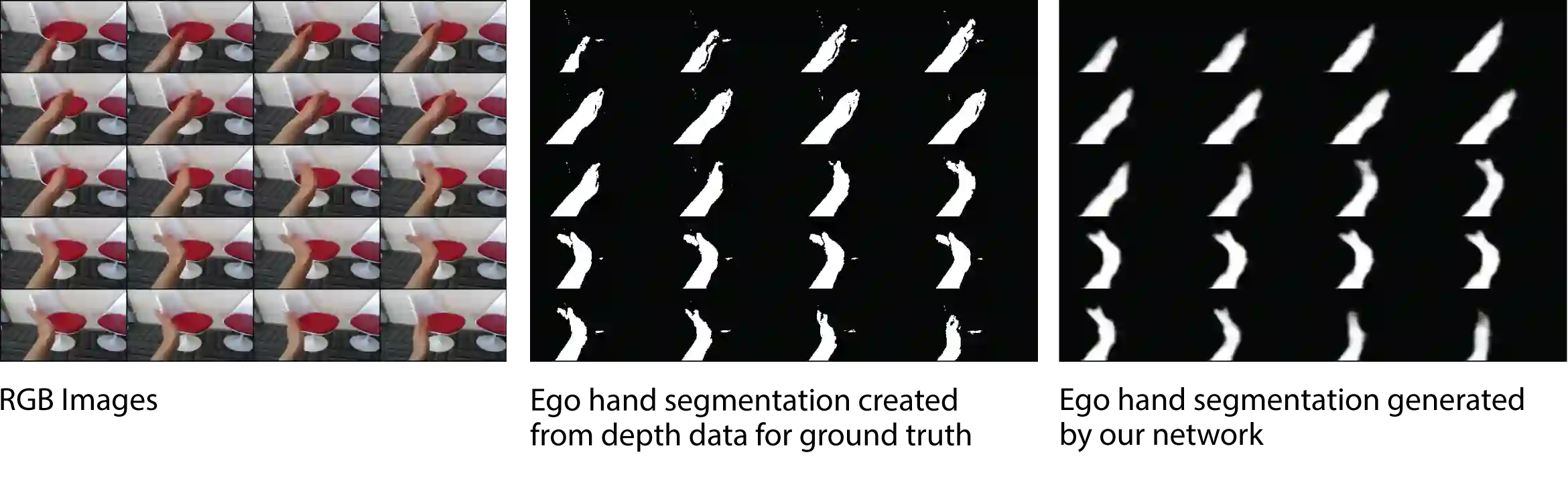

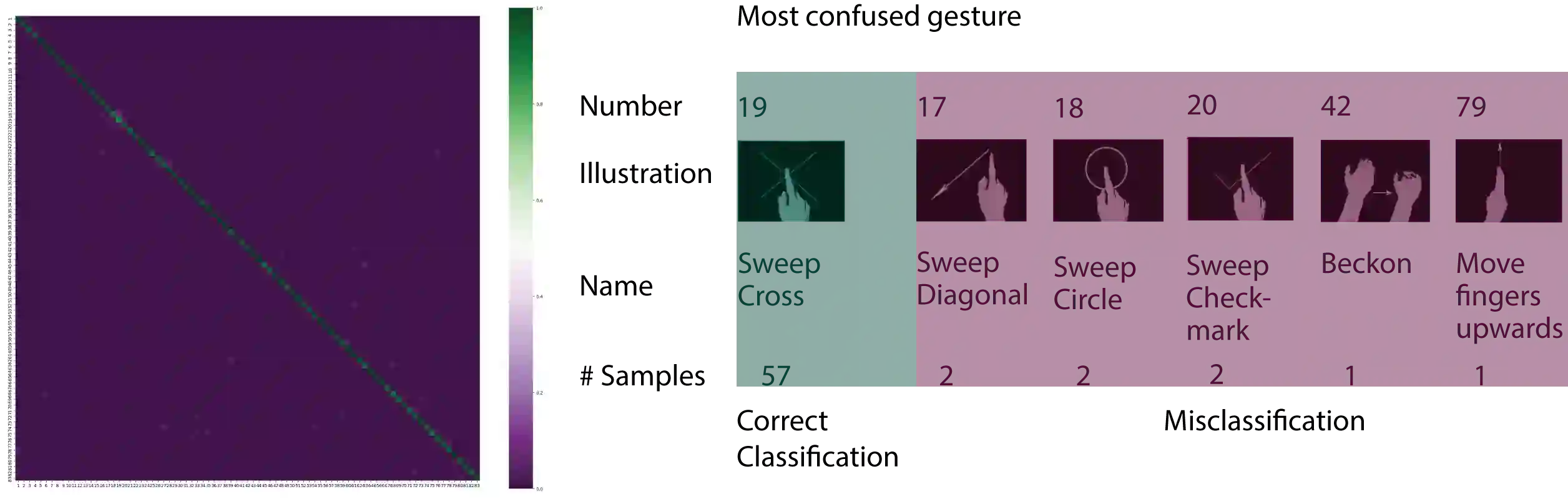

Ego hand gestures can serve as an interface in AR and VR environments. While the context of an image is important for tasks such as scene understanding, object recognition, image caption generation and activity recognition, it plays a minimal role in ego hand gesture recognition: an ego hand gesture used in AR and VR environments conveys the same information regardless of the background. With this idea in mind, we present our work on ego hand gesture recognition, which produces embeddings from RGB images containing ego hands; these embeddings are used simultaneously for ego hand segmentation and ego gesture recognition. With this approach, we achieve better recognition accuracy (96.9%) than the state of the art (92.2%) on the largest publicly available ego hand gesture dataset. We present a deep neural network that recognises ego hand gestures from videos, each containing a single gesture, by generating and recognising embeddings of ego hands from image sequences of varying length. We introduce the concept of simultaneous segmentation and recognition applied to ego hand gestures, and present the network architecture, the training procedure and the results compared to the state of the art on the EgoGesture dataset.
