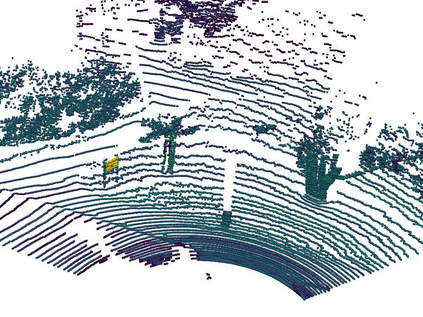

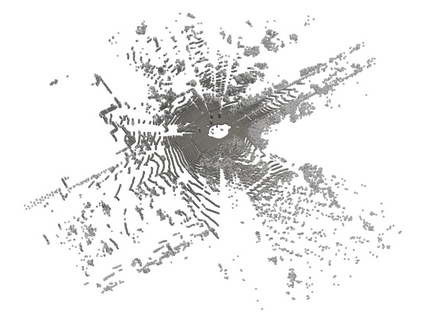

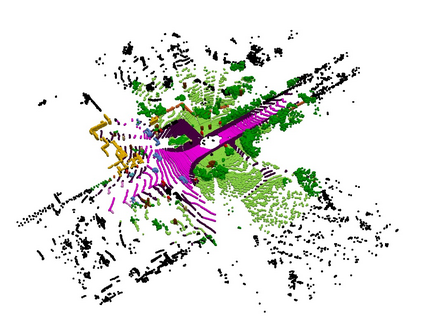

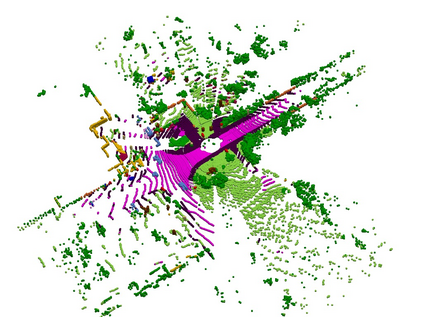

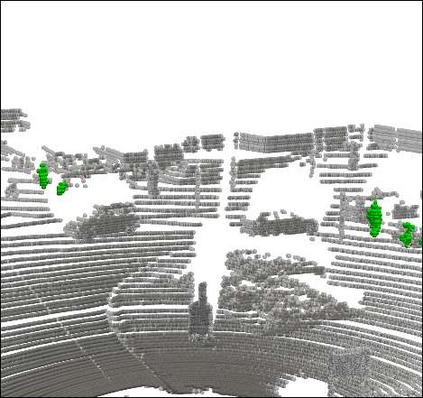

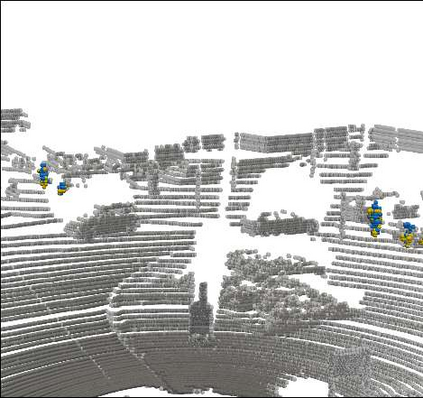

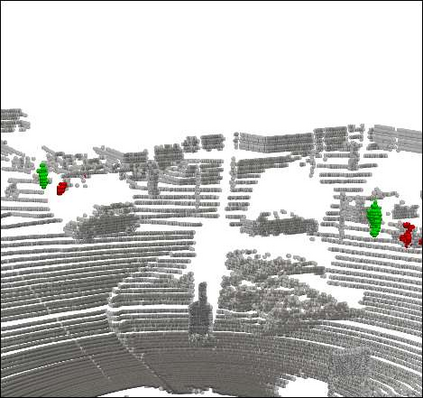

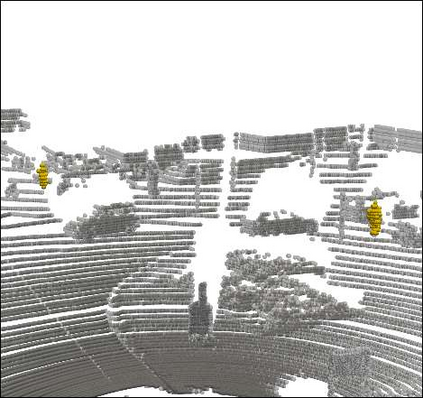

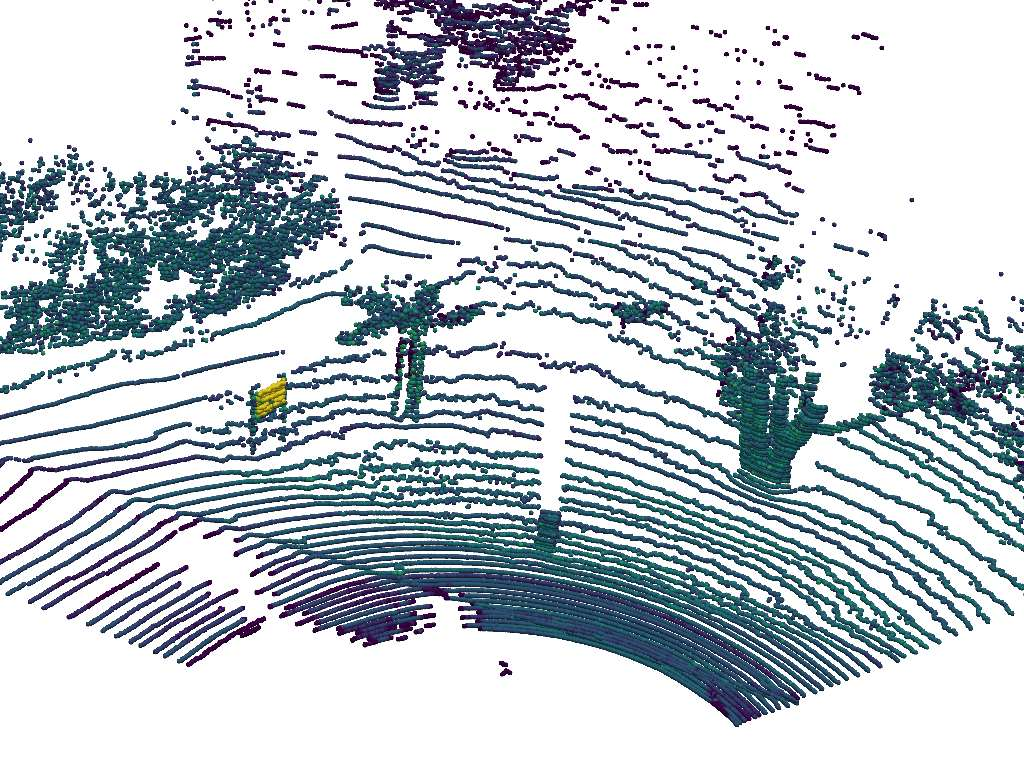

3D point cloud semantic segmentation is one of the fundamental tasks for 3D scene understanding and has been widely used in the metaverse applications. Many recent 3D semantic segmentation methods learn a single prototype (classifier weights) for each semantic class, and classify 3D points according to their nearest prototype. However, learning only one prototype for each class limits the model's ability to describe the high variance patterns within a class. Instead of learning a single prototype for each class, in this paper, we propose to use an adaptive number of prototypes to dynamically describe the different point patterns within a semantic class. With the powerful capability of vision transformer, we design a Number-Adaptive Prototype Learning (NAPL) model for point cloud semantic segmentation. To train our NAPL model, we propose a simple yet effective prototype dropout training strategy, which enables our model to adaptively produce prototypes for each class. The experimental results on SemanticKITTI dataset demonstrate that our method achieves 2.3% mIoU improvement over the baseline model based on the point-wise classification paradigm.

翻译:3D 点云的语义分解是 3D 场景理解的基本任务之一, 并被广泛用于 逆向 应用 。 许多最近 3D 点语义分解方法为每个语义类学习单一原型( 分类器重量), 并根据最近的原型对 3D 点点进行分类 。 然而, 学习每类只有一个原型, 限制了模型描述某类差异型的能力 。 本文中, 我们提议使用一个适应性的原型数来动态描述语义类的不同点模式 。 由于视觉变异器的强大能力, 我们设计了一个用于点云语义分解的 数字- 适应性原型( NAPL ) 学习模型 。 为了培训我们的NAPL 模型, 我们建议了一个简单而有效的原型辍学培训策略, 使模型能够适应每个阶级的原型。 SmanticKTI 数据设置的实验结果显示, 我们的方法比基于近点分类模式的基准模型改进了2.3% MIU 。