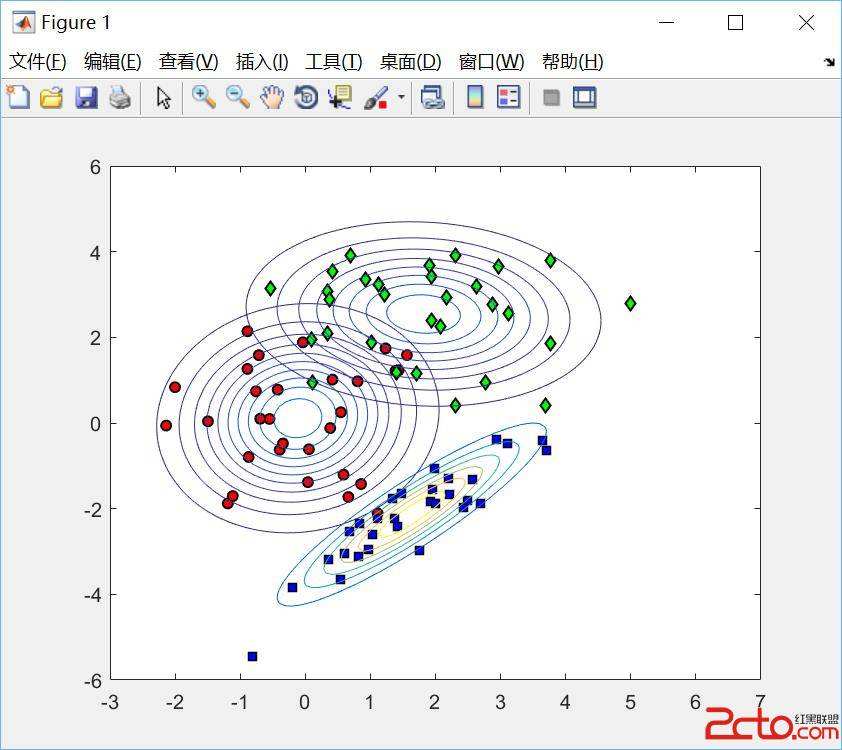

We propose an algorithm and a new method to tackle classification problems. The proposed multi-output neural tree (MONT) algorithm is an evolutionary learning algorithm trained by the non-dominated sorting genetic algorithm (NSGA)-III. Because evolutionary learning is stochastic, the hypothesis found in the form of a MONT is unique to each run of evolutionary learning; that is, each hypothesis (tree) generated has distinct properties compared with any other hypothesis, in both topological space and parameter space. This leads to a challenging optimisation problem whose aims are to minimise tree size and to maximise classification accuracy. Pareto-optimality concerns were therefore addressed by hypervolume indicator analysis. We used nine benchmark classification learning problems to evaluate the performance of MONT. Our experiments yielded MONTs that tackle the classification problems with high accuracy. Across the problems tackled in this study, MONT performed better than a set of well-known classifiers: multilayer perceptron, reduced-error pruning tree, naive Bayes classifier, decision tree, and support vector machine. Moreover, a comparison of three versions of MONT training, using genetic programming, NSGA-II, and NSGA-III, suggests that NSGA-III gives the best Pareto-optimal solution.
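The abstract frames MONT training as a two-objective problem (small trees, high accuracy) assessed with the hypervolume indicator. As a minimal sketch, assuming both objectives are written as minimisation (tree size, and error = 1 - accuracy) and assuming a hand-picked reference point, the Python below filters candidate trees to their non-dominated front and scores that front by its 2-D hypervolume. It is not the authors' NSGA-III implementation; the function names, candidate values, and reference point are hypothetical.

```python
from typing import List, Tuple

# Hypothetical objective vector for one candidate tree, both minimised:
# (tree_size, classification_error), where error = 1 - accuracy.
Point = Tuple[float, float]


def dominates(a: Point, b: Point) -> bool:
    """True if a is no worse than b in both objectives and strictly better in one."""
    return a[0] <= b[0] and a[1] <= b[1] and (a[0] < b[0] or a[1] < b[1])


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the non-dominated points (the first front of non-dominated sorting)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


def hypervolume_2d(points: List[Point], ref: Point) -> float:
    """Area dominated by the Pareto front of `points` and bounded by `ref`.

    Only non-dominated points that are strictly better than `ref` in both
    objectives contribute area.
    """
    front = sorted(
        set(p for p in pareto_front(points) if p[0] < ref[0] and p[1] < ref[1])
    )
    # Sorted by tree size ascending, the error on a 2-D front strictly
    # decreases, so the dominated region splits into disjoint horizontal slabs.
    area = 0.0
    prev_error = ref[1]
    for size, error in front:
        area += (ref[0] - size) * (prev_error - error)
        prev_error = error
    return area


if __name__ == "__main__":
    # Hypothetical candidates: (tree size, error).
    candidates = [(5, 0.20), (9, 0.10), (15, 0.08), (12, 0.15), (20, 0.08)]
    print(pareto_front(candidates))
    print(hypervolume_2d(candidates, ref=(30, 1.0)))
```

A larger hypervolume means the front lies closer to the ideal of small, accurate trees, so comparing the value across independent runs or training algorithms is one way to rank their fronts; the number depends on the reference point chosen, which must be held fixed for such a comparison to be meaningful.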
