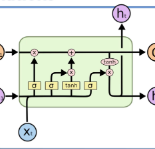

Conversation disentanglement is the problem that arises when multiple conversations take place simultaneously in the same channel: a listener must decide which utterances belong to the conversation they will respond to. We propose a new model, Dialogue BERT (DialBERT), which integrates local and global semantics within a single stream of messages to disentangle conversations that are mixed together. We employ BERT to capture the matching information of each utterance pair at the utterance level, and use a BiLSTM to aggregate and incorporate context-level information. With only a 3% increase in parameters, DialBERT improves the F1-score by 12% over BERT. The model achieves a state-of-the-art result on a new dataset proposed by IBM and surpasses previous work by a substantial margin.
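The abstract describes a two-level pipeline. BERT represents each utterance pair at the utterance level, and a BiLSTM runs over those pair representations at the context level. The dependency-free Python sketch below shows one possible reading of that data flow. It is not DialBERT's actual implementation. The following assumptions do not come from the abstract:

- Each utterance replies to exactly one utterance within a fixed `window` of earlier ones, or to itself when it starts a new conversation.
- `toy_pair_encoder` is a hypothetical word-overlap stand-in for BERT's pair vector (for example, its [CLS] output).
- The BiLSTM and output weights `w_out` are random and untrained, so the predicted links are arbitrary.
- `predict_parents` and `group_conversations` are illustrative names.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = List[float]
PairEncoder = Callable[[str, str, int], Vector]  # (target, candidate, distance) -> pair vector


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m: List[Vector], v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LSTM:
    """One-direction LSTM cell unrolled over a sequence; weights are random, not trained."""

    def __init__(self, input_size: int, hidden_size: int, rng: random.Random):
        def mat(rows: int, cols: int) -> List[Vector]:
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.hidden_size = hidden_size
        # Gates: i = input, f = forget, g = cell candidate, o = output.
        self.W = {k: mat(hidden_size, input_size) for k in "ifgo"}
        self.U = {k: mat(hidden_size, hidden_size) for k in "ifgo"}
        self.b = {k: [0.0] * hidden_size for k in "ifgo"}

    def run(self, xs: Sequence[Vector]) -> List[Vector]:
        h, c, outputs = [0.0] * self.hidden_size, [0.0] * self.hidden_size, []
        for x in xs:
            pre = {k: [a + u + b for a, u, b in
                       zip(matvec(self.W[k], x), matvec(self.U[k], h), self.b[k])]
                   for k in "ifgo"}
            i, f, o = ([sigmoid(v) for v in pre[k]] for k in "ifo")
            g = [math.tanh(v) for v in pre["g"]]
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
            outputs.append(h)
        return outputs


class BiLSTM:
    """Concatenates a forward and a backward LSTM pass over the same sequence."""

    def __init__(self, input_size: int, hidden_size: int, rng: random.Random):
        self.fwd = LSTM(input_size, hidden_size, rng)
        self.bwd = LSTM(input_size, hidden_size, rng)

    def run(self, xs: Sequence[Vector]) -> List[Vector]:
        forward = self.fwd.run(xs)
        backward = self.bwd.run(list(reversed(xs)))[::-1]  # re-align with forward order
        return [fv + bv for fv, bv in zip(forward, backward)]


def toy_pair_encoder(target: str, candidate: str, distance: int) -> Vector:
    """HYPOTHETICAL stand-in for BERT's [CLS] vector of a (candidate, target) pair."""
    t, c = set(target.lower().split()), set(candidate.lower().split())
    overlap = len(t & c) / max(1, len(t | c))  # Jaccard word overlap
    return [overlap, 1.0 / (1 + distance), 1.0 if distance == 0 else 0.0]


def predict_parents(utterances: Sequence[str], encoder: PairEncoder, bilstm: BiLSTM,
                    w_out: Vector, window: int = 5) -> List[int]:
    """For each utterance, pick the earlier utterance it replies to (itself = new conversation)."""
    parents = []
    for t, target in enumerate(utterances):
        candidates = list(range(max(0, t - window), t + 1))
        pair_vecs = [encoder(target, utterances[j], t - j) for j in candidates]  # utterance level
        contextual = bilstm.run(pair_vecs)                                        # context level
        scores = [sum(w * x for w, x in zip(w_out, hv)) for hv in contextual]
        parents.append(candidates[max(range(len(candidates)), key=scores.__getitem__)])
    return parents


def group_conversations(parents: Sequence[int]) -> List[List[int]]:
    """Follow parent links to a root; utterances sharing a root form one conversation."""
    root: List[int] = []
    for t, p in enumerate(parents):
        root.append(t if p == t else root[p])  # assumes p <= t, so root[p] is already known
    groups: dict = {}
    for t, r in enumerate(root):
        groups.setdefault(r, []).append(t)
    return list(groups.values())


if __name__ == "__main__":
    rng = random.Random(0)
    model = BiLSTM(input_size=3, hidden_size=4, rng=rng)
    w_out = [rng.uniform(-1.0, 1.0) for _ in range(8)]  # 8 = 2 directions * hidden_size
    chat = ["how do i mount a usb drive", "anyone tried the new kernel",
            "try sudo mount /dev/sdb1 /mnt", "the new kernel broke my wifi",
            "mount says permission denied"]
    parents = predict_parents(chat, toy_pair_encoder, model, w_out)
    print(parents)  # untrained weights, so these links are arbitrary
    print(group_conversations(parents))
```

A real system would need two changes: replace `toy_pair_encoder` with a fine-tuned BERT, and train the BiLSTM and scoring weights. `group_conversations` then turns the per-utterance parent links into disjoint conversations, which is the output disentanglement asks for.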
