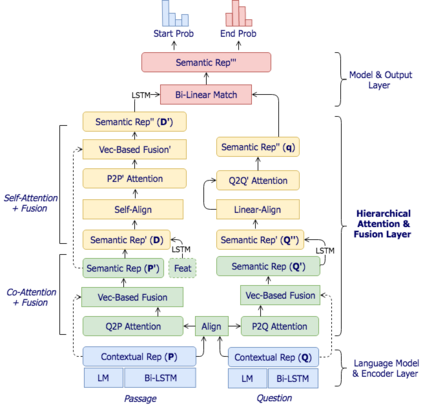

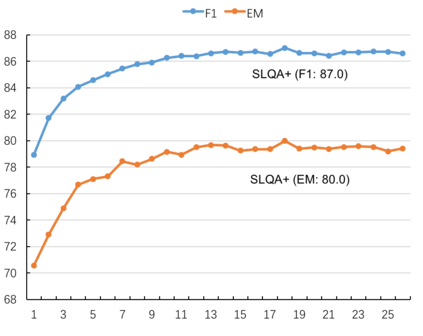

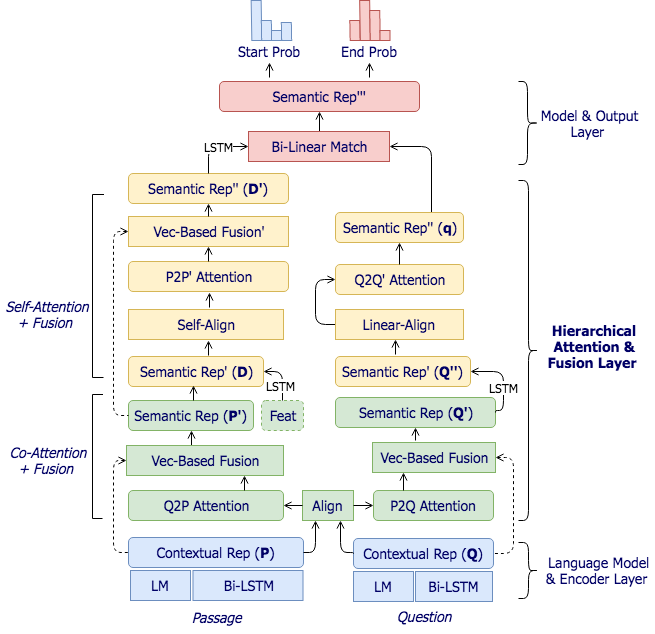

This paper describes a novel hierarchical attention network for reading-comprehension-style question answering, which aims to answer questions about a given narrative paragraph. In the proposed method, attention and fusion are conducted horizontally and vertically across layers, at different levels of granularity between the question and the paragraph. Specifically, the model first encodes the question and paragraph with fine-grained language embeddings to better capture their respective representations at the semantic level. It then applies a multi-granularity fusion approach to fully fuse information from both the global and the attended representations. Finally, it introduces a hierarchical attention network that focuses on the answer span progressively through multi-level soft alignment. Extensive experiments on the large-scale SQuAD and TriviaQA datasets validate the effectiveness of the proposed method. At the time of writing (Jan. 12th, 2018), our model ranks first on the SQuAD leaderboard for both single and ensemble models. We also achieve state-of-the-art results on the TriviaQA, AddSent and AddOneSent datasets.
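
The abstract does not spell out the equations for fusion or soft alignment, so the following is only a minimal, hypothetical sketch in plain Python of how such a stack could fit together. It assumes dot-product alignment scores and a gated fusion over the features [x; y; x∘y; x−y]. The function names (`soft_align`, `gated_fuse`, `fuse_level`), the two-level layout (paragraph-to-question alignment, then paragraph self-alignment) and the random weights are illustrative assumptions, not the authors' implementation.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[Vector]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Vector) -> Vector:
    # Subtract the max before exponentiating for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def soft_align(queries: Matrix, keys: Matrix) -> Matrix:
    """Soft alignment: each query row attends over all key rows.

    Assumption: plain dot-product scores; the paper's scoring function may differ.
    """
    dim = len(keys[0])
    aligned = []
    for q in queries:
        weights = softmax([dot(q, k) for k in keys])
        aligned.append([sum(w * k[j] for w, k in zip(weights, keys)) for j in range(dim)])
    return aligned


def gated_fuse(x: Vector, y: Vector, w_m: Matrix, w_g: Matrix) -> Vector:
    """Hypothetical gated fusion of an input vector x with its attended vector y."""
    feats = x + y + [a * b for a, b in zip(x, y)] + [a - b for a, b in zip(x, y)]
    m = [math.tanh(dot(row, feats)) for row in w_m]  # candidate fused vector
    g = [sigmoid(dot(row, feats)) for row in w_g]    # per-dimension gate in (0, 1)
    # Interpolate between the fused candidate and the original input.
    return [gi * mi + (1.0 - gi) * xi for gi, mi, xi in zip(g, m, x)]


def fuse_level(h: Matrix, context: Matrix, w_m: Matrix, w_g: Matrix) -> Matrix:
    # One level: align every row of h to the context, then fuse row by row.
    attended = soft_align(h, context)
    return [gated_fuse(p, a, w_m, w_g) for p, a in zip(h, attended)]


def rand_matrix(rows: int, cols: int, scale: float = 0.5) -> Matrix:
    return [[random.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


# Toy usage: 4 paragraph tokens, 2 question tokens, hidden size 3 (all hypothetical).
random.seed(0)
d = 3
paragraph = rand_matrix(4, d, 1.0)
question = rand_matrix(2, d, 1.0)

# Level 1: align the paragraph to the question and fuse.
level1 = fuse_level(paragraph, question, rand_matrix(d, 4 * d), rand_matrix(d, 4 * d))
# Level 2: self-align the fused paragraph against itself and fuse again.
level2 = fuse_level(level1, level1, rand_matrix(d, 4 * d), rand_matrix(d, 4 * d))
print(len(level2), len(level2[0]))  # 4 3
```

The gate lets each dimension choose between the newly fused candidate and the original representation, so a level that contributes little can pass its input through largely unchanged. This is one common design for fusion layers, chosen here for readability rather than taken from the paper.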
