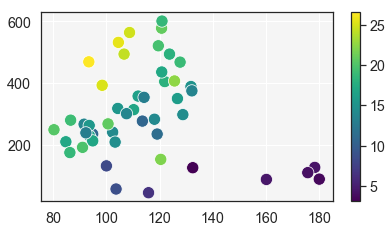

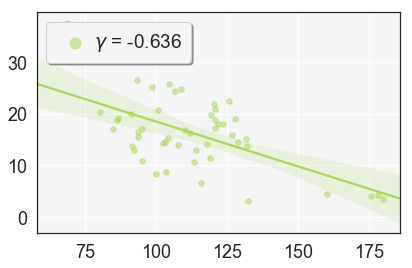

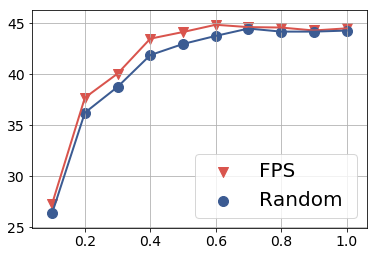

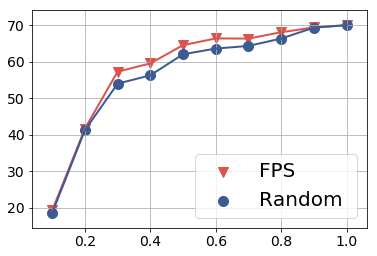

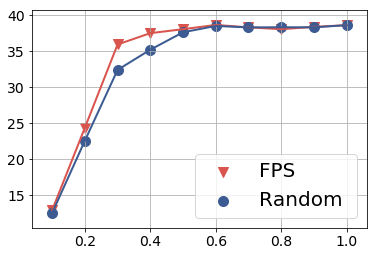

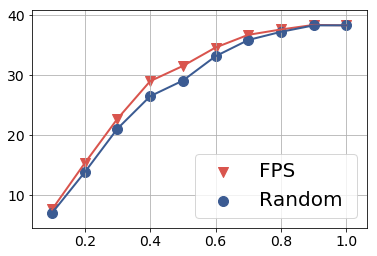

We consider a scenario where we have access to the target domain but cannot afford on-the-fly training data annotation, and instead want to construct an alternative training set from a large-scale data pool such that a competitive model can be obtained. We propose a search and pruning (SnP) solution to this training data search problem, tailored to object re-identification (re-ID), an application that aims to match the same object captured by different cameras. Specifically, the search stage (Stage I) identifies and merges clusters of source identities whose distributions are similar to that of the target domain. The pruning stage (Stage II), subject to a budget, then selects identities and their images from the Stage I output to control the size of the resulting training set for efficient training. Together, the two stages yield training sets 80% smaller than the source pool while achieving similar or even higher re-ID accuracy. Under the same budget on training data size, these training sets are also shown to be superior to existing search methods such as random sampling and greedy sampling. If the budget is lifted, training sets from the first stage alone allow even higher re-ID accuracy. We also discuss the specificity of our method to the re-ID problem, and in particular its role in bridging the re-ID domain gap. The code is available at https://github.com/yorkeyao/SnP.
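To make the two-stage idea concrete, here is a minimal sketch of a search-then-prune pipeline. It is not the authors' implementation (see the repository above for that). Several pieces are assumptions made purely for illustration: the function names `search` and `prune`, the use of a plain k-means over per-identity mean features, the Euclidean distance between mean features as a stand-in for a proper distribution distance, and the pruning rule (keep the identities nearest the target mean, then cap the images per identity).

```python
import math
from collections import defaultdict


def mean_vec(vectors):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def dist(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def kmeans(points, k, iters=20):
    """Plain k-means with deterministic farthest-first initialisation.

    Returns one cluster label per point.
    """
    centers = [points[0]]
    while len(centers) < k:
        # Next center: the point farthest from all centers chosen so far.
        centers.append(max(points, key=lambda p: min(dist(p, c) for c in centers)))
    labels = [0] * len(points)
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: dist(p, centers[c])) for p in points]
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old center if a cluster goes empty
                centers[c] = mean_vec(members)
    return labels


def search(source, target_feats, k=3, top=1):
    """Stage I (sketch): cluster source identities, then merge the `top`
    clusters whose mean feature lies closest to the target mean.

    `source` maps an identity to a list of per-image feature vectors.
    """
    ids = sorted(source)
    labels = kmeans([mean_vec(source[i]) for i in ids], k)
    clusters = defaultdict(list)
    for i, lab in zip(ids, labels):
        clusters[lab].append(i)
    target_mean = mean_vec(target_feats)

    def cluster_gap(lab):
        # Assumed stand-in for a distribution distance to the target domain.
        feats = [f for i in clusters[lab] for f in source[i]]
        return dist(mean_vec(feats), target_mean)

    ranked = sorted(clusters, key=cluster_gap)
    return sorted(i for lab in ranked[:top] for i in clusters[lab])


def prune(source, selected_ids, target_feats, id_budget, imgs_per_id):
    """Stage II (sketch): keep at most `id_budget` identities, nearest the
    target mean first, and at most `imgs_per_id` images for each of them."""
    target_mean = mean_vec(target_feats)
    ranked = sorted(selected_ids, key=lambda i: dist(mean_vec(source[i]), target_mean))
    return {i: source[i][:imgs_per_id] for i in ranked[:id_budget]}
```

Calling `search(source, target_feats)` returns the merged identity list from Stage I, and passing that list to `prune(...)` with an identity budget and a per-identity image cap gives the reduced training set. Setting the budget to the full size of the Stage I output corresponds to the "budget lifted" case described in the abstract.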
