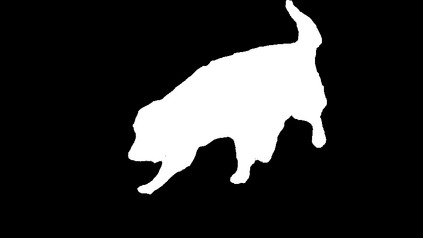

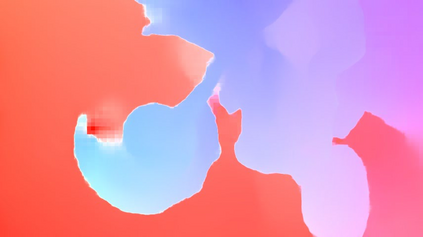

Unsupervised video object segmentation (VOS) aims to detect the most salient object in a video sequence at the pixel level. Most state-of-the-art unsupervised VOS methods combine appearance cues with motion cues obtained from optical flow maps, exploiting the fact that salient objects usually move differently from the background. However, because these methods depend heavily on motion cues, which can be unreliable in some cases, their predictions are not always stable. To reduce the motion dependency of existing two-stream VOS methods, we propose a novel motion-as-option network that uses motion cues only optionally. In addition, to fully exploit the fact that the proposed network does not always require motion, we introduce a collaborative network learning strategy. On all public benchmark datasets, the proposed network achieves state-of-the-art performance at real-time inference speed.
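
The idea of treating motion as optional can be illustrated with a toy sketch. This is not the proposed network: the function names (`encode`, `fuse_features`, `segment`), the scalar stand-in encoders, the additive fusion, and the fixed threshold are all hypothetical choices made for illustration. The sketch only shows the control flow of a two-stream model that still produces a mask when no optical flow is supplied.

```python
from typing import List, Optional, Sequence


def encode(values: Sequence[float], weight: float) -> List[float]:
    """Hypothetical stand-in encoder: scales each value.

    A real encoder would be a learned network; this is an assumption
    made only to keep the sketch self-contained.
    """
    return [weight * v for v in values]


def fuse_features(
    appearance: Sequence[float],
    motion: Optional[Sequence[float]] = None,
) -> List[float]:
    """Combine appearance features with motion features when available.

    Additive fusion is an assumption of this sketch, not a detail
    taken from the abstract.
    """
    if motion is None:
        # Motion is optional: fall back to appearance cues alone.
        return list(appearance)
    if len(motion) != len(appearance):
        raise ValueError("appearance and motion features must have the same length")
    return [a + m for a, m in zip(appearance, motion)]


def segment(
    rgb: Sequence[float],
    flow: Optional[Sequence[float]] = None,
    threshold: float = 0.5,
) -> List[int]:
    """Return a binary mask (1 = salient object) for a flattened toy frame."""
    appearance = encode(rgb, weight=1.0)
    motion = encode(flow, weight=0.5) if flow is not None else None
    fused = fuse_features(appearance, motion)
    return [1 if f > threshold else 0 for f in fused]


# The same function handles both cases: with and without optical flow.
mask_without_flow = segment([0.2, 0.6, 0.4])
mask_with_flow = segment([0.2, 0.6, 0.4], flow=[0.8, 0.0, 0.4])
```

Because the motion input is optional in such a design, a model of this shape could in principle be trained on samples with and without optical flow, which is the property the collaborative learning strategy is said to exploit.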
