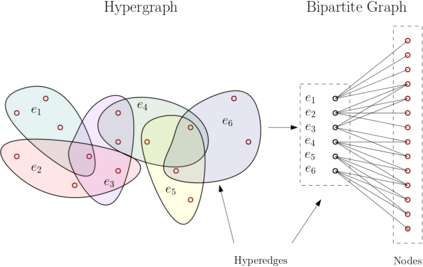

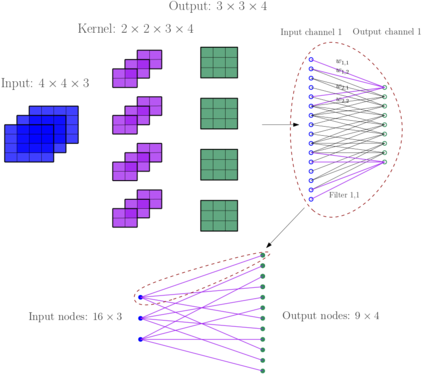

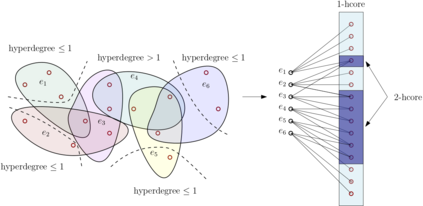

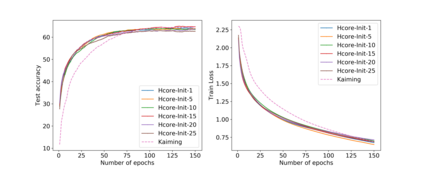

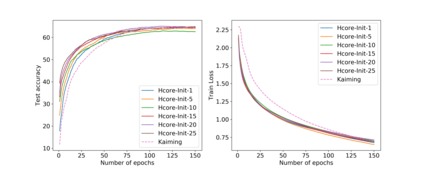

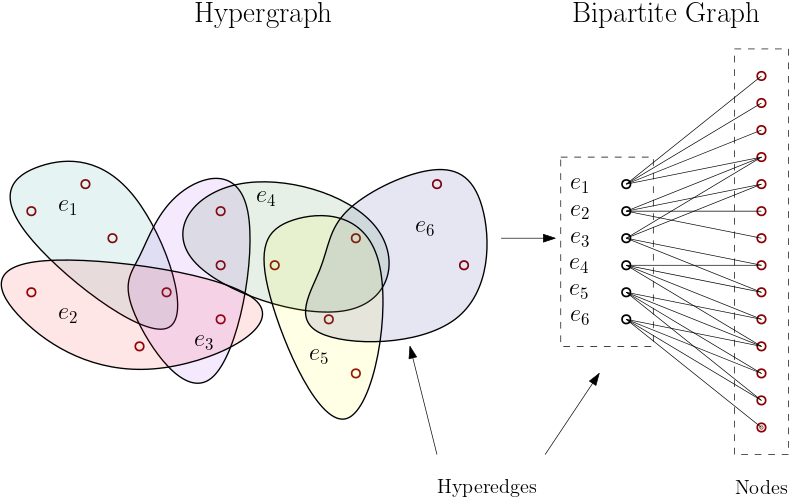

Neural networks are at the forefront of artificial intelligence, and recent years have brought many novel architectures and learning and optimization techniques for deep learning. Capitalizing on the fact that neural networks inherently constitute multipartite graphs among neuron layers, we aim to analyze their structure directly to extract meaningful information that can improve the learning process. To our knowledge, graph mining techniques for enhancing learning in neural networks have not been thoroughly investigated. In this paper, we propose an adapted version of the k-core structure for the complete weighted multipartite graph extracted from a deep learning architecture. As a multipartite graph is a combination of bipartite graphs, which are in turn the incidence graphs of hypergraphs, we design the k-hypercore decomposition, the hypergraph analogue of k-core degeneracy. We applied k-hypercore to several neural network architectures, specifically convolutional neural networks and multilayer perceptrons for image recognition tasks, after a very short pretraining. We then used the hypercore numbers of the neurons to re-initialize the weights of the neural network, thus biasing the gradient optimization scheme. Extensive experiments showed that k-hypercore outperforms state-of-the-art initialization methods.
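For readers unfamiliar with k-core degeneracy, the following is a minimal sketch of the classical core-number computation on an unweighted, undirected graph, using the standard peeling procedure. It is not the k-hypercore decomposition proposed in the paper, which operates on weighted multipartite graphs and their hypergraph view. The function name `core_numbers` and the toy layer graph (nodes `x0`..`x2` and `h0`, `h1`) are illustrative assumptions.

```python
from collections import defaultdict


def core_numbers(edges):
    """Return {node: core number} for an undirected, unweighted simple graph.

    Classical k-core peeling: repeatedly remove a node of minimum
    remaining degree; a node's core number is the largest minimum
    degree seen up to the moment it is removed.
    """
    adj = defaultdict(set)
    for u, v in edges:
        if u != v:  # ignore self-loops
            adj[u].add(v)
            adj[v].add(u)

    degree = {node: len(neighbors) for node, neighbors in adj.items()}
    remaining = set(adj)
    core = {}
    k = 0
    while remaining:
        # Simple O(n^2) selection; bucket queues give linear time.
        node = min(remaining, key=degree.__getitem__)
        k = max(k, degree[node])
        core[node] = k
        remaining.remove(node)
        for neighbor in adj[node]:
            if neighbor in remaining:
                degree[neighbor] -= 1
    return core


# Hypothetical toy graph between an input layer (x*) and a hidden layer (h*).
# Edges are unweighted here; the paper's graph is complete and weighted.
layer_edges = [
    ("x0", "h0"), ("x0", "h1"),
    ("x1", "h0"), ("x1", "h1"),
    ("x2", "h0"),
]
print(core_numbers(layer_edges))
```

In this toy graph, `x0`, `x1`, `h0`, and `h1` form a subgraph in which every node has degree 2, so they receive core number 2, while `x2` has a single edge and receives core number 1. On a complete unweighted bipartite graph every neuron in a layer would get the same core number, which suggests why a weighted adaptation is needed before the numbers can distinguish neurons.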
