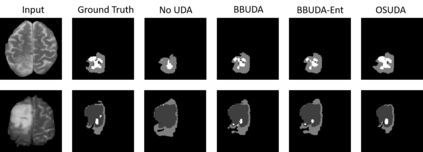

Unsupervised domain adaptation (UDA) has been widely used to transfer knowledge from a labeled source domain to an unlabeled target domain, mitigating the difficulty of labeling data in a new domain. Conventional solutions usually require access to both source and target domain data during training. However, the privacy of large-scale, well-labeled source-domain data and of trained model parameters can become a major concern in cross-center/cross-domain collaborations. To address this, we propose a practical UDA solution for segmentation that relies only on a black-box segmentation model trained in the source domain, rather than on the original source data or a white-box source model. Specifically, we adopt a knowledge distillation scheme with exponential mixup decay (EMD) to gradually learn target-specific representations. In addition, unsupervised entropy minimization is applied to regularize the confidence of target-domain predictions. We evaluated our framework on the BraTS 2018 database, where it achieves performance on par with white-box source model adaptation approaches.
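
The abstract does not spell out the exact formulation, so the following is only a minimal sketch of how the two training signals could fit together. It assumes that the distillation target is a convex mix of the black-box source prediction and the target model's own prediction, that the mixing weight decays as λ_t = exp(−γ·t) over training steps, that cross-entropy is the distillation loss, and that the entropy term is weighted by a coefficient β. The names `emd_weight`, `mixup_teacher`, `adaptation_loss`, `decay_rate`, and `beta` are hypothetical and not taken from the paper.

```python
import math
from typing import List

# Per-pixel class probabilities: probs[pixel][class]; each row sums to 1.
Probs = List[List[float]]


def emd_weight(step: int, decay_rate: float = 0.01) -> float:
    """Assumed exponential mixup decay: lambda_t = exp(-decay_rate * step).

    Starts at 1 (trust the black-box source model fully) and decays
    toward 0 (trust the target model's own predictions).
    """
    return math.exp(-decay_rate * step)


def mixup_teacher(source_probs: Probs, target_probs: Probs, lam: float) -> Probs:
    """Soft distillation target: lam * black-box output + (1 - lam) * target output.

    In an autodiff framework the target-model term would be detached
    (stop-gradient) so that it acts as a fixed label.
    """
    return [
        [lam * s + (1.0 - lam) * t for s, t in zip(src_row, tgt_row)]
        for src_row, tgt_row in zip(source_probs, target_probs)
    ]


def distillation_loss(teacher: Probs, student: Probs, eps: float = 1e-8) -> float:
    """Pixel-averaged cross-entropy between soft teacher labels and student probabilities."""
    total = 0.0
    for t_row, s_row in zip(teacher, student):
        total -= sum(t * math.log(s + eps) for t, s in zip(t_row, s_row))
    return total / len(student)


def entropy_loss(student: Probs, eps: float = 1e-8) -> float:
    """Pixel-averaged Shannon entropy; minimizing it pushes predictions toward confident ones."""
    total = 0.0
    for s_row in student:
        total -= sum(s * math.log(s + eps) for s in s_row)
    return total / len(student)


def adaptation_loss(source_probs: Probs, student_probs: Probs, step: int,
                    beta: float = 0.1, decay_rate: float = 0.01) -> float:
    """Hypothetical total objective: EMD distillation + beta * entropy minimization."""
    lam = emd_weight(step, decay_rate)
    teacher = mixup_teacher(source_probs, student_probs, lam)
    return distillation_loss(teacher, student_probs) + beta * entropy_loss(student_probs)


if __name__ == "__main__":
    # Two pixels, two classes (e.g. background vs. tumor); values are illustrative only.
    black_box = [[0.9, 0.1], [0.3, 0.7]]  # outputs queried from the black-box source model
    student = [[0.6, 0.4], [0.5, 0.5]]    # current target-model predictions
    for step in (0, 100, 500):
        print(step, round(emd_weight(step), 3),
              round(adaptation_loss(black_box, student, step), 4))
```

The design point the sketch tries to capture is that only the black-box model's output probabilities are ever used, never its weights or the source images. Early in training the target model imitates those outputs; as λ_t decays, the target model's own predictions dominate the soft label, and the entropy term sharpens its per-pixel confidence. In a real segmentation network these functions would operate on probability tensors, and gradients would flow only through the student predictions.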
