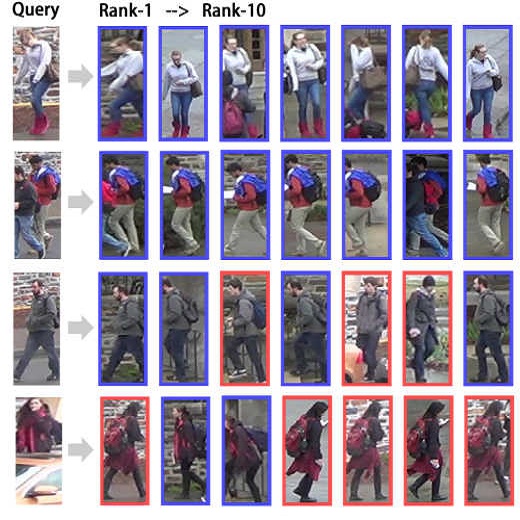

Partial person re-identification (re-id) is a challenging problem in which only partial observations (images) of persons are available for matching. However, few studies have offered a flexible solution for identifying a person from an arbitrary patch of a person image. In this paper, we propose a fast and accurate matching method to address this problem. The proposed method leverages a Fully Convolutional Network (FCN) to generate spatial feature maps whose size depends on the input image, such that pixel-level features are consistent. To match a pair of person images of different sizes, we further develop a novel method called Deep Spatial feature Reconstruction (DSR), which avoids explicit alignment. Specifically, DSR uses the reconstruction error from popular dictionary learning models to compute the similarity between different spatial feature maps. In this way, we expect the proposed FCN to decrease the similarity between image pairs of different persons and increase the similarity between image pairs of the same person. Experimental results on two partial person datasets demonstrate the efficiency and effectiveness of the proposed method in comparison with several state-of-the-art partial person re-id approaches. Additionally, it achieves competitive results on the benchmark person dataset Market1501, with a Rank-1 accuracy of 83.58%.
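The reconstruction-based matching idea can be illustrated with a small NumPy sketch. This is not the authors' implementation. It rests on several assumptions:

- **Reconstruction model.** Each pixel-level feature of the probe (a column of `X`, shape d×N) is reconstructed from the gallery's pixel-level features (columns of `Y`, shape d×M). The sketch uses an L1-regularized least-squares (lasso) code, which is one common dictionary-learning formulation. It is solved with plain ISTA, using a 0.5 scaling on the squared error and a hypothetical `beta=0.1`.
- **Distance.** The distance is the mean squared reconstruction error per probe location.
- **Features.** `toy_spatial_features` is a hypothetical stand-in for the FCN. It only flattens non-overlapping patches rather than running a learned network.
- **Names and data.** All function names and the random "images" are illustrative.

The paper's exact objective, weighting and solver may differ.

```python
import numpy as np


def toy_spatial_features(image: np.ndarray, cell: int = 4) -> np.ndarray:
    """Hypothetical stand-in for an FCN feature map (not a learned network).

    Each non-overlapping cell x cell patch becomes one L2-normalised
    "pixel-level" feature, so the number of columns grows with the input
    size, as it would for a fully convolutional network.
    Returns a (d, N) matrix: d = cell*cell*channels, N = spatial locations.
    """
    h, w, c = image.shape
    hh, ww = h // cell, w // cell
    blocks = image[: hh * cell, : ww * cell].reshape(hh, cell, ww, cell, c)
    feats = blocks.transpose(0, 2, 1, 3, 4).reshape(hh * ww, cell * cell * c)
    feats = feats / (np.linalg.norm(feats, axis=1, keepdims=True) + 1e-12)
    return feats.T


def sparse_codes(X: np.ndarray, Y: np.ndarray, beta: float = 0.1, n_iter: int = 500) -> np.ndarray:
    """Solve min_W 0.5*||X - Y W||_F^2 + beta*||W||_1 with ISTA (assumed formulation).

    Columns of Y (gallery features) act as the dictionary; each column of X
    (a probe pixel feature) gets its own sparse coefficient vector.
    """
    step = 1.0 / np.linalg.norm(Y, 2) ** 2  # 1 / Lipschitz constant of the gradient
    W = np.zeros((Y.shape[1], X.shape[1]))
    for _ in range(n_iter):
        Z = W - step * (Y.T @ (Y @ W - X))  # gradient step on the quadratic term
        W = np.sign(Z) * np.maximum(np.abs(Z) - step * beta, 0.0)  # soft-thresholding (L1 prox)
    return W


def dsr_distance(X: np.ndarray, Y: np.ndarray, beta: float = 0.1, n_iter: int = 500) -> float:
    """Mean squared reconstruction error of probe X from gallery Y (lower = more similar)."""
    W = sparse_codes(X, Y, beta, n_iter)
    return float(np.linalg.norm(X - Y @ W) ** 2 / X.shape[1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    person_a = rng.standard_normal((64, 32, 3))  # toy full-body gallery image of person A
    person_b = rng.standard_normal((64, 32, 3))  # toy full-body gallery image of person B
    # Partial probe: a noisy 32x32 crop of person A; no alignment step is needed.
    probe = person_a[16:48] + 0.05 * rng.standard_normal((32, 32, 3))

    X = toy_spatial_features(probe)  # shape (48, 64): fewer locations than the gallery
    print("same person: ", dsr_distance(X, toy_spatial_features(person_a)))
    print("other person:", dsr_distance(X, toy_spatial_features(person_b)))
```

The probe map (64 locations) and each gallery map (128 locations) have different sizes. They are compared directly because every probe column only needs to be expressible as a sparse combination of gallery columns. When a probe feature closely matches a gallery feature, the lasso residual shrinks to roughly `beta`. When no gallery feature matches, the residual is larger. That difference is why the same-person distance comes out lower here.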

翻译:部分人重新识别(重新定位)是一个具有挑战性的问题,因为只有部分观察(图像)的人可以进行匹配,然而,很少有研究为如何识别个人图像的任意补丁提供了灵活的解决方案。在本文件中,我们建议了一种快速和准确的匹配方法来解决这一问题。拟议方法利用全演网络生成一定大小的空间特征地图,使像素级特征一致。为了匹配不同大小的一对个人图像,因此,进一步开发了称为深空间特征重建(DSR)的新颖方法,以避免明确匹配。具体地说,DSR利用流行字典学习模型的重建错误来计算不同空间特征地图之间的相似性。我们期望拟议的FCN能够减少不同人的相配图像的相似性,提高同一个人的相配图像的相似性。两个部分人的数据集的实验结果显示拟议方法与若干项州级的局部人重新定位方法相比的效率和效力。此外,DSR还利用流行字典学习模型的错误来计算不同空间特征地图之间的相似性。我们期望拟议的FCN能够降低来自不同人的相配相图像的相似性。