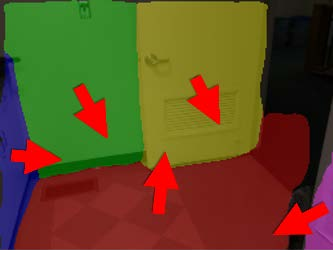

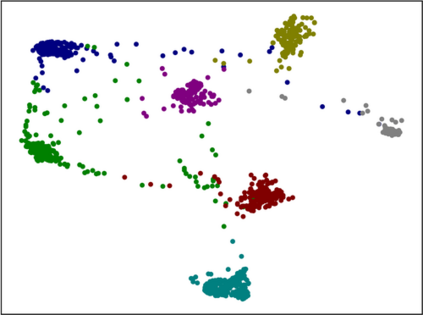

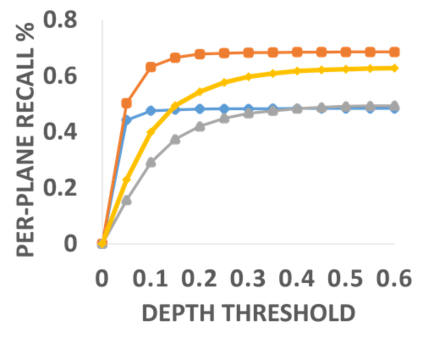

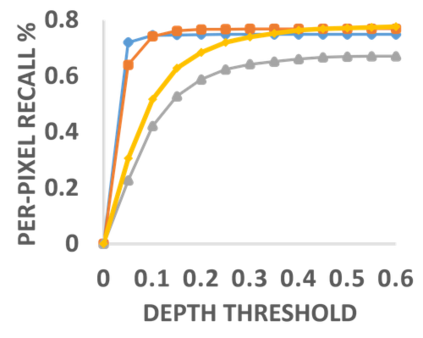

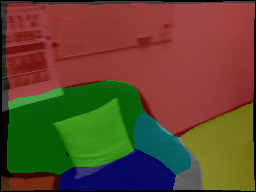

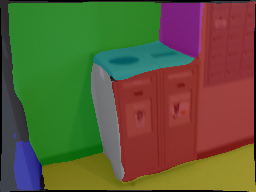

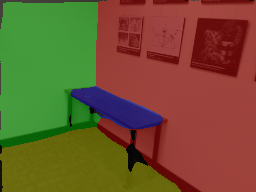

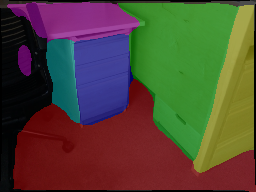

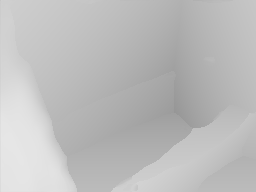

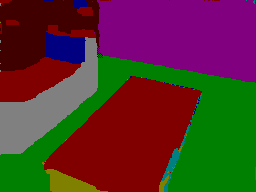

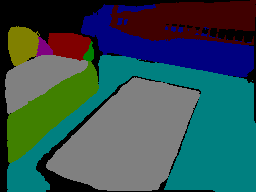

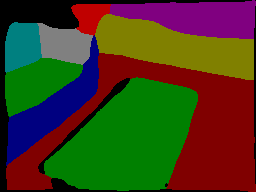

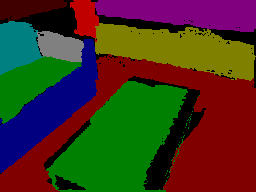

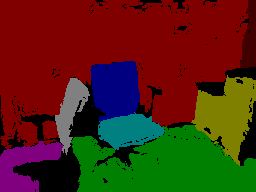

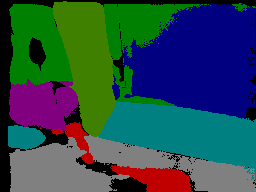

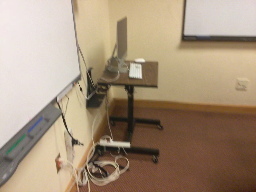

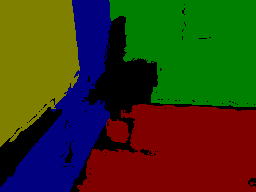

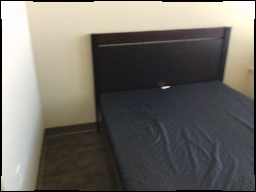

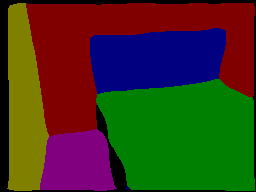

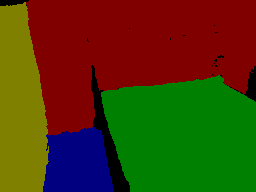

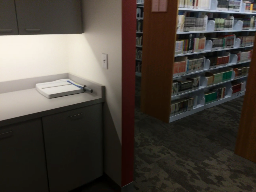

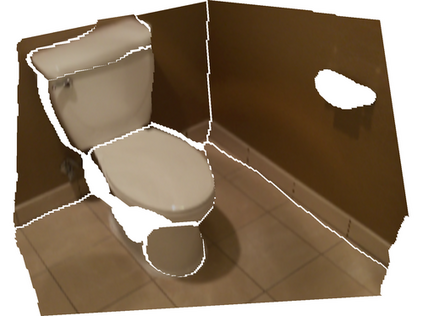

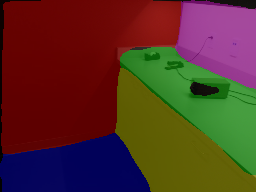

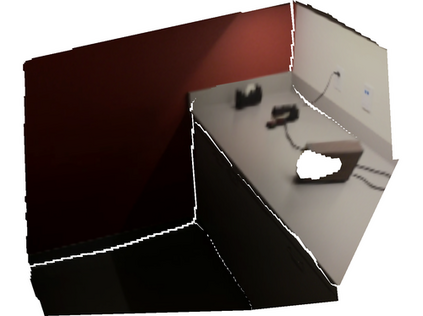

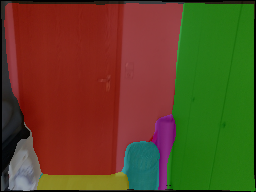

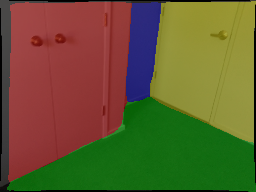

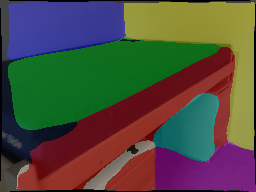

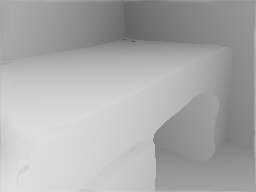

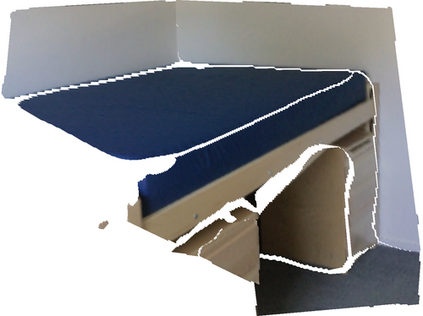

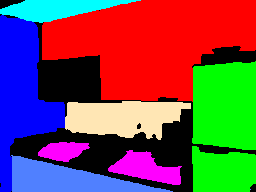

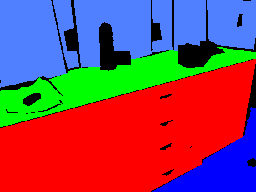

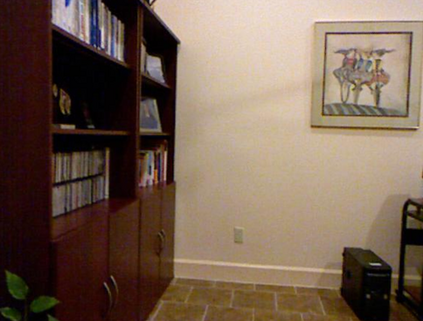

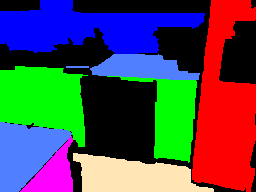

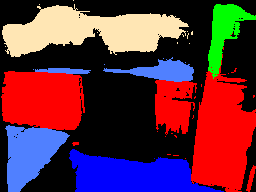

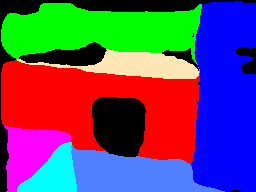

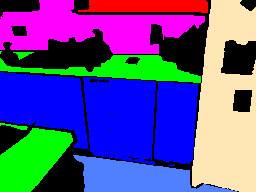

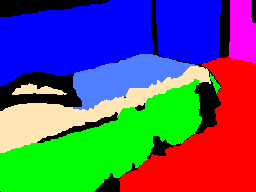

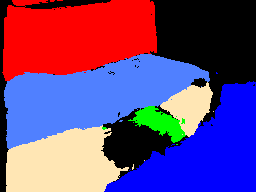

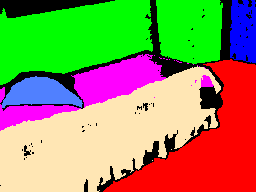

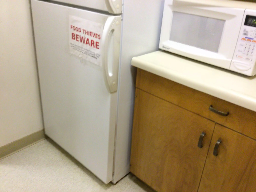

Single-image piece-wise planar 3D reconstruction aims to simultaneously segment plane instances and recover 3D plane parameters from an image. Most recent approaches leverage convolutional neural networks (CNNs) and achieve promising results. However, these methods are limited to detecting a fixed number of planes in a certain learned order. To tackle this problem, we propose a novel two-stage method based on associative embedding, inspired by its recent success in instance segmentation. In the first stage, we train a CNN to map each pixel to an embedding space where pixels from the same plane instance have similar embeddings. The plane instances are then obtained by grouping the embedding vectors in planar regions with an efficient mean shift clustering algorithm. In the second stage, we estimate the parameters of each plane instance by considering both pixel-level and instance-level consistencies. With the proposed method, we are able to detect an arbitrary number of planes. Extensive experiments on public datasets validate the effectiveness and efficiency of our method. Furthermore, our method runs at 30 fps at test time and could thus facilitate many real-time applications such as visual SLAM and human-robot interaction. Code is available at https://github.com/svip-lab/PlanarReconstruction.
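
The grouping step of the first stage can be illustrated with a plain mean shift over embedding vectors. The sketch below is a minimal pure-Python version, assuming the network's per-pixel embeddings arrive as a flat list of vectors together with a boolean mask of pixels predicted as planar. The function names `mean_shift` and `segment_planes`, the Gaussian kernel, the default bandwidth and the mode-merging threshold are illustrative assumptions; this is a textbook mean shift, not the authors' efficient variant.

```python
import math
from typing import List, Sequence, Tuple


def _sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two embedding vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean_shift(points: Sequence[Sequence[float]], bandwidth: float,
               max_iter: int = 50, tol: float = 1e-4) -> Tuple[List[int], List[List[float]]]:
    """Gaussian-kernel mean shift: move every point to a density mode, then merge nearby modes."""
    modes: List[List[float]] = []
    for start in points:
        m = list(start)
        for _ in range(max_iter):
            # Kernel weight of every sample relative to the current estimate.
            w = [math.exp(-_sq_dist(m, p) / (2 * bandwidth ** 2)) for p in points]
            total = sum(w)
            new_m = [sum(wi * p[d] for wi, p in zip(w, points)) / total for d in range(len(m))]
            shift = math.sqrt(_sq_dist(new_m, m))
            m = new_m
            if shift < tol:  # converged to a mode
                break
        modes.append(m)

    # Assumption: modes closer than half the bandwidth belong to the same plane instance.
    centers: List[List[float]] = []
    labels: List[int] = []
    for m in modes:
        for k, c in enumerate(centers):
            if _sq_dist(m, c) < (bandwidth / 2) ** 2:
                labels.append(k)
                break
        else:
            centers.append(m)
            labels.append(len(centers) - 1)
    return labels, centers


def segment_planes(embeddings: Sequence[Sequence[float]], planar_mask: Sequence[bool],
                   bandwidth: float = 0.5) -> List[int]:
    """Cluster only the pixels predicted as planar; non-planar pixels get label -1."""
    idx = [i for i, keep in enumerate(planar_mask) if keep]
    cluster_ids, _ = mean_shift([embeddings[i] for i in idx], bandwidth)
    labels = [-1] * len(embeddings)
    for i, c in zip(idx, cluster_ids):
        labels[i] = c
    return labels


# Toy example: two well-separated groups of 2-D embeddings plus one non-planar pixel.
emb = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0), (5.1, 5.0), (5.0, 5.1), (10.0, 10.0)]
mask = [True] * 6 + [False]
print(segment_planes(emb, mask, bandwidth=1.0))  # [0, 0, 0, 1, 1, 1, -1]
```

Because mean shift finds the number of modes from the data, the number of planes does not have to be fixed in advance, which is the property the abstract emphasizes. This naive version costs O(N²) per iteration for N planar pixels, so a real-time system would likely need a cheaper approximation.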

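For the second stage, the sketch below shows one way pixel-level and instance-level plane estimates can be tied together. It assumes each pixel predicts a 3-vector plane parameter n such that a 3D point Q lies on the plane when n·Q = 1 (so the plane z = 2 has n = (0, 0, 0.5)), and that instance labels come from the grouping step with -1 for non-planar pixels. The helper names, the plain per-instance averaging and the |n·Q - 1| error are hypothetical choices for illustration and may differ from the losses used in the paper.

```python
from typing import Dict, List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def instance_plane_params(pixel_params: Sequence[Sequence[float]],
                          labels: Sequence[int]) -> Dict[int, List[float]]:
    """Instance-level parameters (assumed): average the pixel-level predictions per instance.

    Label -1 marks non-planar pixels, which are skipped.
    """
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for p, lab in zip(pixel_params, labels):
        if lab < 0:
            continue
        acc = sums.setdefault(lab, [0.0, 0.0, 0.0])
        for d in range(3):
            acc[d] += p[d]
        counts[lab] = counts.get(lab, 0) + 1
    return {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}


def instance_consistency_error(points3d: Sequence[Sequence[float]], labels: Sequence[int],
                               planes: Dict[int, List[float]]) -> float:
    """Mean |n_j . Q_i - 1| over planar pixels: how well each pixel's 3D point Q_i
    fits the plane n_j of the instance it was assigned to."""
    errs = [abs(dot(planes[lab], q) - 1.0) for q, lab in zip(points3d, labels) if lab >= 0]
    return sum(errs) / len(errs) if errs else 0.0


def depth_from_plane(n: Sequence[float], ray: Sequence[float]) -> float:
    """Depth z of the point Q = z * ray on the plane n . Q = 1, i.e. z = 1 / (n . ray).

    `ray` is the back-projected pixel K^-1 [u, v, 1]^T, whose third component is 1.
    """
    return 1.0 / dot(n, ray)


# Toy example: two pixels on the plane z = 2 (n = (0, 0, 0.5)) with noisy predictions.
planes = instance_plane_params([(0, 0, 0.4), (0, 0, 0.6), (9, 9, 9)], [0, 0, -1])
print(planes)  # {0: [0.0, 0.0, 0.5]}
print(instance_consistency_error([(0, 0, 2), (1, 1, 2), (5, 5, 5)], [0, 0, -1], planes))  # 0.0
print(depth_from_plane(planes[0], (0.1, 0.2, 1.0)))  # 2.0
```

Averaging lets noisy per-pixel predictions agree on one plane per instance, while the |n·Q - 1| term checks that instance-level plane against the actual 3D points of its pixels; a depth map can then be rendered from the instance planes with `depth_from_plane`.
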