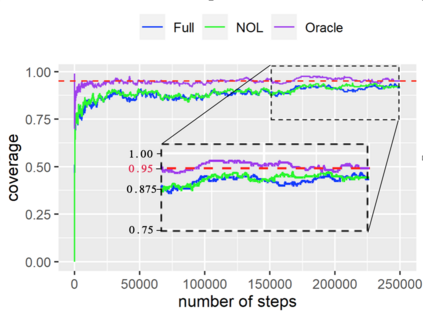

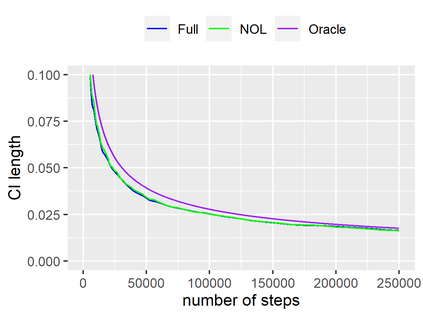

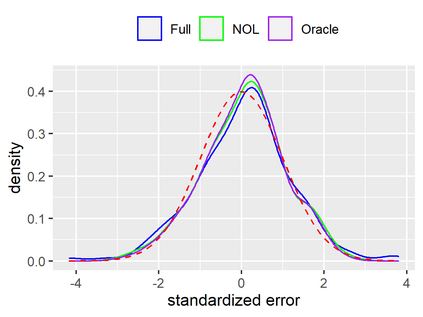

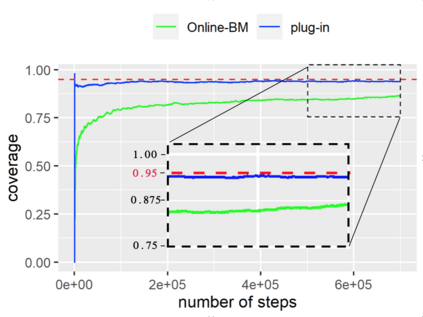

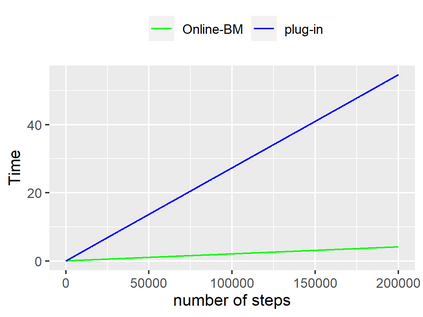

The stochastic gradient descent (SGD) algorithm is widely used for parameter estimation, especially with huge data sets and in online learning. While this recursive algorithm is popular for its computational and memory efficiency, quantifying the variability and randomness of its solutions has rarely been studied. This paper aims to conduct statistical inference for SGD-based estimates in an online setting. In particular, we propose a fully online estimator for the covariance matrix of the averaged SGD iterates (ASGD), using only the iterates from SGD. We formally establish the consistency of our online estimator and show that its convergence rate is comparable to that of offline counterparts. Based on the classic asymptotic normality results for ASGD, we construct asymptotically valid confidence intervals for the model parameters. Upon receiving new observations, we can quickly update both the covariance matrix estimate and the confidence intervals. This approach fits naturally in an online setting and takes full advantage of SGD's efficiency in computation and memory.
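The abstract does not spell out the estimator, so what follows is only a sketch of the general idea under stated assumptions, not the paper's method. It runs SGD on a toy scalar problem: estimating $\mathbb{E}[X]$ by minimizing $\mathbb{E}[(X-\theta)^2]/2$. For this problem, the classic Polyak-Juditsky result $\sqrt{n}(\bar\theta_n - \theta^*) \to N(0, A^{-1} S A^{-1})$ holds with $A = 1$ and $S = \operatorname{Var}(X)$. The variance is estimated online by a batch-means construction whose batch sizes grow with the iteration count. The estimator is fed only the SGD iterates, and each update costs O(1) time and memory, so the interval $\bar\theta_n \pm z\sqrt{\hat\sigma^2_n/n}$ can be refreshed after every observation. The class and function names, the batch schedule $a_m = m^3$, and the step sizes $\eta_i = \eta_0 i^{-\alpha}$ are all hypothetical choices.

```python
import math
import random


class OnlineBatchMeansVariance:
    """Online batch-means estimate of the long-run variance of a scalar sequence.

    Illustrative assumption: batch m covers indices m**power <= i < (m+1)**power,
    so batch sizes grow with i. Every statistic is a running sum, so each update
    costs O(1) time and memory and no past iterate is stored.
    """

    def __init__(self, power=3):
        self.power = power
        self.n = 0
        self.m = 1                       # index of the current batch
        self.next_start = 2 ** power     # first index of batch m + 1
        self.sum_x = 0.0                 # sum of all iterates, for the running mean
        self.partial = 0.0               # S_i: sum of iterates since the batch start
        self.length = 0                  # l_i: number of terms in that partial sum
        self.sum_s2 = 0.0                # running sum of S_i^2
        self.sum_ls = 0.0                # running sum of l_i * S_i
        self.sum_l2 = 0.0                # running sum of l_i^2
        self.sum_l = 0.0                 # running sum of l_i

    def update(self, x):
        self.n += 1
        if self.n == self.next_start:    # a new batch begins at this index
            self.m += 1
            self.next_start = (self.m + 1) ** self.power
            self.partial, self.length = 0.0, 0
        self.partial += x
        self.length += 1
        self.sum_x += x
        self.sum_s2 += self.partial ** 2
        self.sum_ls += self.length * self.partial
        self.sum_l2 += self.length ** 2
        self.sum_l += self.length

    def mean(self):
        return self.sum_x / self.n

    def variance(self):
        # Expands sum_i (S_i - l_i * xbar)^2 / sum_i l_i, so xbar can keep changing.
        xbar = self.mean()
        num = self.sum_s2 - 2.0 * xbar * self.sum_ls + xbar ** 2 * self.sum_l2
        return max(num, 0.0) / self.sum_l


def asgd_mean_ci(samples, eta0=1.0, alpha=0.51, z=1.96):
    """Toy problem: SGD on f(theta; x) = (x - theta)^2 / 2, which estimates E[X].

    Here A = 1 and S = Var(X), so sqrt(n) * (theta_bar - E[X]) has asymptotic
    variance Var(X). The step sizes eta_i = eta0 * i**(-alpha) are an assumption.
    """
    theta = 0.0
    est = OnlineBatchMeansVariance()
    for i, x in enumerate(samples, start=1):
        theta -= eta0 * i ** (-alpha) * (theta - x)   # gradient of f is theta - x
        est.update(theta)                              # feed the SGD iterate, not x
    theta_bar = est.mean()                             # averaged SGD (ASGD) estimate
    var_hat = est.variance()
    half = z * math.sqrt(var_hat / est.n)
    return theta_bar, var_hat, (theta_bar - half, theta_bar + half)


if __name__ == "__main__":
    random.seed(0)
    data = [random.gauss(2.0, 1.0) for _ in range(100_000)]
    theta_bar, var_hat, (lo, hi) = asgd_mean_ci(data)
    print(f"ASGD {theta_bar:.4f}, variance {var_hat:.3f}, 95% CI [{lo:.4f}, {hi:.4f}]")
```

A $d$-dimensional version would replace the squares with outer products and use the diagonal of the estimated covariance matrix for per-coordinate intervals. For a batch-means estimate to be reliable, the batch sizes must outgrow the correlation length of the SGD iterates (roughly $1/\eta_i$). The exponent 3 was picked with $\alpha = 0.51$ in mind, and other pairings are not checked here.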

翻译:参数估算广泛使用随机梯度下移算法(SGD), 特别是大型数据集和在线学习。 虽然这种递归算法对计算和内存效率很受欢迎, 但很少研究这些解决方案的可变性和随机性。 本文旨在对在线环境中基于 SGD 的估计数进行统计推论。 特别是, 我们提议只使用 SGD 的迭代词, 才能对平均 SGD 代数( ASGD) 的共变量矩阵进行完全在线估算。 我们正式建立了我们的在线估测器的一致性, 并显示趋同率与离线对应方相当。 根据ASGD 的典型的非现常态常态常态结果, 我们为模型参数构建了无现有效信任间隔。 收到新观察后, 我们就可以快速更新常数矩阵估计和信任间隔。 这种方法符合在线设置, 并充分利用 SGD: 计算和记忆的效率 。