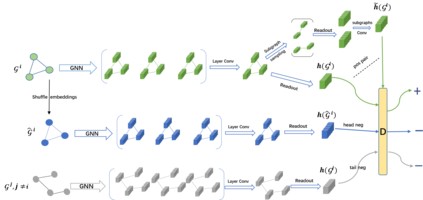

Graph Neural Networks have shown tremendous potential in dealing with graph data and have achieved outstanding results in recent years. In some research areas, labeled data are hard to obtain for technical reasons, which necessitates the study of unsupervised and semi-supervised learning on graphs. A central issue in this area is therefore whether the learned representations can capture the intrinsic features of the original graphs. In this paper, we introduce a self-supervised learning method to enhance the graph-level representations learned by Graph Neural Networks. To fully capture the original attributes of the graph, we use three information aggregators, attribute-conv, layer-conv and subgraph-conv, to gather information from different aspects. To obtain a comprehensive understanding of the graph structure, we propose an ensemble-learning-like subgraph method. To achieve efficient and effective contrastive learning, we propose a Head-Tail contrastive sample construction method that provides more abundant negative samples. With all the proposed components, which can be generalized to any Graph Neural Network, we achieve new state-of-the-art results on several benchmarks in the unsupervised setting. We also evaluate our model on semi-supervised learning tasks and make a fair comparison with state-of-the-art semi-supervised methods.
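The abstract does not spell out the contrastive objective. To illustrate why a larger supply of negative samples matters, here is a minimal sketch of a generic InfoNCE-style loss over graph-level embeddings in plain Python. It is not the paper's Head-Tail construction. The function names, the use of cosine similarity, the temperature `tau`, and the in-batch choice of negatives are all assumptions made for this example.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor: Sequence[float], positive: Sequence[float],
             negatives: List[Sequence[float]], tau: float = 0.5) -> float:
    """Generic InfoNCE loss for one anchor graph embedding (illustrative only).

    The positive pair appears in the numerator. Each negative adds a strictly
    positive term to the denominator, so more negatives make the objective
    stricter for the same anchor/positive pair.
    """
    pos = math.exp(cosine(anchor, positive) / tau)
    neg = sum(math.exp(cosine(anchor, n) / tau) for n in negatives)
    return -math.log(pos / (pos + neg))


def batch_info_nce(view_a: List[Sequence[float]], view_b: List[Sequence[float]],
                   tau: float = 0.5) -> float:
    """Mean InfoNCE over a batch (hypothetical in-batch negative scheme).

    Graph i's two views (view_a[i], view_b[i]) form the positive pair; the
    second views of all other graphs in the batch act as negatives.
    """
    losses = []
    for i, anchor in enumerate(view_a):
        negatives = [z for j, z in enumerate(view_b) if j != i]
        losses.append(info_nce(anchor, view_b[i], negatives, tau))
    return sum(losses) / len(losses)
```

For example, `info_nce([1.0, 0.0], [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]])` gives a positive loss, and appending another negative to the list can only increase it. Any scheme that supplies more informative negatives, as the abstract claims for Head-Tail construction, acts on this denominator.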

翻译:神经网图显示处理合成数据的巨大潜力,近年来取得了杰出的成果。在一些研究领域,由于技术原因很难获得标签数据。在一些研究领域,由于技术原因,很难获得标签数据,因此需要研究在图形上进行未经监督和半监督的学习。因此,这方面的问题将是,所学的演示图能否捕捉原始图形的内在特征。在本文件中,我们引入了一种自监督的学习方法,以加强图神经网图所学图形水平的表达方式。为了充分捕捉该图的原始属性,我们使用三个信息聚合器:属性conv、层conv和子conv,从不同方面收集信息。为了全面了解图形结构,我们建议采用像子绘图法那样的共通制学习方法。为了实现高效率和高效的反向学习,我们建议了一种头向导对比样本构建方法,以提供更丰富的负面样本。根据所有拟议的组成部分,可以概括到任何图表神经网,在未经监督的情况下,我们用新的状态来收集不同方面的信息。为了全面理解图形结构结构结构结构结构,我们用新的状态,在几个基准中,我们用新的状态上进行新的高级的半级的模型学习。还评估。