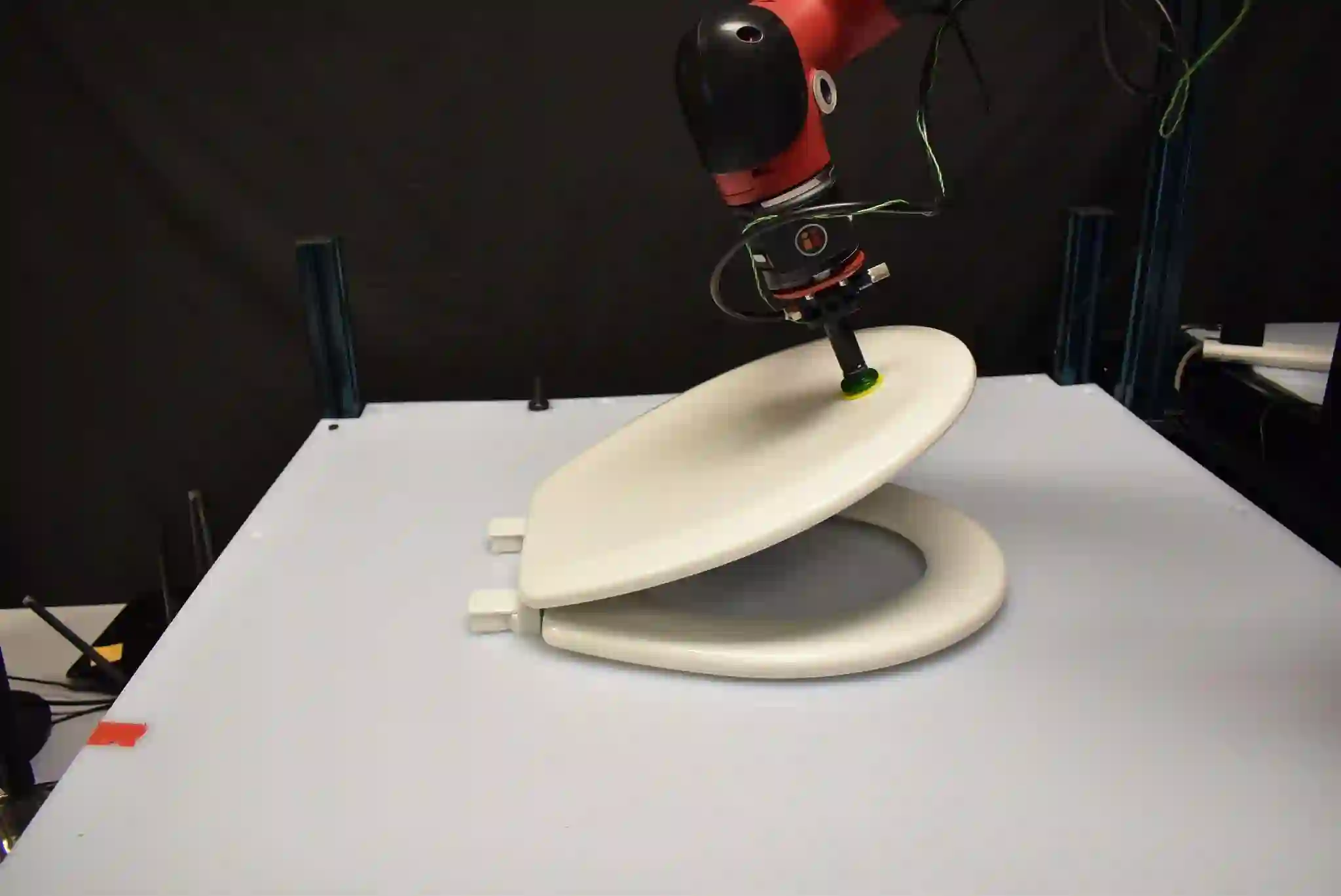

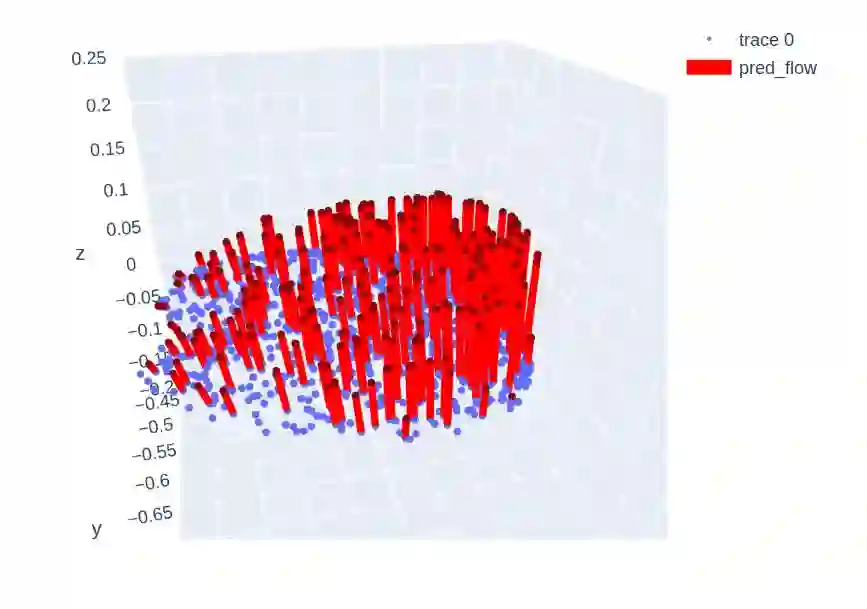

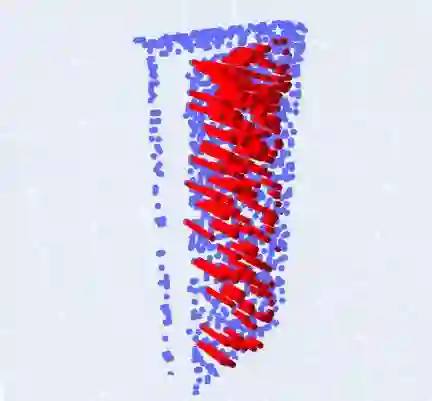

We explore a novel method to perceive and manipulate 3D articulated objects that generalizes to enable a robot to articulate unseen classes of objects. We propose a vision-based system that learns to predict the potential motions of the parts of a variety of articulated objects to guide downstream motion planning of the system to articulate the objects. To predict the object motions, we train a neural network to output a dense vector field representing the point-wise motion direction of the points in the point cloud under articulation. We then deploy an analytical motion planner based on this vector field to achieve a policy that yields maximum articulation. We train the vision system entirely in simulation, and we demonstrate the capability of our system to generalize to unseen object instances and novel categories in both simulation and the real world, deploying our policy on a Sawyer robot with no finetuning. Results show that our system achieves state-of-the-art performance in both simulated and real-world experiments.

翻译:我们探索一种新颖的方法来观察和操控3D显形物体,该方法一般地使机器人能够表达各种不可见的物体类别。我们提议了一个基于视觉的系统,用来预测各种显形物体部分的潜在运动动向,以指导系统下游运动规划以表达物体;为了预测物体运动动向,我们训练一个神经网络,以输出一个稠密的矢量场,代表正在表达的点云点点点的点向运动方向。然后,我们在这个矢量场上部署一个分析运动规划仪,以达成一个能够产生最大表达效果的政策。我们完全在模拟方面对视觉系统进行培训,我们展示了我们的系统在模拟和现实世界中推广看不见物体实例和新类别的能力,在没有微调的索伊机器人上运用了我们的政策。结果显示,我们的系统在模拟和现实世界实验中都取得了最先进的性能。