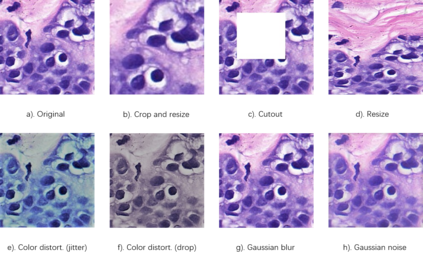

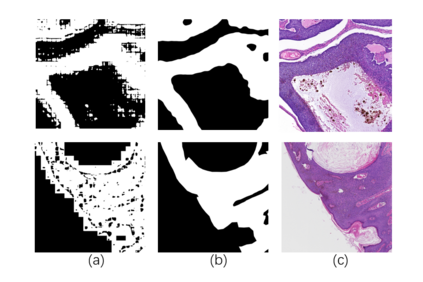

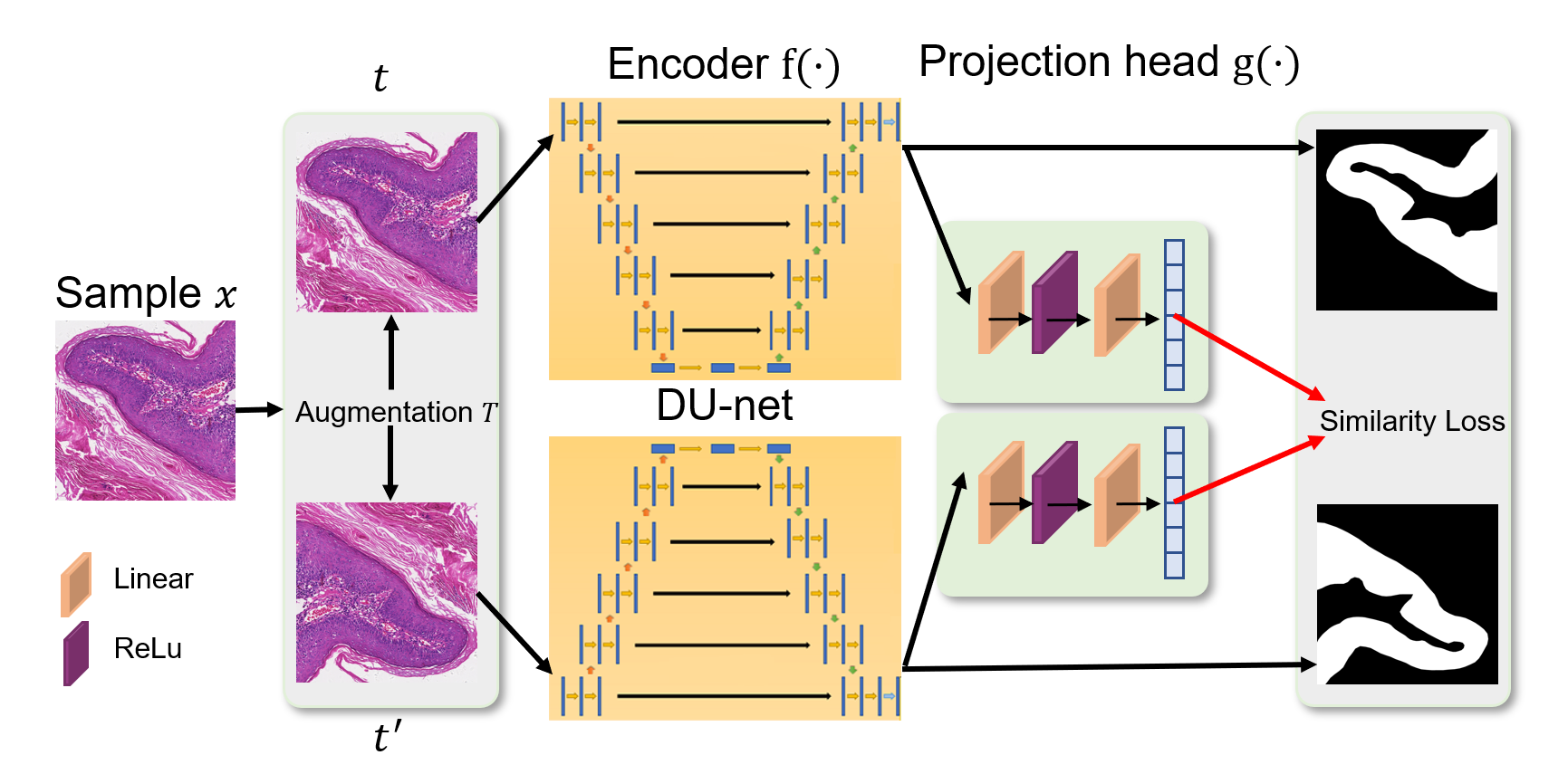

In this paper, we introduce an unsupervised cancer segmentation framework for histology images. The framework uses a contrastive learning scheme to extract distinctive visual representations for segmentation. The encoder is a Deep U-Net (DU-Net) structure that contains an extra fully convolutional layer compared to the standard U-Net. The contrastive learning scheme addresses the lack of training sets with high-quality annotations of tumour boundaries. A specific set of data augmentation techniques is employed to improve the discriminability of the colour features learned through contrastive learning. Smoothing and noise elimination are performed using convolutional Conditional Random Fields. The experiments demonstrate competitive segmentation performance, even better than that of some popular supervised networks.
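For readers unfamiliar with contrastive learning, the following minimal sketch illustrates one common objective, the NT-Xent loss popularised by SimCLR. The abstract does not state which contrastive loss the framework uses, so the loss choice, the function name `nt_xent_loss`, and the `temperature` value are assumptions made purely for illustration. The inputs stand in for encoder embeddings of two augmented views of the same image patches.

```python
import numpy as np

def nt_xent_loss(z_a, z_b, temperature=0.5):
    """NT-Xent contrastive loss (an assumed, SimCLR-style objective).

    z_a[i] and z_b[i] are embeddings of two augmented views of patch i.
    """
    z = np.concatenate([z_a, z_b], axis=0).astype(np.float64)
    z /= np.linalg.norm(z, axis=1, keepdims=True) + 1e-12  # unit-normalise rows
    sim = z @ z.T / temperature                  # scaled cosine similarities
    np.fill_diagonal(sim, -np.inf)               # a view is never its own pair
    n = z_a.shape[0]
    # Row i (from z_a) has its positive at row i + n (from z_b), and vice versa.
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    # Numerically stable log-softmax over each row.
    sim_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - sim_max - np.log(np.exp(sim - sim_max).sum(axis=1, keepdims=True))
    return -log_prob[np.arange(2 * n), pos].mean()

# Hypothetical usage with random stand-in embeddings (8 patches, 16 dimensions).
rng = np.random.default_rng(0)
view_a = rng.normal(size=(8, 16))
view_b = view_a + 0.01 * rng.normal(size=(8, 16))  # nearly identical second view
loss = nt_xent_loss(view_a, view_b)
```

The loss is small when the two views of each patch map to nearby embeddings and other patches map elsewhere, which is the property that lets a representation be learned without boundary annotations.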
