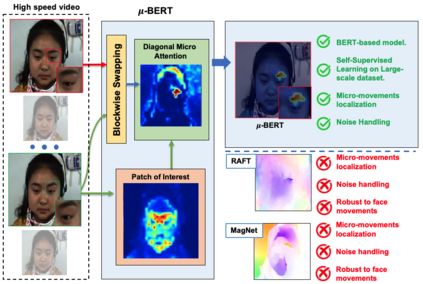

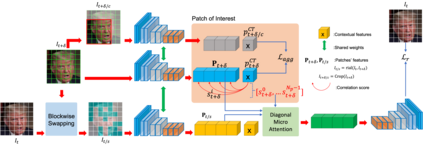

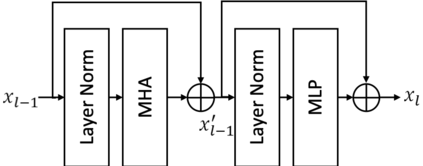

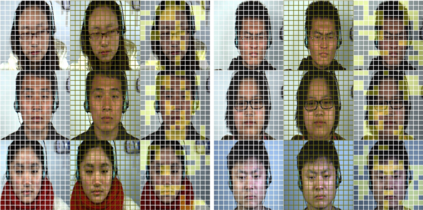

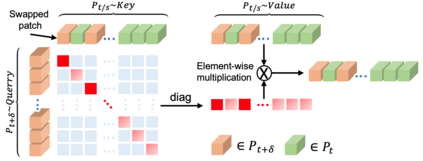

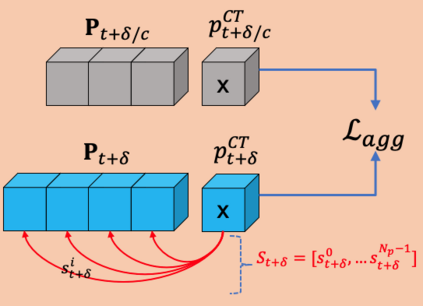

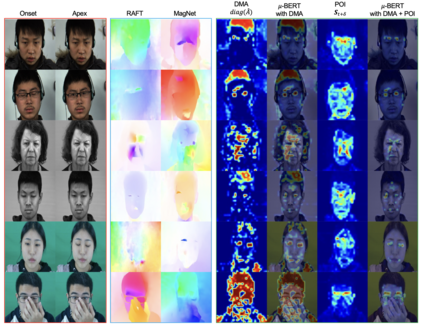

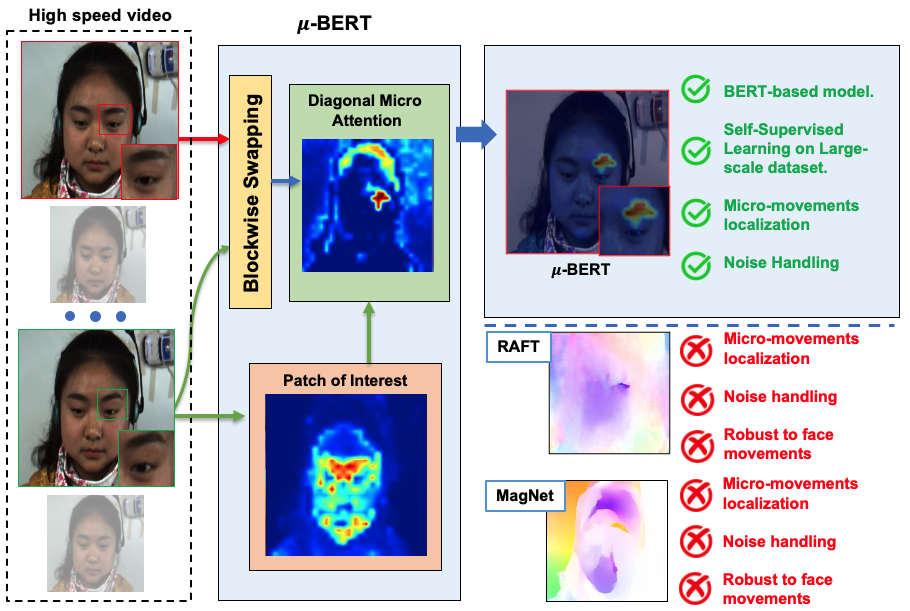

Micro-expression recognition is one of the most challenging topics in affective computing. It aims to recognize tiny facial movements that occur within a brief period, i.e., 0.25 to 0.5 seconds, and are difficult for humans to perceive. Recent advances in pre-training deep Bidirectional Transformers (BERT) have significantly improved self-supervised learning tasks in computer vision. However, the standard BERT in vision problems is designed to learn only from full images or videos, and the architecture cannot accurately detect the details of facial micro-expressions. This paper presents Micron-BERT ($\mu$-BERT), a novel approach to facial micro-expression recognition. The proposed method can automatically capture these movements in an unsupervised manner based on two key ideas. First, we employ Diagonal Micro-Attention (DMA) to detect tiny differences between two frames. Second, we introduce a new Patch of Interest (PoI) module to localize and highlight micro-expression interest regions while reducing noisy backgrounds and distractions. By incorporating these components into an end-to-end deep network, the proposed $\mu$-BERT significantly outperforms all previous work in various micro-expression tasks. $\mu$-BERT can be trained on a large-scale unlabeled dataset, i.e., up to 8 million images, and achieves high accuracy on new, unseen facial micro-expression datasets. Empirical experiments show that $\mu$-BERT consistently outperforms state-of-the-art methods by significant margins on four micro-expression benchmarks: SAMM, CASME II, SMIC, and CASME3. Code will be available at \url{https://github.com/uark-cviu/Micron-BERT}.
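To make the idea of comparing two frames patch by patch more concrete, the following is a minimal illustrative sketch, not the DMA module of $\mu$-BERT, whose details the abstract does not give. It assumes each frame is already split into patches with one feature vector per patch, computes a plain scaled dot-product cross-attention map between the two frames, and reads off its diagonal. A low diagonal weight suggests that a patch no longer matches its counterpart in the other frame. The function names, the toy features, and the threshold of 0.5 are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def diagonal_attention(patches_a, patches_b):
    """Diagonal of the cross-attention map from frame A patches to frame B patches.

    Hypothetical helper: entry i is the attention weight that patch i of
    frame A places on patch i of frame B.
    """
    scale = 1.0 / math.sqrt(len(patches_a[0]))
    diagonal = []
    for i, query in enumerate(patches_a):
        scores = [scale * sum(q * k for q, k in zip(query, key))
                  for key in patches_b]
        diagonal.append(softmax(scores)[i])
    return diagonal


def changed_patches(diagonal, threshold=0.5):
    """Indices of patches whose diagonal weight falls below an assumed threshold."""
    return [i for i, weight in enumerate(diagonal) if weight < threshold]


# Toy patch features (assumed): patch 2 differs between the two frames.
frame_a = [[4.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 4.0]]
frame_b = [[4.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 0.0]]

diag = diagonal_attention(frame_a, frame_b)
print(changed_patches(diag))
```

In this toy setup the unchanged patches attend almost entirely to their own counterparts, while the altered patch spreads its attention uniformly, so only its index is flagged. A real model would learn the patch features and would not rely on a fixed threshold.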
