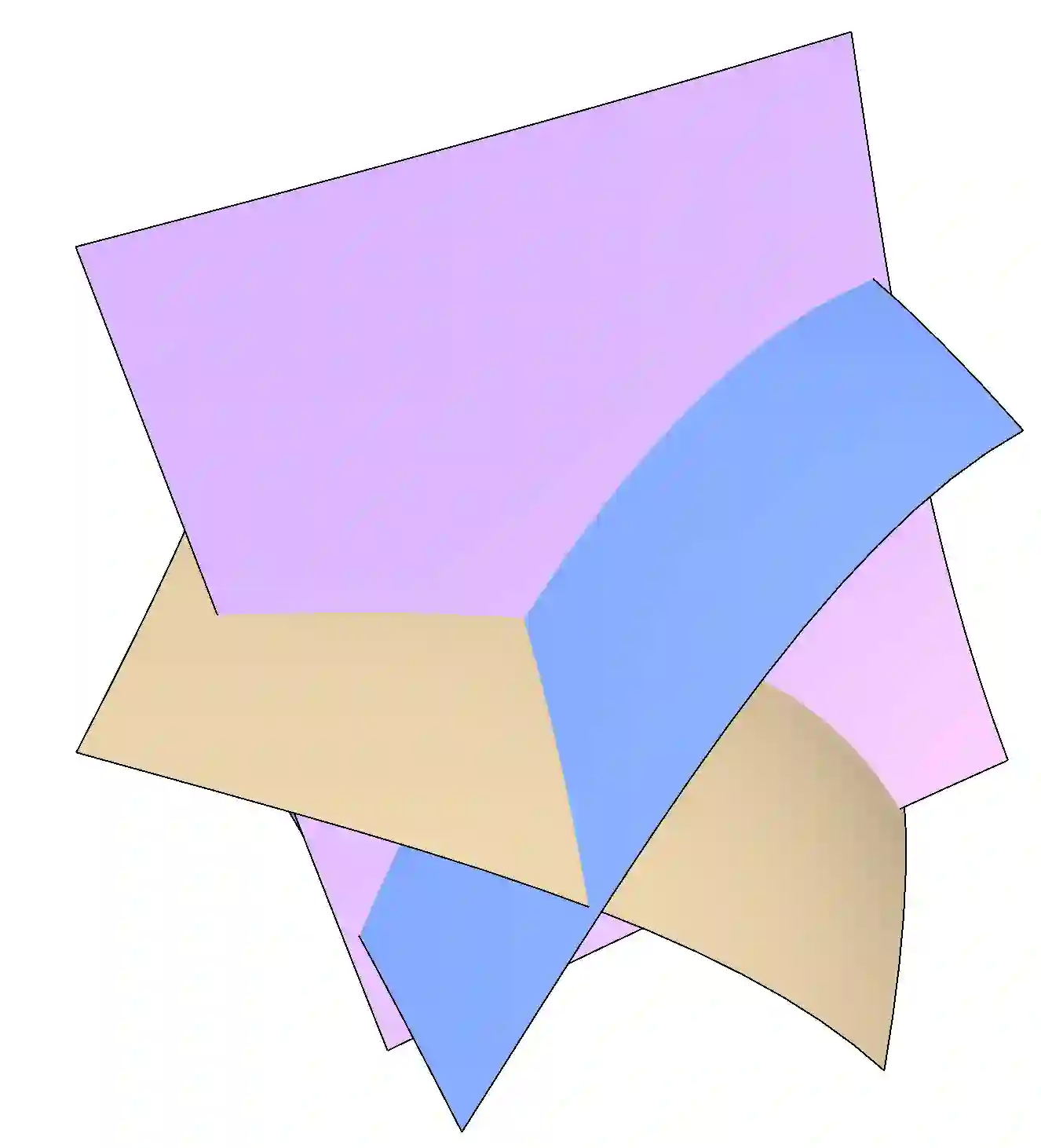

To understand the explainable factors underlying observations and to model the conditional generation process on those factors, we propose a new task, disentanglement of diffusion probabilistic models (DPMs), which takes advantage of the strong modeling ability of DPMs. To tackle this task, we devise an unsupervised approach named DisDiff. This is the first time disentangled representation learning has been achieved within the framework of diffusion probabilistic models. Given a pre-trained DPM, DisDiff automatically discovers the inherent factors behind the image data and disentangles the gradient field of the DPM into sub-gradient fields, each conditioned on the representation of one discovered factor. We also propose a novel Disentangling Loss for DisDiff that facilitates the disentanglement of both the representations and the sub-gradients. Extensive experiments on synthetic and real-world datasets demonstrate the effectiveness of DisDiff.
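One way to read "disentangling the gradient field into sub-gradient fields" is through Bayes' rule: if the factor representations are conditionally independent given the sample, the conditional score equals the unconditional score plus one term per factor. The sketch below checks this identity on a toy 1-D Gaussian. It is an illustration under stated assumptions, not DisDiff's implementation: the Gaussian setup, the function names, and the values in `factors` are all hypothetical.

```python
# Toy check of: d/dx log p(x | z_1..z_N) = d/dx log p(x) + sum_c d/dx log p(z_c | x),
# which holds when the z_c are conditionally independent given x.
# Assumed toy model (not from the paper): x ~ N(0, 1), z_c | x ~ N(x, sigma_c^2).

def prior_score(x):
    """Score of the unconditional toy model: d/dx log N(x; 0, 1) = -x."""
    return -x

def sub_gradient(x, z, sigma):
    """Per-factor term: d/dx log N(z; x, sigma^2) = (z - x) / sigma^2."""
    return (z - x) / sigma**2

def conditional_score(x, factors):
    """Compose the unconditional score with one sub-gradient per factor."""
    return prior_score(x) + sum(sub_gradient(x, z, s) for z, s in factors)

def exact_posterior_score(x, factors):
    """Closed-form score of the Gaussian posterior p(x | z_1..z_N)."""
    precision = 1.0 + sum(1.0 / s**2 for _, s in factors)
    mean = sum(z / s**2 for z, s in factors) / precision
    return -(x - mean) * precision

# Hypothetical factor codes as (z_c, sigma_c) pairs.
factors = [(0.8, 0.5), (-1.2, 2.0)]
x = 0.3
composed = conditional_score(x, factors)
exact = exact_posterior_score(x, factors)
assert abs(composed - exact) < 1e-9
```

In a learned setting, `prior_score` would correspond to the pre-trained DPM and each `sub_gradient` to a network conditioned on one factor's representation; the closed forms here exist only because the toy model is Gaussian.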
