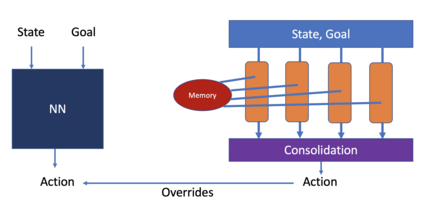

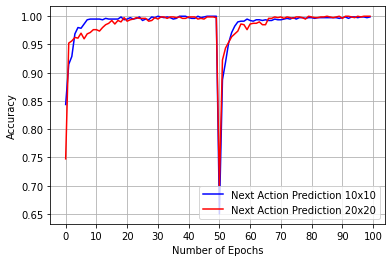

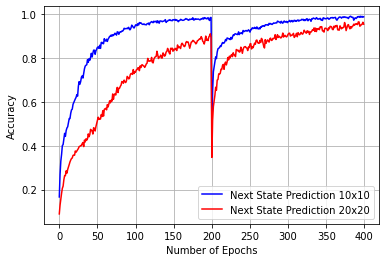

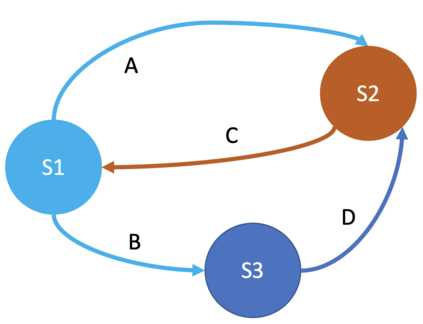

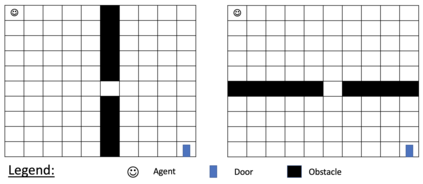

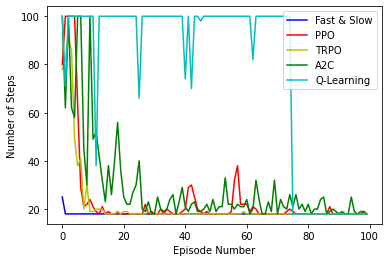

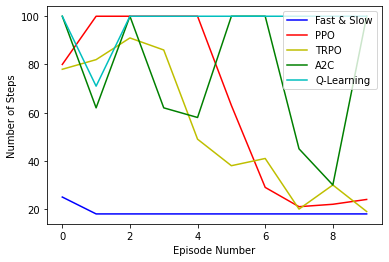

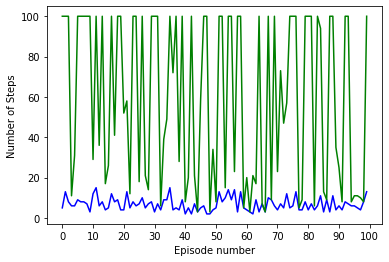

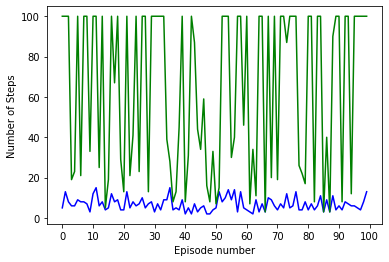

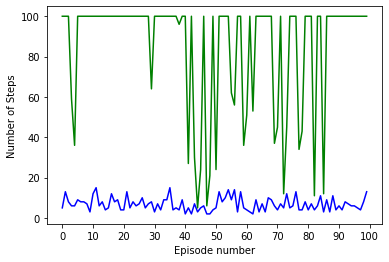

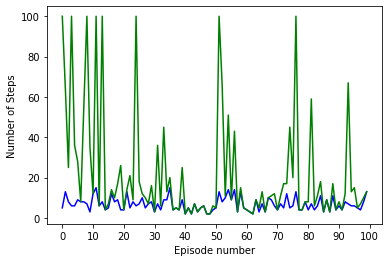

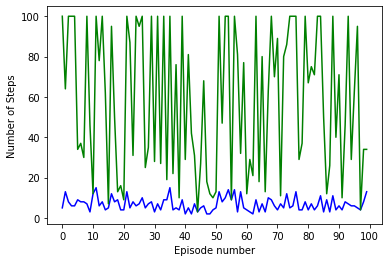

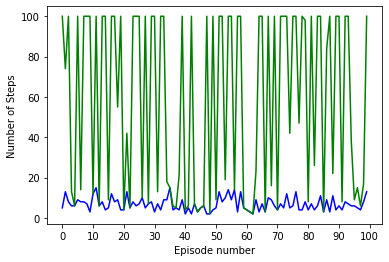

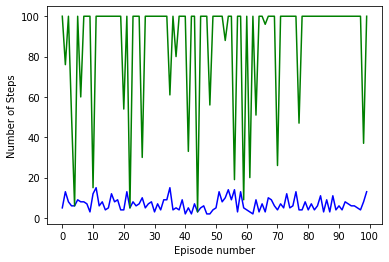

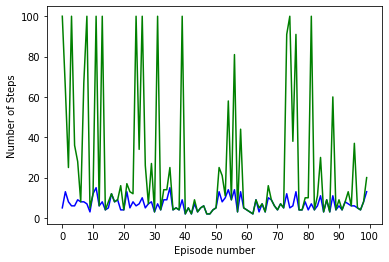

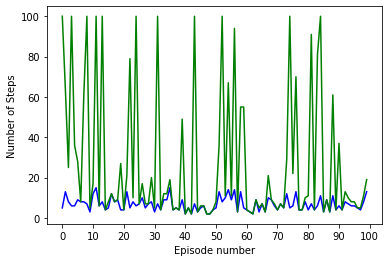

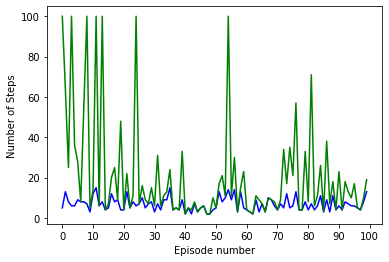

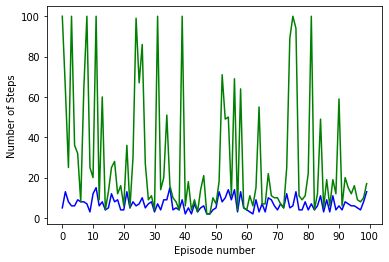

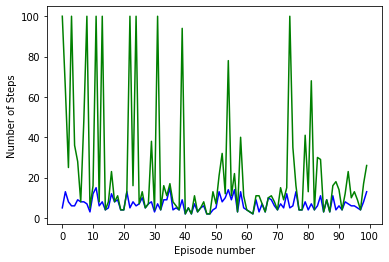

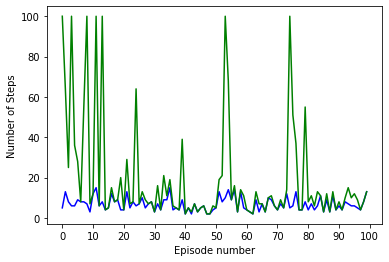

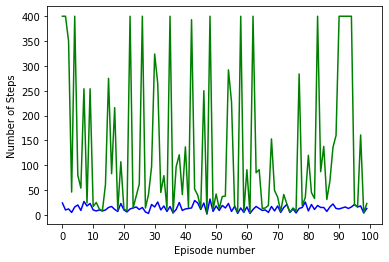

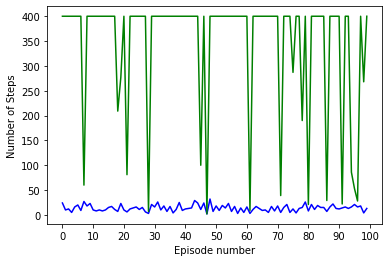

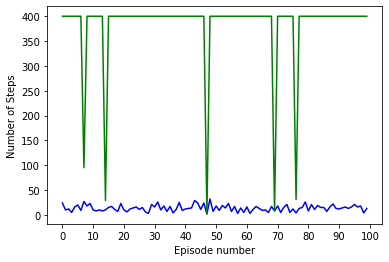

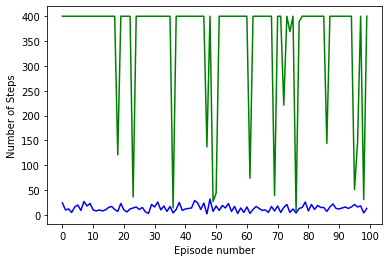

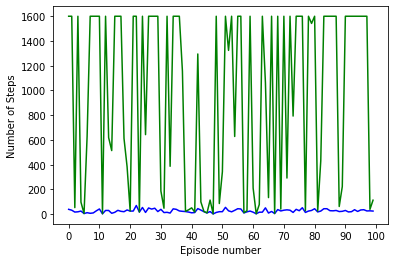

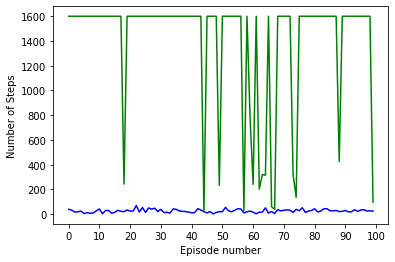

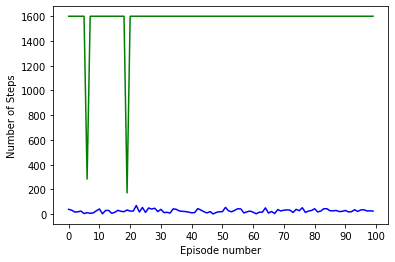

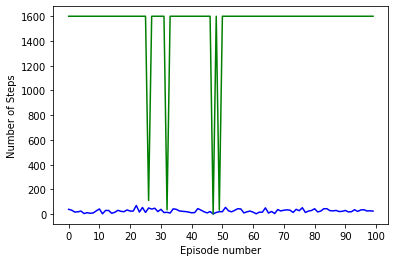

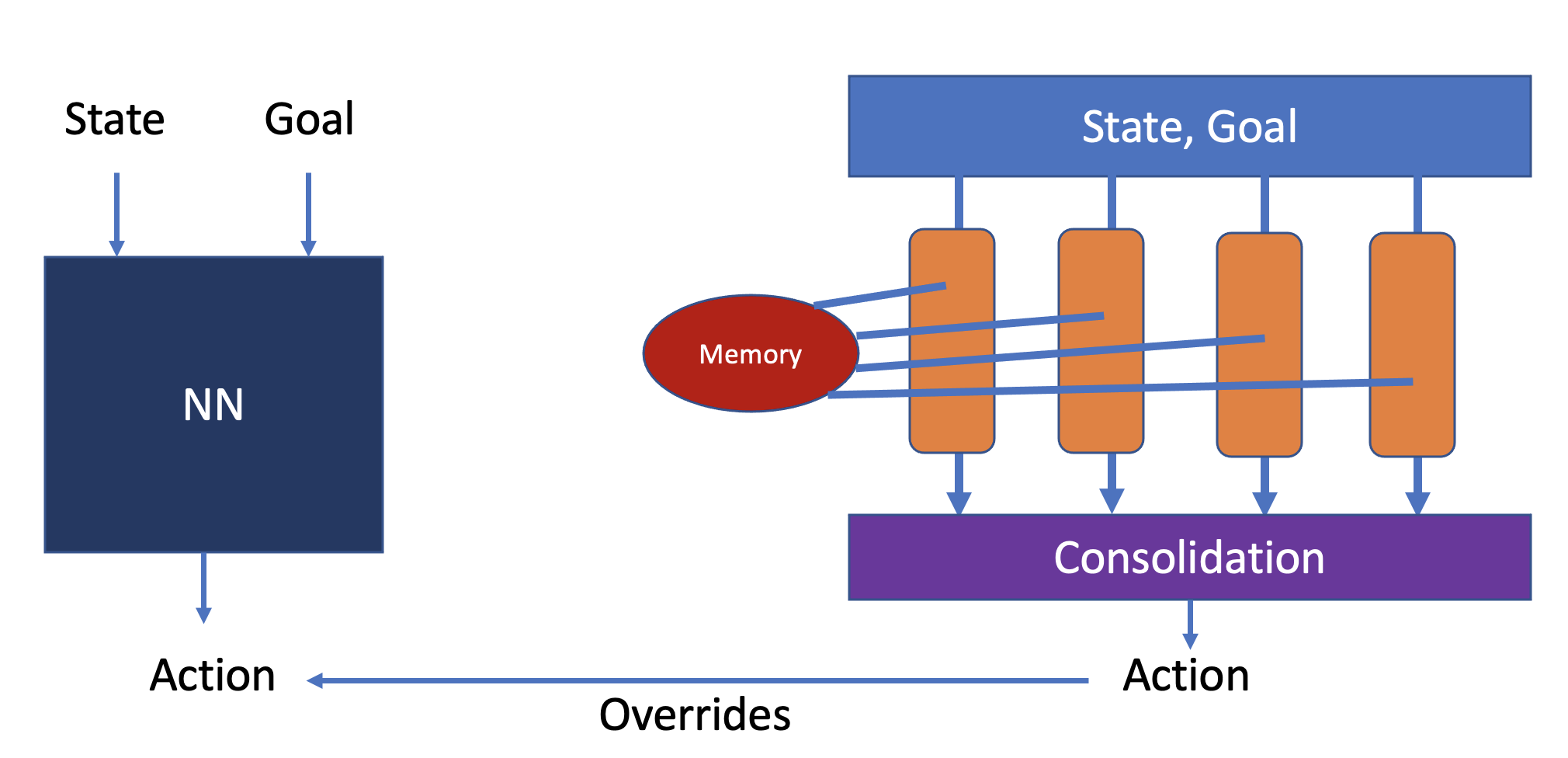

Model-based next state prediction and state value prediction are slow to converge. To address these challenges, we do the following: i) Instead of a neural network, we do model-based planning using a parallel memory retrieval system (which we term the slow mechanism); ii) Instead of learning state values, we guide the agent's actions using goal-directed exploration, by using a neural network to choose the next action given the current state and the goal state (which we term the fast mechanism). The goal-directed exploration is trained online using hippocampal replay of visited states and future imagined states every single time step, leading to fast and efficient training. Empirical studies show that our proposed method has a 92% solve rate across 100 episodes in a dynamically changing grid world, significantly outperforming state-of-the-art actor critic mechanisms such as PPO (54%), TRPO (50%) and A2C (24%). Ablation studies demonstrate that both mechanisms are crucial. We posit that the future of Reinforcement Learning (RL) will be to model goals and sub-goals for various tasks, and plan it out in a goal-directed memory-based approach.

翻译:为了应对这些挑战,我们做了以下工作:(一) 不像神经网络那样,我们使用平行的记忆检索系统(我们称之为缓慢机制)来进行基于模型的规划;(二) 我们不学习国家价值观,而是利用一个神经网络来指导代理人的行动,根据当前状态和目标状态选择下一个行动(我们称之为快速机制 ) 。目标导向的探索是利用所访问国家和未来想象国家的时空重播进行在线培训,最终进行快速有效的培训。经验性研究表明,在动态变化的电网世界中,我们拟议的方法在100个阶段中达到92%的解决率,大大超过最先进的行为者批评机制,如PPO(54%)、TRPO(50%)和A2C(24% ) 。 调整研究表明,这两个机制都至关重要。我们假设,“加强学习”(RL)的未来将是各种任务的模型目标和子目标,并将它规划成一个目标导向的记忆方法。