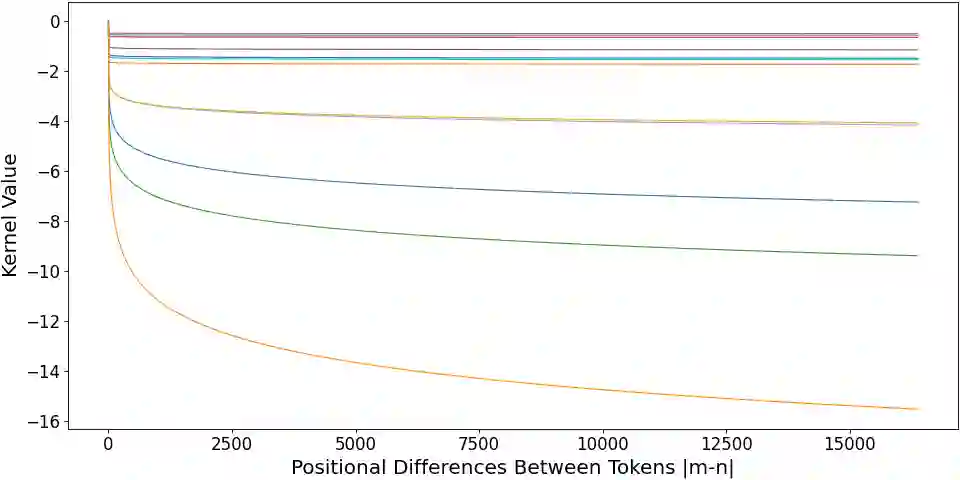

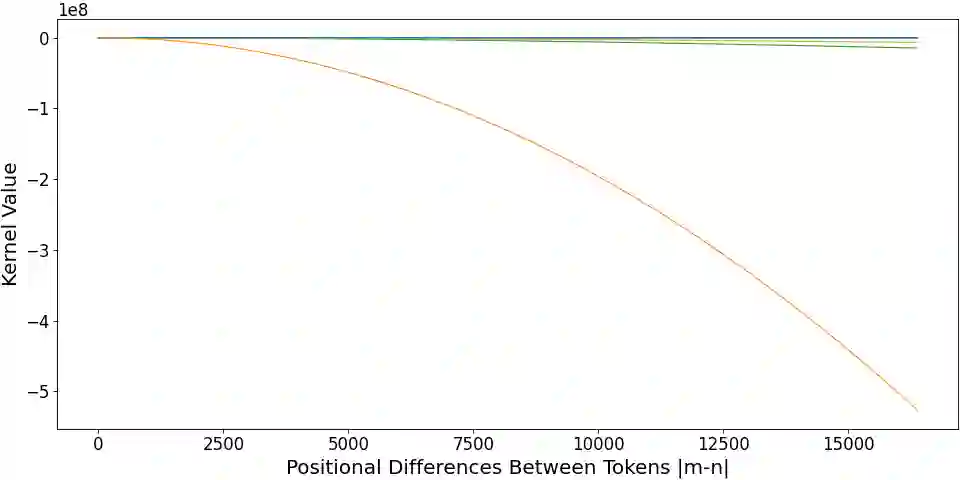

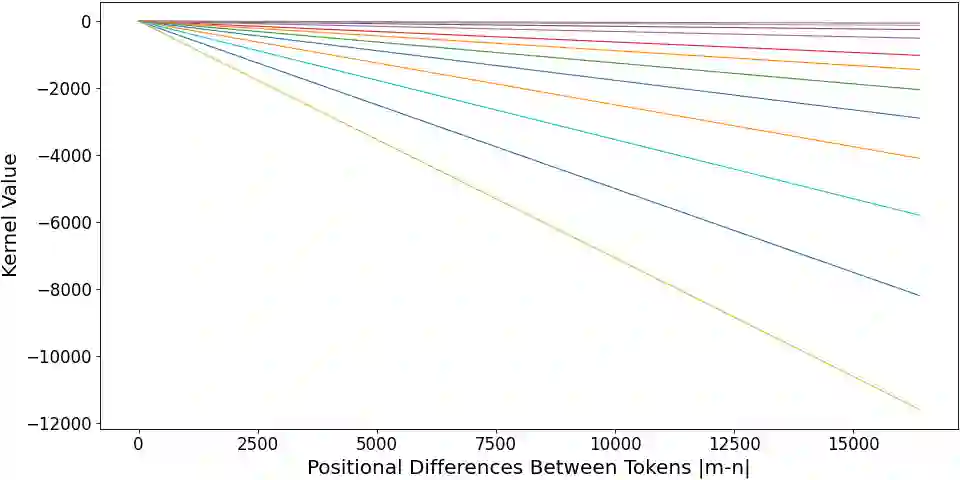

Relative positional embeddings (RPEs) have received considerable attention because they effectively model the relative distance among tokens and enable length extrapolation. We propose KERPLE, a framework that generalizes relative position embedding for extrapolation by kernelizing positional differences. We achieve this goal using conditionally positive definite (CPD) kernels, a class of functions known for generalizing distance metrics. To maintain the inner product interpretation of self-attention, we show that a CPD kernel can be transformed into a positive definite (PD) kernel by adding a constant offset. This offset is implicitly absorbed in the Softmax normalization during self-attention. The diversity of CPD kernels allows us to derive various RPEs that enable length extrapolation in a principled way. Experiments demonstrate that the logarithmic variant achieves excellent extrapolation performance on three large language modeling datasets. Our implementation and pretrained checkpoints are released at https://github.com/chijames/KERPLE.git.
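
The claim that the constant offset is absorbed by the Softmax can be checked numerically. The sketch below is illustrative only: the abstract does not give the kernel formula, so the logarithmic bias `-r1 * log(1 + r2 * |m - n|)` and the parameter names `r1`, `r2` are assumptions made for this example, and the helper functions are hypothetical rather than taken from the released code. It adds the bias to the attention logits of one causal query and shows that shifting every logit by the same constant leaves the attention weights unchanged.

```python
import math


def log_bias(m, n, r1=1.0, r2=1.0):
    """Assumed logarithmic bias on the positional difference |m - n|.

    r1 and r2 are hypothetical positive parameters (assumption, not from the abstract).
    """
    return -r1 * math.log(1.0 + r2 * abs(m - n))


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    mx = max(xs)
    exps = [math.exp(x - mx) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(scores, query_pos, offset=0.0, r1=1.0, r2=1.0):
    """Causal attention weights for one query position.

    scores[n] is the content logit between the query and key n. The
    positional bias plus a constant offset is added before the softmax.
    """
    logits = [
        s + log_bias(query_pos, n, r1, r2) + offset
        for n, s in enumerate(scores[: query_pos + 1])
    ]
    return softmax(logits)


scores = [0.3, -1.2, 0.8, 0.1]  # made-up content logits
base = attention_weights(scores, query_pos=3)
shifted = attention_weights(scores, query_pos=3, offset=5.0)

# The two weight vectors agree up to floating-point rounding.
print(max(abs(a - b) for a, b in zip(base, shifted)))
```

Because the offset cancels in the normalization, it never has to be computed explicitly; only the distance-dependent part of the bias affects the attention weights.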
