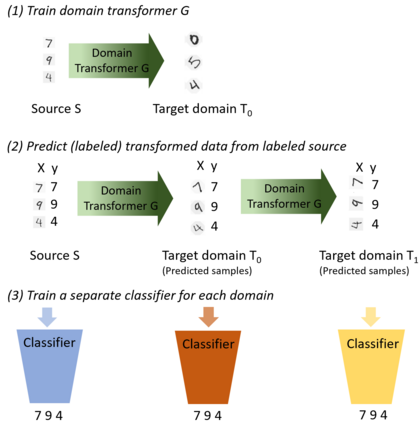

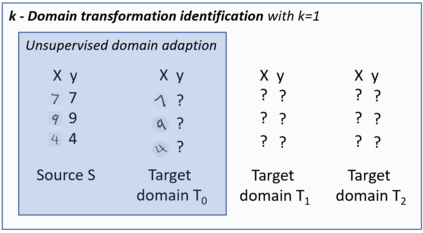

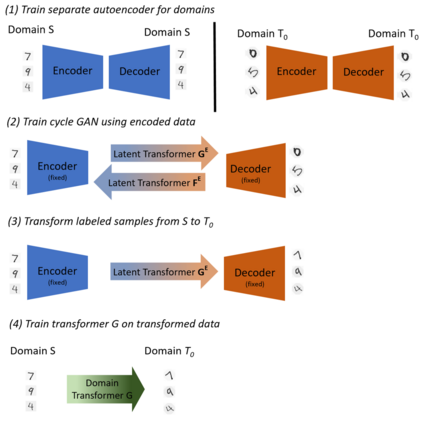

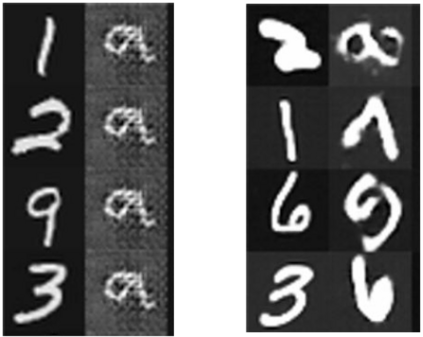

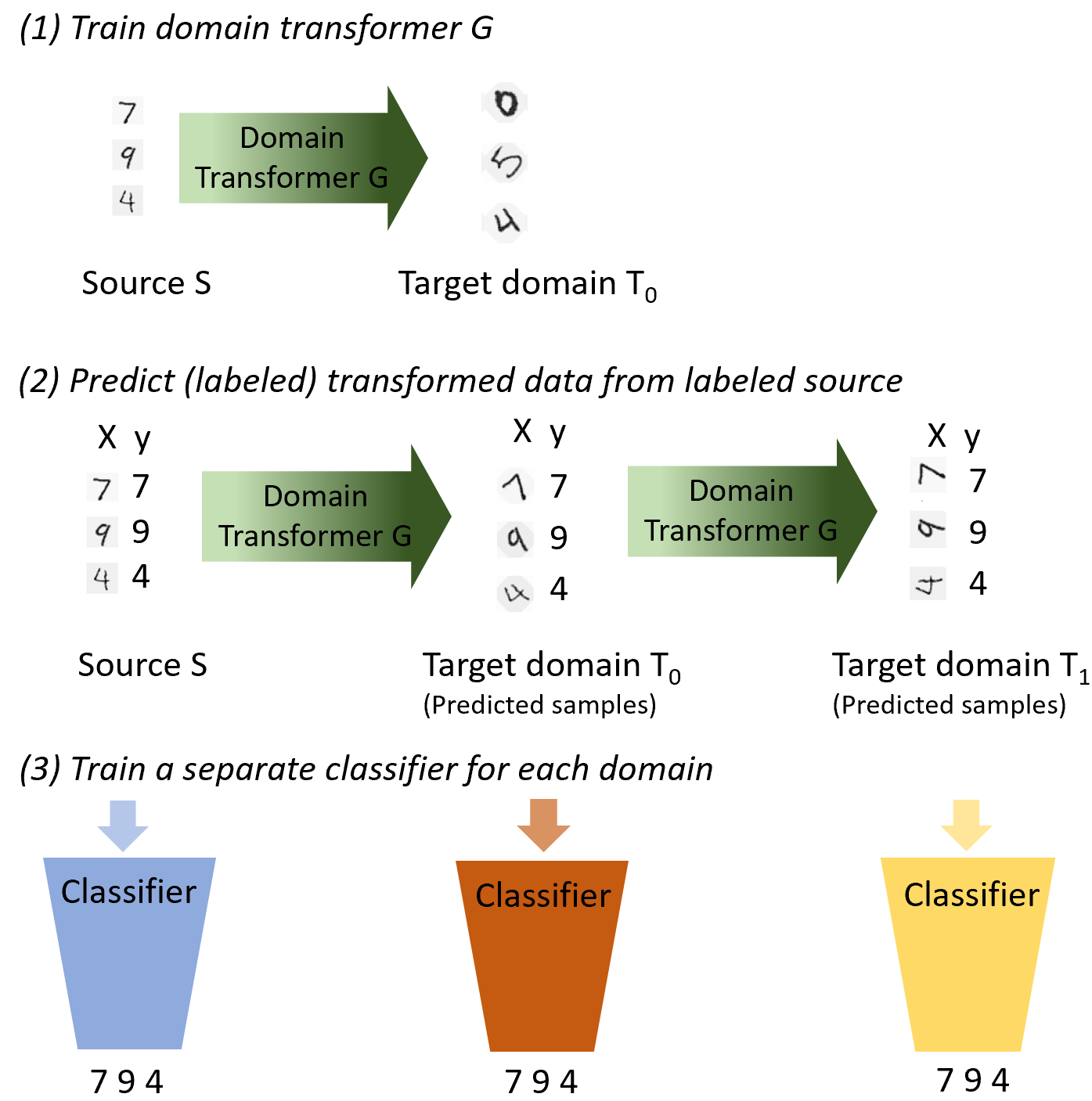

The data distribution commonly evolves over time, leading to problems such as concept drift that often degrade classifier performance. Current techniques are not adequate for this problem: they either require detailed knowledge of the transformation, or they are not suited to anticipating unseen domains and can only adapt to domains for which data samples are available. We seek to predict unseen data (and their labels), which allows us to tackle challenges such as a non-constant data distribution proactively, rather than detecting and reacting to changes that already exist and may already have led to errors. To this end, we learn a domain transformer in an unsupervised manner that allows generating data of unseen domains. Our approach first uses a Cycle-GAN to match the independently learned auto-encoder latent representations of two given domains. In turn, a transformation of the original samples can be learned that can be applied iteratively to extrapolate to unseen domains. Our evaluation of CNNs on image data confirms the usefulness of the approach. It also achieves very good results on the well-known problem of unsupervised domain adaptation, where only labels, but no samples, have to be predicted. Code is available at https://github.com/JohnTailor/DoTra.
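The extrapolation idea (learn a map from one observed domain to the next, then apply it repeatedly) can be illustrated with a deliberately tiny, hypothetical sketch. It is not the paper's method. It assumes that samples are 2-D points and that a domain shift is a rotation. In place of an auto-encoder plus Cycle-GAN trained on unpaired data, it fits a linear map by least squares on *paired* samples of two observed domains. The helper names `rotate`, `fit_linear_map`, `apply_map`, and `extrapolate` are illustrative only. For the authors' implementation, see https://github.com/JohnTailor/DoTra.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]
Matrix = Tuple[Tuple[float, float], Tuple[float, float]]


def rotate(points: List[Point], angle: float) -> List[Point]:
    """Toy domain shift (an assumption): rotate every sample by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y) for x, y in points]


def fit_linear_map(src: List[Point], dst: List[Point]) -> Matrix:
    """Least-squares 2x2 map A minimising sum ||A p - q||^2 over paired samples.

    Simplification: stands in for the unsupervised Cycle-GAN matching.
    Closed form: A = (sum q p^T) (sum p p^T)^-1.
    """
    sxx = sum(x * x for x, _ in src)
    sxy = sum(x * y for x, y in src)
    syy = sum(y * y for _, y in src)
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("source samples do not span 2-D space")
    # Inverse of the symmetric matrix [[sxx, sxy], [sxy, syy]].
    inv = ((syy / det, -sxy / det), (-sxy / det, sxx / det))
    # Cross-covariance terms sum q p^T.
    c = [[0.0, 0.0], [0.0, 0.0]]
    for (x, y), (u, v) in zip(src, dst):
        c[0][0] += u * x
        c[0][1] += u * y
        c[1][0] += v * x
        c[1][1] += v * y
    return tuple(
        tuple(c[i][0] * inv[0][j] + c[i][1] * inv[1][j] for j in range(2))
        for i in range(2)
    )


def apply_map(a: Matrix, points: List[Point]) -> List[Point]:
    """Apply the 2x2 map to every sample."""
    return [(a[0][0] * x + a[0][1] * y, a[1][0] * x + a[1][1] * y) for x, y in points]


def extrapolate(a: Matrix, latest: List[Point], steps: int) -> List[List[Point]]:
    """Apply the learned domain-to-domain map repeatedly to synthesise unseen domains."""
    domains = []
    current = latest
    for _ in range(steps):
        current = apply_map(a, current)
        domains.append(current)
    return domains


if __name__ == "__main__":
    random.seed(0)
    theta = math.pi / 12
    d0 = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(50)]
    labels = [int(x > 0) for x, _ in d0]  # labels of the observed source domain
    d1 = rotate(d0, theta)  # second observed domain
    a = fit_linear_map(d0, d1)
    d2, d3 = extrapolate(a, d1, steps=2)  # two unseen domains
    # Generated samples stay index-aligned with d0, so they inherit its labels.
    unseen_with_labels = list(zip(d3, labels))
    ref = rotate(d0, 3 * theta)
    err = max(abs(p - q) for g, r in zip(d3, ref) for p, q in zip(g, r))
    print(f"{len(unseen_with_labels)} labelled samples, max error {err:.2e}")
```

Because each generated sample is obtained by transforming a specific source sample, it can reuse that sample's label, which mirrors the claim that both unseen data and their labels can be predicted. In this toy setting the fitted map equals the true rotation, so the extrapolated domains are exact. Real image domains would need the learned, nonlinear transformer described above.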