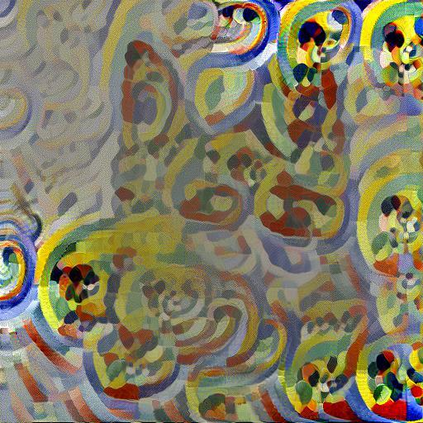

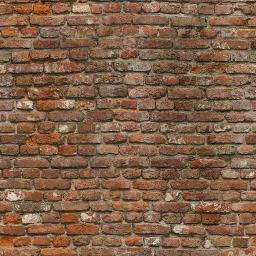

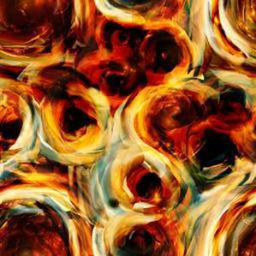

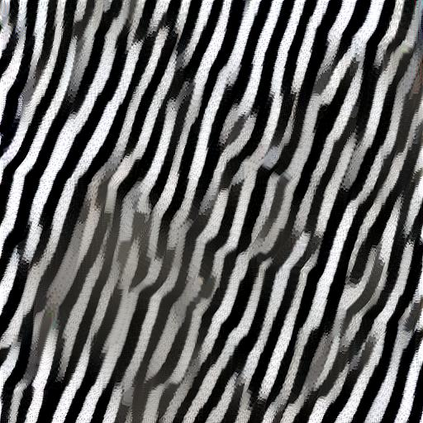

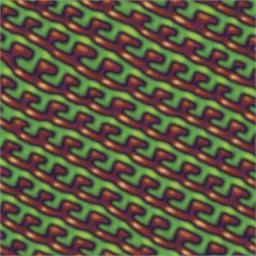

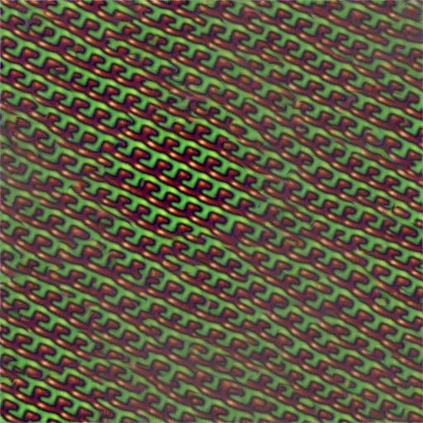

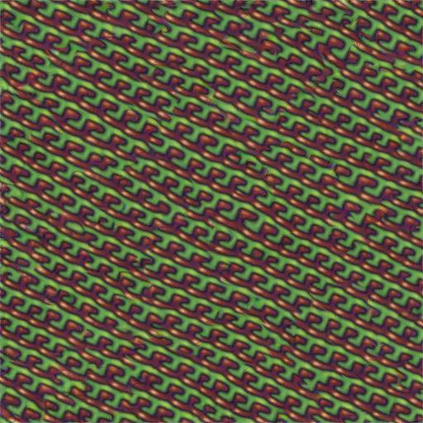

We address the problem of computing a textural loss from the statistics of the feature activations of a convolutional neural network optimized for object recognition (e.g. VGG-19). The underlying mathematical problem is measuring the distance between two distributions in feature space. The Gram-matrix loss is the ubiquitous approximation for this problem, but it suffers from several shortcomings. Our goal is to promote the Sliced Wasserstein Distance as a replacement for it. It is theoretically proven, practical, and simple to implement, and it achieves visually superior results for texture synthesis, whether by optimization or by training generative neural networks.
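The sketch below illustrates the difference between the two losses. It is not the authors' implementation. It assumes the activations of one layer have been flattened to an `(N, C)` array (N spatial positions, C channels), and that both inputs have the same N. The function names `gram_loss` and `sliced_wasserstein_loss`, the Gram normalization, and the default of 64 random projections are illustrative choices. The Gram loss compares only second moments. The sliced Wasserstein estimate projects the features onto random unit directions, sorts each 1D projection (sorting gives the optimal 1D transport plan), and averages the squared differences. This makes it sensitive to the full shape of the distribution.

```python
import numpy as np


def gram_loss(feat_a, feat_b):
    """Gram-matrix loss between two (N, C) feature arrays.

    The 1/N normalization is one common convention (an assumption here).
    """
    g_a = feat_a.T @ feat_a / feat_a.shape[0]  # (C, C) second moments
    g_b = feat_b.T @ feat_b / feat_b.shape[0]
    return float(np.mean((g_a - g_b) ** 2))


def sliced_wasserstein_loss(feat_a, feat_b, num_projections=64, rng=None):
    """Monte Carlo estimate of the squared sliced Wasserstein-2 distance
    between the empirical distributions of the rows of feat_a and feat_b.

    Assumes both arrays have the same shape (N, C), e.g. feature maps of
    equal spatial size flattened over positions.
    """
    rng = np.random.default_rng(rng)
    n, c = feat_a.shape
    if feat_b.shape != (n, c):
        raise ValueError("feature arrays must have the same shape")

    # Random directions, normalized to lie on the unit sphere in R^C.
    dirs = rng.standard_normal((c, num_projections))
    dirs /= np.linalg.norm(dirs, axis=0, keepdims=True)

    # Project every feature vector onto every direction: shape (N, P).
    proj_a = feat_a @ dirs
    proj_b = feat_b @ dirs

    # In 1D, optimal transport pairs the sorted values of both sides.
    proj_a.sort(axis=0)
    proj_b.sort(axis=0)
    return float(np.mean((proj_a - proj_b) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Two 1-channel "feature maps" with identical second moments:
    # Gaussian values vs. values that are exactly +1 or -1.
    gauss = rng.standard_normal((4000, 1))
    gauss /= np.sqrt(np.mean(gauss ** 2))
    signs = np.where(rng.random((4000, 1)) < 0.5, -1.0, 1.0)
    print("Gram loss:", gram_loss(gauss, signs))  # ~0: same second moment
    print("SW loss:  ", sliced_wasserstein_loss(gauss, signs, rng=1))
```

In the toy usage, the two distributions share their second moment, so the Gram loss is essentially zero. The sliced Wasserstein estimate still separates them. In a full texture loss one would presumably sum such terms over several VGG-19 layers and re-draw the directions at each optimization step. Both points are assumptions of this sketch.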

翻译:我们根据从为物体识别而优化的进化神经网络(如VGG-19)功能激活中提取的统计数据中得出的统计数据来计算质体损失的问题。基本的数学问题是测量地物空间两个分布之间的距离。格拉姆矩阵损失是这一问题的无处不在的近似值,但存在若干缺点。我们的目标是推广Sliced Wasserstein距离,以此替代它。在理论上,它证明是实用的,易于执行,并且通过优化或培训基因神经网络取得对质质合成具有视觉优越性的结果。