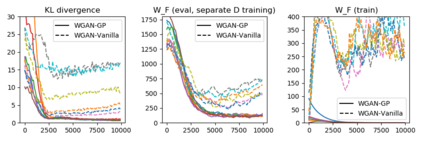

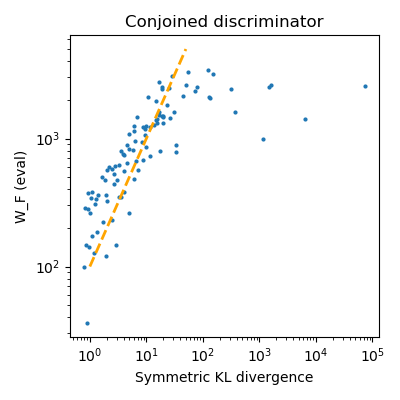

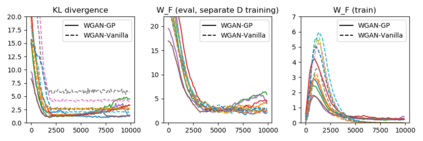

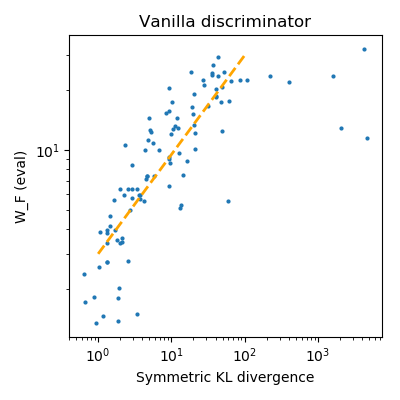

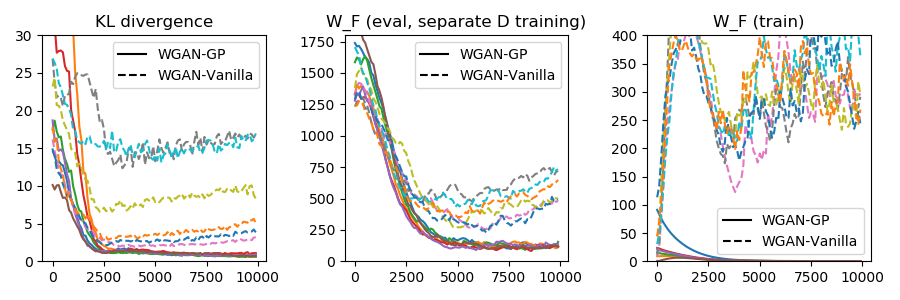

While Generative Adversarial Networks (GANs) have empirically produced impressive results on learning complex real-world distributions, recent work has shown that they suffer from a lack of diversity, or mode collapse. The theoretical work of Arora et al.~\cite{AroraGeLiMaZh17} suggests a dilemma about GANs' statistical properties: powerful discriminators cause overfitting, whereas weak discriminators cannot detect mode collapse. In contrast, we show in this paper that GANs can in principle learn distributions in Wasserstein distance (or KL divergence in many cases) with polynomial sample complexity, provided the discriminator class has strong distinguishing power against the particular generator class (rather than against all possible generators). For various generator classes, such as mixtures of Gaussians, exponential families, and invertible neural network generators, we design corresponding discriminators (often neural nets of specific architectures) such that the Integral Probability Metric (IPM) induced by the discriminators provably approximates the Wasserstein distance and/or KL divergence. This implies that if training is successful, the learned distribution is close to the true distribution in Wasserstein distance or KL divergence, and thus cannot drop modes. Our preliminary experiments show that on synthetic datasets the test IPM is well correlated with KL divergence, indicating that the lack of diversity may be caused by sub-optimality in optimization rather than by statistical inefficiency.
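The "weak discriminator" half of the dilemma can be illustrated with a small sketch. It is not taken from the paper: the one-dimensional setting, the linear discriminator class $\{x \mapsto vx : |v| \le 1\}$, and the names `linear_ipm` and `wasserstein1_1d` are all assumptions chosen for illustration. For that class the IPM $\sup_f |\mathbb{E}_p f - \mathbb{E}_q f|$ reduces to the absolute difference of means, so it cannot tell a two-mode mixture from a single Gaussian with the same mean, whereas the empirical Wasserstein-1 distance can.

```python
import random


def linear_ipm(xs, ys):
    """Empirical IPM for the (assumed) class {x -> v*x : |v| <= 1}.

    The supremum over v is attained at v = +1 or v = -1, so the IPM
    equals the absolute difference of the two sample means.
    """
    return abs(sum(xs) / len(xs) - sum(ys) / len(ys))


def wasserstein1_1d(xs, ys):
    """Empirical Wasserstein-1 distance in 1-D for equal-size samples.

    In one dimension the optimal coupling matches order statistics,
    so W1 is the mean absolute gap between the sorted samples.
    """
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


rng = random.Random(0)  # fixed seed so the run is reproducible
n = 5000

# Illustrative "true" distribution: two well-separated modes at -3 and +3.
real = [rng.gauss(rng.choice([-3.0, 3.0]), 0.5) for _ in range(n)]

# Illustrative generator that misses both modes but matches the mean.
fake = [rng.gauss(0.0, 0.5) for _ in range(n)]

print("linear IPM:", linear_ipm(real, fake))
print("Wasserstein-1:", wasserstein1_1d(real, fake))
```

Under these assumptions the linear IPM stays near zero while the Wasserstein-1 distance is large. This is the kind of gap that a discriminator class tailored to the generator class is meant to close.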
