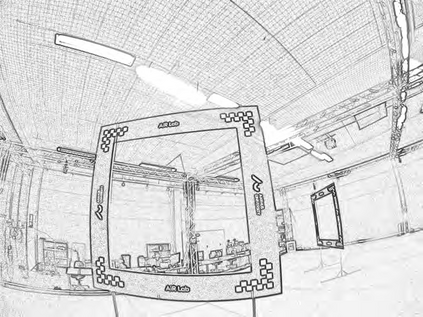

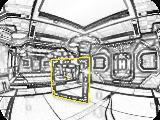

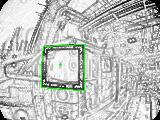

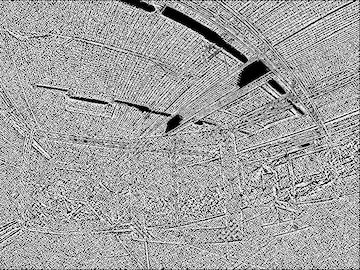

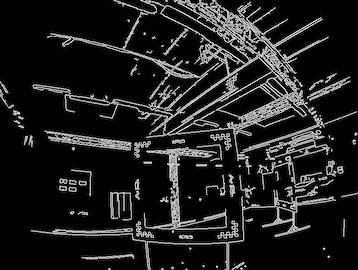

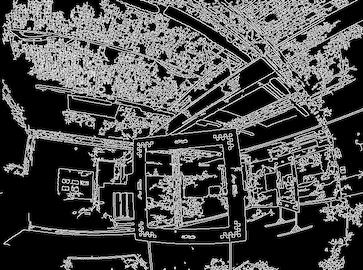

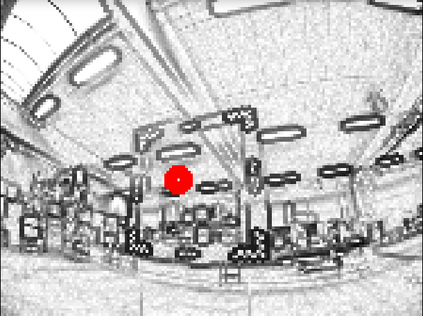

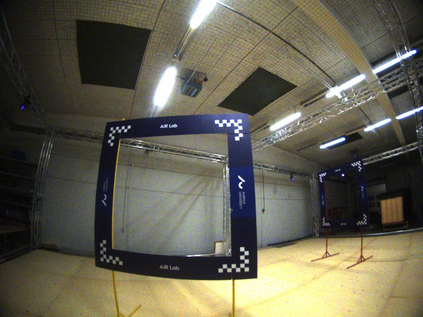

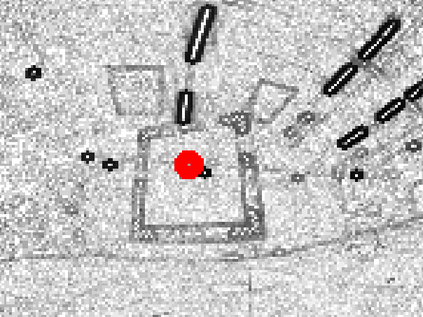

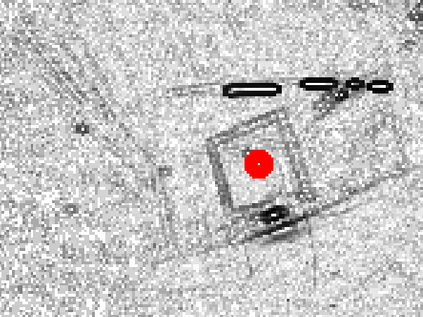

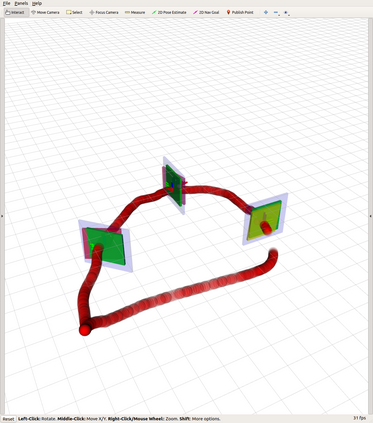

In autonomous and mobile robotics, one of the main challenges is robust on-the-fly perception of the environment, which is often unknown and dynamic, as in autonomous drone racing. In this work, we propose PencilNet, a novel deep neural network-based perception method for racing gate detection that relies on a lightweight neural network backbone on top of a pencil filter. This approach unifies predictions of the gates' 2D position, distance, and orientation in a single pose tuple. We show that our method is effective for zero-shot sim-to-real transfer learning, requiring no real-world training samples. Moreover, our framework is more robust than state-of-the-art methods to the illumination changes commonly seen during rapid flight. A thorough set of experiments demonstrates the effectiveness of this approach in multiple challenging scenarios, where the drone completes various tracks under different lighting conditions.
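The abstract does not define the pencil filter, so the following is only a minimal sketch of one common pencil-sketch construction: dilate a grayscale image, subtract the original, and invert. It is an assumption for illustration, not necessarily the authors' implementation. The function name `pencil_filter` and the `radius` parameter are hypothetical. The sketch may help show why an edge-based preprocessing step can reduce sensitivity to lighting: adding a constant brightness offset to every pixel leaves the output unchanged, as long as no value saturates.

```python
def pencil_filter(gray, radius=1):
    """Illustrative pencil-sketch filter (assumed construction, not the paper's code).

    gray: grayscale image as a list of rows of ints in 0..255.
    Returns an image of the same size with dark strokes at edges on a white page.
    """
    height, width = len(gray), len(gray[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            # Grayscale dilation: brightest pixel in the square window,
            # with the window clipped at the image borders.
            local_max = max(
                gray[j][i]
                for j in range(max(0, y - radius), min(height, y + radius + 1))
                for i in range(max(0, x - radius), min(width, x + radius + 1))
            )
            # Dilated minus original: large next to an edge, zero in flat regions.
            edge_strength = local_max - gray[y][x]
            # Invert so that edges become dark strokes on a white background.
            row.append(255 - edge_strength)
        out.append(row)
    return out


# A vertical edge seen under two lighting levels (uniform +50 brightness offset).
dark = [[50, 50, 200, 200] for _ in range(4)]
bright = [[value + 50 for value in row] for row in dark]

# The filtered images are identical, so the offset has been removed.
same_output = pencil_filter(dark) == pencil_filter(bright)
```

In this toy case both inputs yield the same stroke image, which a downstream detector would then see regardless of the brightness offset. Real illumination changes are not purely additive, so this shows only the intuition, not the robustness reported in the abstract.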
