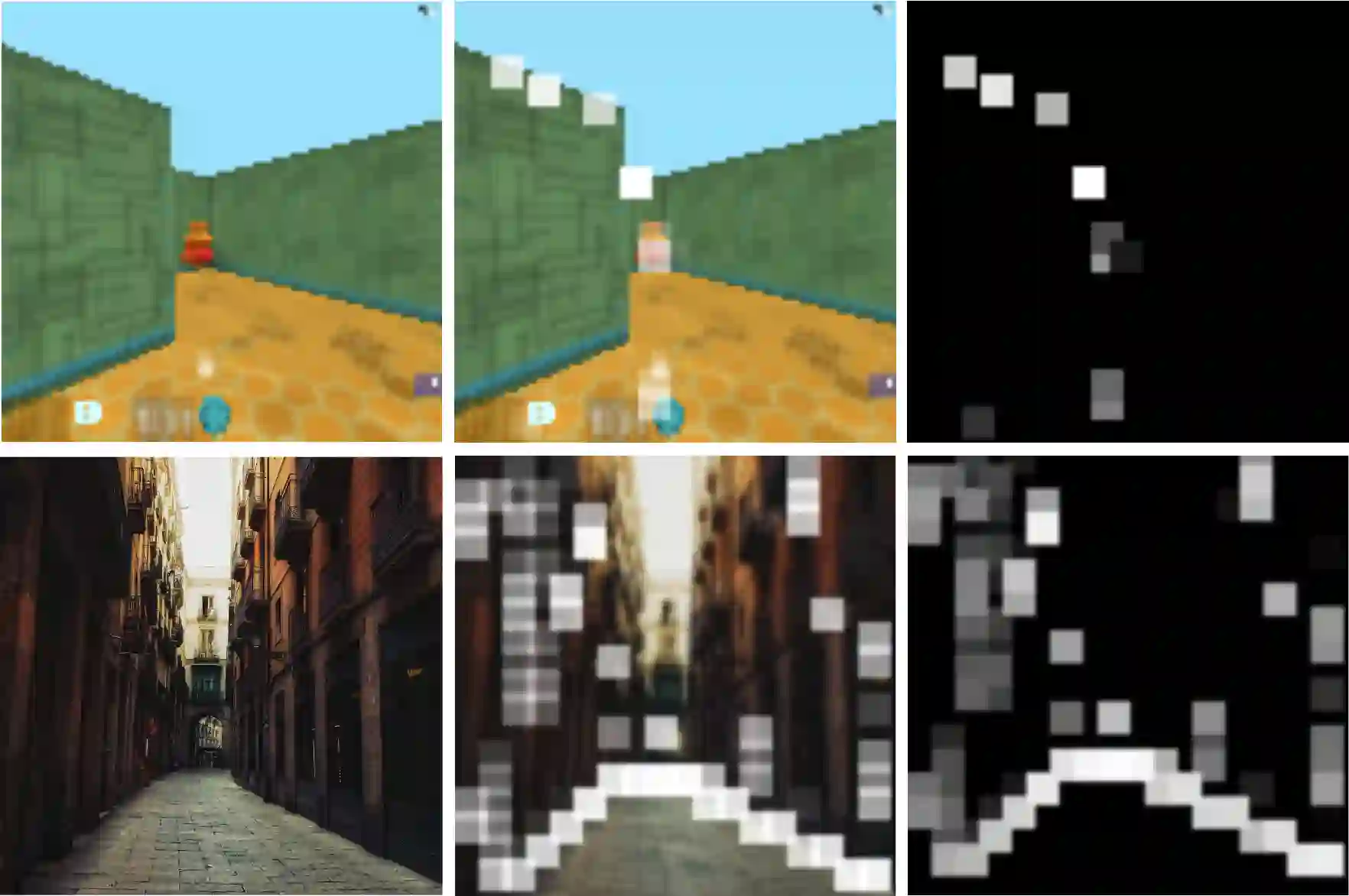

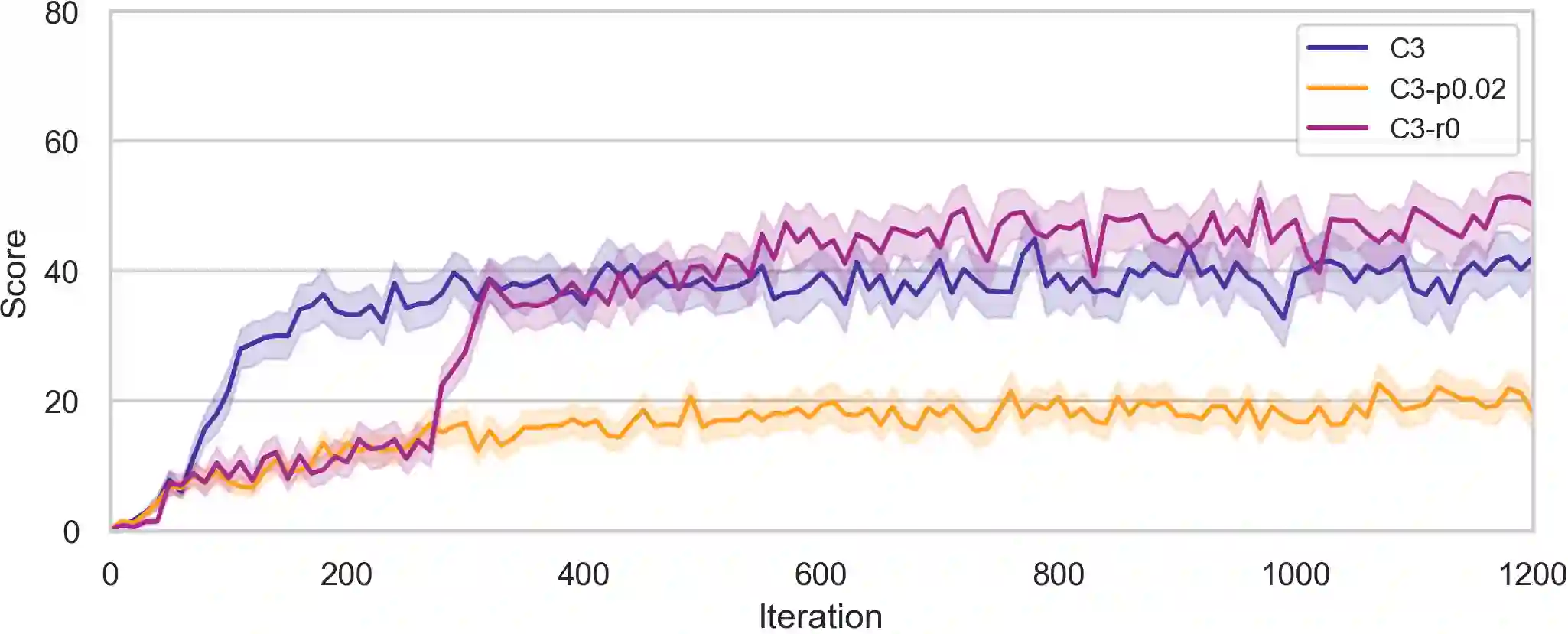

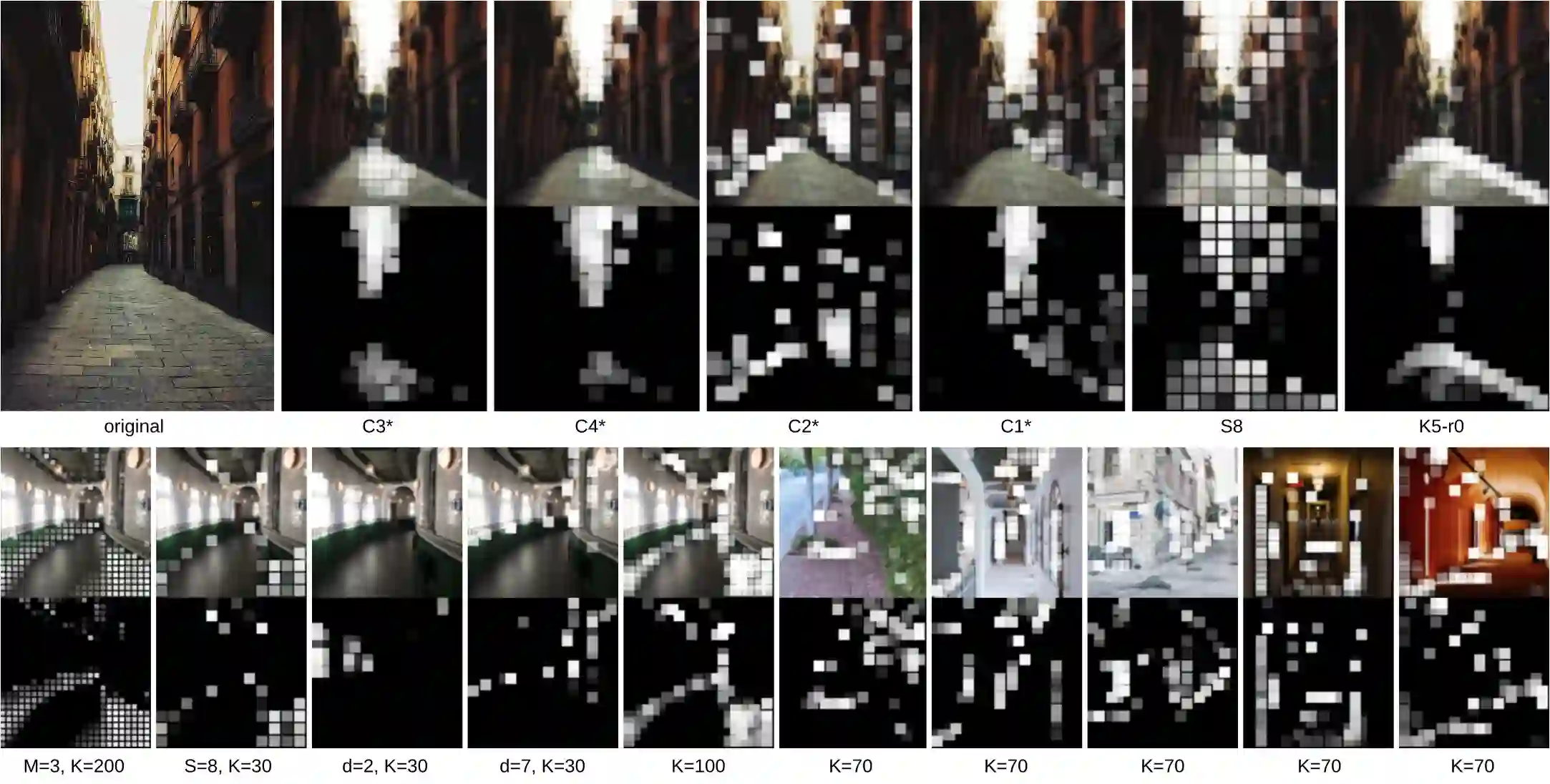

Vision-guided navigation requires processing complex visual information to inform task-orientated decisions. Applications include autonomous robots, self-driving cars, and assistive vision for humans. A key element is the extraction and selection of relevant features in pixel space upon which to base action choices, a task for which machine learning techniques are well suited. However, deep reinforcement learning agents trained in simulation often perform unsatisfactorily when deployed in the real world, owing to perceptual differences known as the $\textit{reality gap}$. One approach to bridging this gap that has yet to be explored is self-attention. In this paper we (1) perform a systematic exploration of the hyperparameter space for self-attention-based navigation of 3D environments and qualitatively appraise the behaviour observed with different hyperparameter sets, including their ability to generalise; (2) present strategies to improve the agents' generalisation abilities and navigation behaviour; and (3) show how models trained in simulation are capable of processing real-world images meaningfully in real time. To our knowledge, this is the first demonstration of a self-attention-based agent successfully trained to navigate a 3D action space, using fewer than 4000 parameters.
