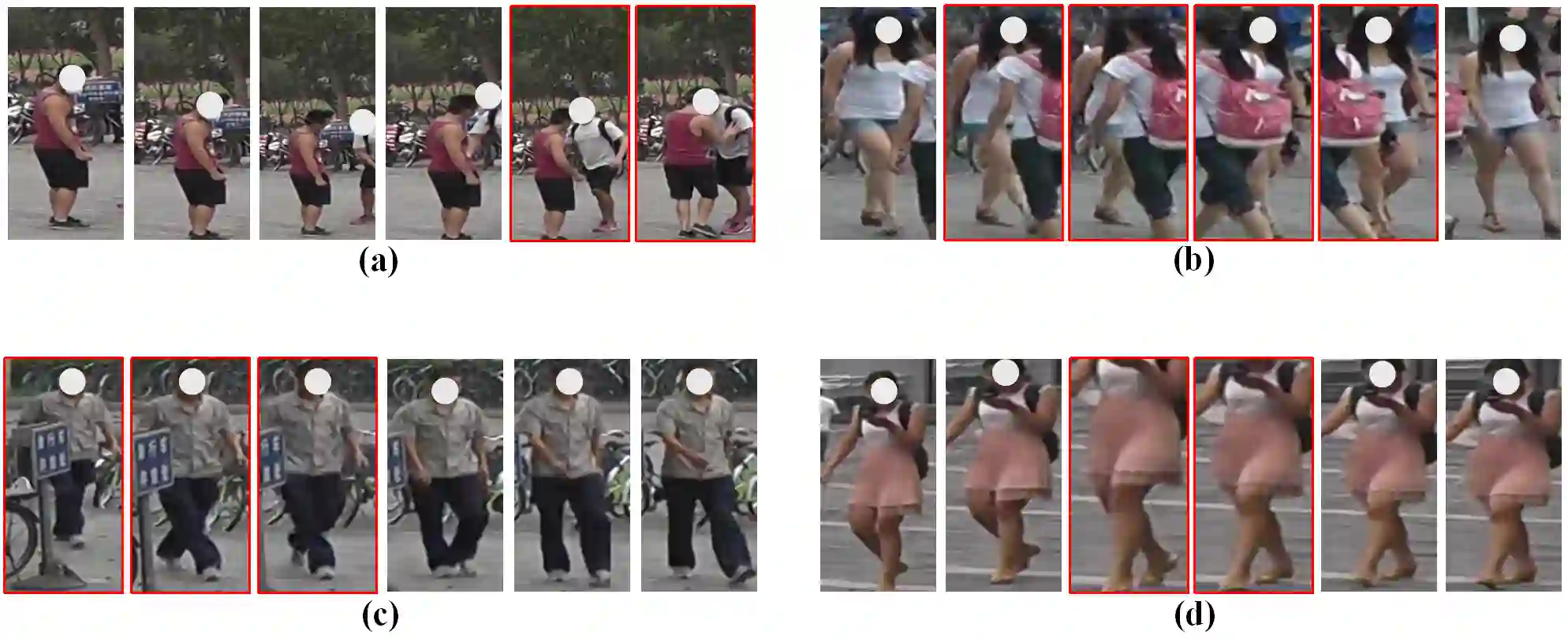

Video-based person re-identification (ReID) is challenging because video frames contain various forms of interference. Recent approaches handle this problem using temporal aggregation strategies. In this work, we propose a novel Context Sensing Attention Network (CSA-Net), which improves both the frame feature extraction and temporal aggregation steps. First, we introduce the Context Sensing Channel Attention (CSCA) module, which emphasizes responses from informative channels for each frame. These informative channels are identified with reference not only to each individual frame, but also to the content of the entire sequence. CSCA therefore exploits both the individuality of each frame and the global context of the sequence. Second, we propose the Contrastive Feature Aggregation (CFA) module, which predicts frame weights for temporal aggregation. Here, the weight for each frame is determined in a contrastive manner: not only by the quality of that frame, but also by the average quality of the other frames in the sequence. CFA therefore effectively promotes the contribution of relatively good frames. Extensive experimental results on four datasets show that CSA-Net consistently achieves state-of-the-art performance.
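To make the two ideas concrete, the following NumPy sketch illustrates them under simplifying assumptions; it is not the CSA-Net implementation. The function names, the projection matrices `w_frame` and `w_seq`, the sigmoid gating, and the softmax over "own quality minus mean quality of the other frames" are all hypothetical choices made for illustration. The abstract specifies only that channel attention uses both frame-level and sequence-level context, and that frame weights depend on each frame's quality relative to the others.

```python
import numpy as np


def context_channel_attention(feats, w_frame, w_seq):
    """Gate channels using per-frame and whole-sequence descriptors.

    feats:   (T, C, H, W) feature maps for T frames.
    w_frame: (C, C) hypothetical projection of the per-frame descriptor.
    w_seq:   (C, C) hypothetical projection of the sequence descriptor.
    """
    # Per-frame channel descriptor via global average pooling: (T, C).
    frame_desc = feats.mean(axis=(2, 3))
    # Sequence-level descriptor: average of the frame descriptors, (1, C).
    seq_desc = frame_desc.mean(axis=0, keepdims=True)
    # Combine both sources of context, then squash to (0, 1) gates.
    logits = frame_desc @ w_frame + seq_desc @ w_seq
    gates = 1.0 / (1.0 + np.exp(-logits))
    # Rescale every channel of every frame by its gate.
    return feats * gates[:, :, None, None]


def contrastive_aggregate(frame_vecs, quality):
    """Aggregate frame vectors with contrastively determined weights.

    frame_vecs: (T, D) one feature vector per frame, with T >= 2.
    quality:    (T,) scalar quality score per frame (assumed given here;
                in a real model it would be predicted by a network).
    """
    t = quality.shape[0]
    # Mean quality of all frames except the current one.
    others_mean = (quality.sum() - quality) / (t - 1)
    # Contrast: how much better a frame is than the rest of the sequence.
    contrast = quality - others_mean
    # Numerically stable softmax over the contrast scores.
    exp = np.exp(contrast - contrast.max())
    weights = exp / exp.sum()
    # Weighted sum over frames gives one sequence-level vector of shape (D,).
    return weights @ frame_vecs, weights
```

In this sketch a frame whose quality exceeds the mean of the remaining frames receives a larger weight, which mirrors the stated goal of promoting relatively good frames within a sequence.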
