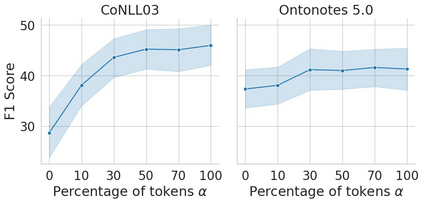

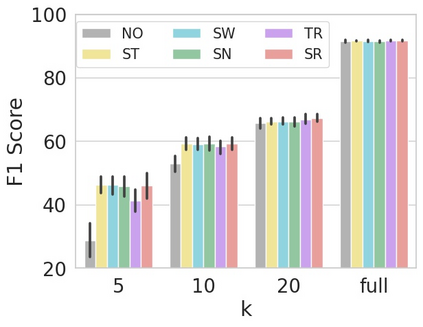

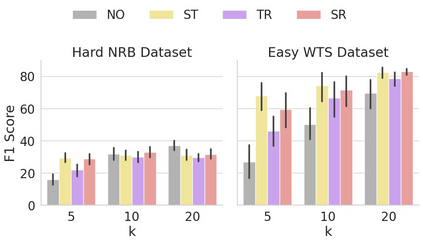

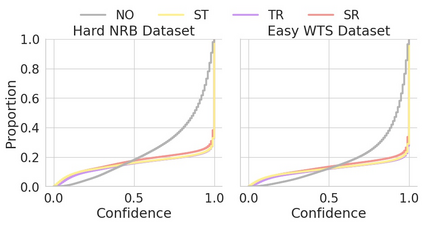

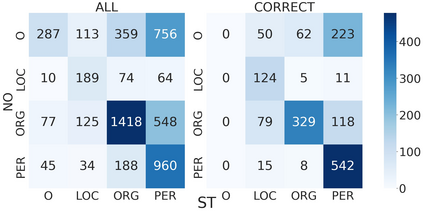

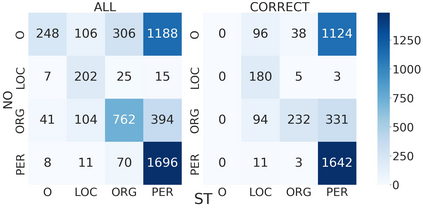

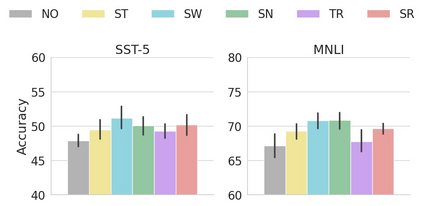

Demonstration-based learning has shown great potential in stimulating the abilities of pretrained language models in limited-data scenarios. Simply augmenting the input with a few demonstrations can significantly improve performance on few-shot NER. However, why such demonstrations benefit the learning process remains unclear, since there is no explicit alignment between the demonstrations and the predictions. In this paper, we design pathological demonstrations by gradually removing intuitively useful information from the standard ones to take a deep dive into the robustness of demonstration-based sequence labeling, and show that (1) demonstrations composed of random tokens still make the model a better few-shot learner; (2) the length of random demonstrations and the relevance of random tokens are the main factors affecting performance; (3) demonstrations increase the confidence of model predictions on captured superficial patterns. We have publicly released our code at https://github.com/SALT-NLP/RobustDemo.
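To make the setup concrete, the sketch below illustrates how an input sentence might be augmented with a standard demonstration and with a random-token ("pathological") one. It is a minimal illustration, not the released implementation: the `"<sentence> <entity> is <label>."` template, the `[SEP]` separator, and the helper names `standard_demo`, `random_demo`, and `augment` are all assumptions introduced here.

```python
import random

SEP = "[SEP]"  # assumed separator; the actual token depends on the tokenizer


def standard_demo(examples):
    """Join (sentence, entity, label) triples into one demonstration string.

    The "<sentence> <entity> is <label>." template is an assumption.
    """
    return f" {SEP} ".join(f"{sent} {ent} is {label}." for sent, ent, label in examples)


def random_demo(examples, vocab, rng):
    """Pathological variant: swap every non-separator token for a random
    vocabulary token, so the demonstration keeps its length but loses its content."""
    tokens = standard_demo(examples).split()
    return " ".join(t if t == SEP else rng.choice(vocab) for t in tokens)


def augment(sentence, demo):
    """Append a demonstration to the input sentence."""
    return f"{sentence} {SEP} {demo}"


if __name__ == "__main__":
    # Hypothetical toy data, for illustration only.
    examples = [
        ("Obama visited Paris .", "Obama", "PER"),
        ("Obama visited Paris .", "Paris", "LOC"),
    ]
    vocab = ["table", "green", "run", "seven", "cloud"]
    rng = random.Random(0)
    print(augment("Merkel flew to Rome .", standard_demo(examples)))
    print(augment("Merkel flew to Rome .", random_demo(examples, vocab, rng)))
```

Because the random variant has the same length as the standard one, comparing the two isolates the effect of demonstration content. Varying the number of tokens or the vocabulary they are drawn from would correspond to the length and relevance factors named in finding (2).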
