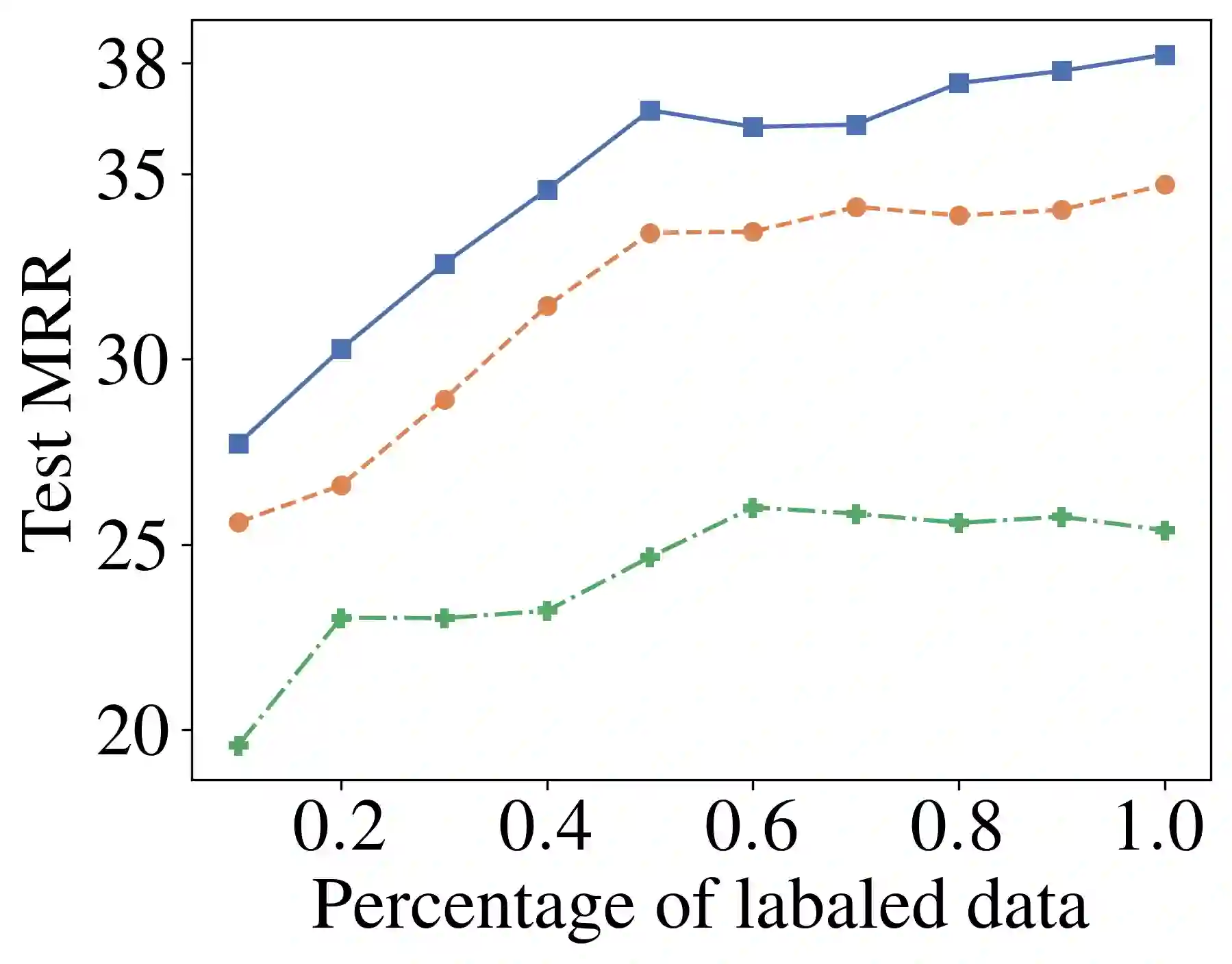

Graph neural network (GNN) pre-training methods have been proposed to enhance the power of GNNs. Specifically, a GNN is first pre-trained on a large-scale unlabeled graph and then fine-tuned on a separate, small labeled graph for downstream applications such as node classification. One popular pre-training method masks out a proportion of the edges and trains a GNN to recover them. However, such a generative method suffers from graph mismatch: the masked graph fed to the GNN deviates from the original graph. To alleviate this issue, we propose DiP-GNN (Discriminative Pre-training of Graph Neural Networks). Specifically, we train a generator to recover the identities of the masked edges, and simultaneously train a discriminator to distinguish the generated edges from the original graph's edges. In our framework, the graph seen by the discriminator better matches the original graph because the generator can recover a proportion of the masked edges. Extensive experiments on large-scale homogeneous and heterogeneous graphs demonstrate the effectiveness of the proposed framework.
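
The data flow described in the abstract (mask edges, let a generator fill them in, hand the filled-in graph to a discriminator) can be illustrated with a small sketch. This is not the authors' implementation: `toy_generator` is a hypothetical stand-in that returns the true endpoint with a fixed probability instead of a trained GNN, edges are treated as directed `(source, target)` pairs, and labeling a correctly recovered edge as "original" is an assumption of this sketch. All function and parameter names are made up for illustration.

```python
import random


def mask_edges(edges, mask_ratio, rng):
    """Split the edge list into (kept, masked) by sampling a fraction to hide."""
    n_mask = int(len(edges) * mask_ratio)
    masked_idx = set(rng.sample(range(len(edges)), n_mask))
    kept = [e for i, e in enumerate(edges) if i not in masked_idx]
    masked = [e for i, e in enumerate(edges) if i in masked_idx]
    return kept, masked


def toy_generator(candidates, true_target, rng, accuracy):
    """Hypothetical stand-in for a trained generator.

    Returns the true target with probability `accuracy`,
    otherwise a uniformly random candidate node.
    """
    if rng.random() < accuracy:
        return true_target
    return rng.choice(candidates)


def build_discriminator_input(edges, num_nodes, mask_ratio=0.3,
                              accuracy=0.5, seed=0):
    """Return (kept_edges, labeled_edges).

    `kept_edges` is what a purely generative method would feed the GNN.
    `labeled_edges` is what the discriminator sees: kept edges plus
    generator-filled edges, each tagged 1 (in the original graph) or 0.
    """
    rng = random.Random(seed)
    kept, masked = mask_edges(edges, mask_ratio, rng)
    original = set(edges)

    # Unmasked edges are original by construction.
    labeled = [(u, v, 1) for (u, v) in kept]

    # For each masked edge, the generator proposes a target for the source.
    for (u, v) in masked:
        candidates = [n for n in range(num_nodes) if n != u]
        v_hat = toy_generator(candidates, v, rng, accuracy)
        # Assumption: an edge counts as "original" if it exists in the graph.
        labeled.append((u, v_hat, int((u, v_hat) in original)))
    return kept, labeled


if __name__ == "__main__":
    edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2), (1, 3)]
    kept, labeled = build_discriminator_input(edges, num_nodes=4, mask_ratio=0.5)
    print("masked-graph edges:", len(kept))
    print("discriminator edges:", len(labeled))
```

The discriminator's input always has as many edges as the original graph, whereas the masked graph loses a `mask_ratio` fraction of them. The better the generator, the more of the filled-in edges coincide with original ones, which is the sense in which the discriminator's graph "better matches" the original.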
