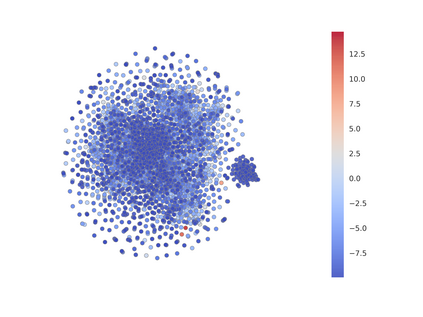

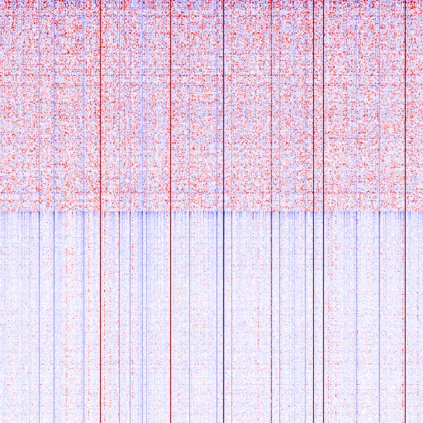

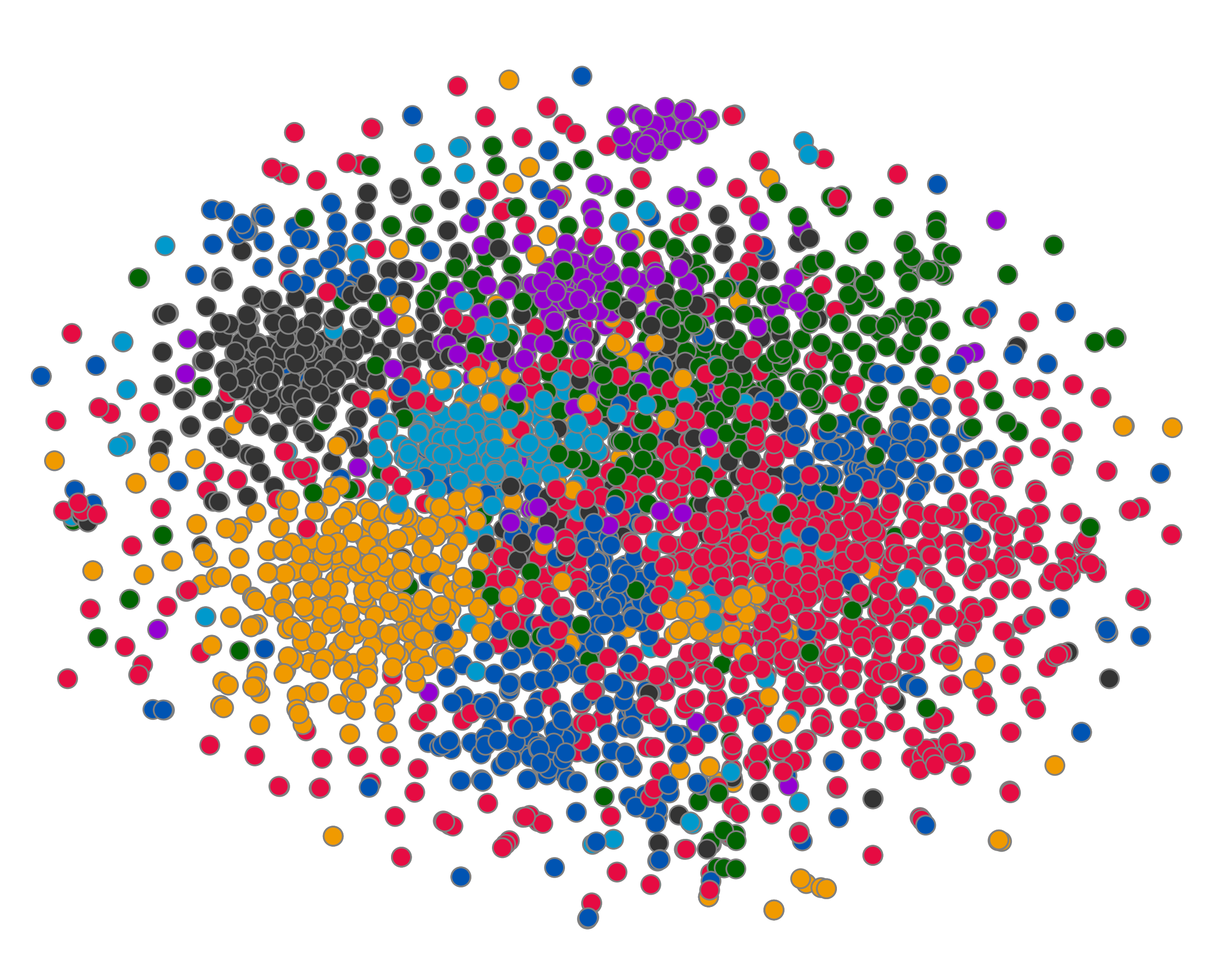

We present Deep Graph Infomax (DGI), a general approach for learning node representations within graph-structured data in an unsupervised manner. DGI relies on maximizing mutual information between patch representations and corresponding high-level summaries of graphs, both derived using established graph convolutional network (GCN) architectures. The learnt patch representations summarize subgraphs centered around nodes of interest, and can thus be reused for downstream node-wise learning tasks. In contrast to most prior approaches to unsupervised learning with GCNs, DGI does not rely on random walk objectives, and is readily applicable to both transductive and inductive learning setups. We demonstrate competitive performance on a variety of node classification benchmarks, which at times even exceeds the performance of supervised learning.
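
The abstract states the objective only at a high level. As a rough illustration, the Python sketch below computes a DGI-style loss for a single forward pass. It relies on design choices that the abstract does not specify, so they are assumptions here, based on our reading of the full DGI paper. Corruption shuffles the node-feature rows while keeping the adjacency fixed. The readout applies a sigmoid to the mean patch representation. A bilinear discriminator scores each (patch, summary) pair, and a binary cross-entropy objective serves as a proxy for maximizing mutual information. The encoder is simplified to one GCN layer with ReLU. All function names (`normalize_adjacency`, `gcn_encoder`, `readout`, `discriminator`, `dgi_loss`) are hypothetical. The sketch uses plain Python lists rather than a tensor library and performs no training.

```python
import math
import random
from typing import List

# Minimal DGI-style sketch; helper names and design choices are assumptions, not the authors' code.
Matrix = List[List[float]]
Vector = List[float]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def normalize_adjacency(adj: Matrix) -> Matrix:
    """GCN propagation matrix D^{-1/2} (A + I) D^{-1/2}."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_encoder(a_norm: Matrix, x: Matrix, w: Matrix) -> Matrix:
    """One GCN layer, H = ReLU(A_norm X W); row i is the patch representation of node i."""
    h = matmul(matmul(a_norm, x), w)
    return [[max(0.0, v) for v in row] for row in h]


def readout(h: Matrix) -> Vector:
    """Assumed readout: summary s = sigmoid(mean over nodes of the patch representations)."""
    n = len(h)
    return [1.0 / (1.0 + math.exp(-sum(row[j] for row in h) / n)) for j in range(len(h[0]))]


def discriminator(h_i: Vector, w_d: Matrix, s: Vector) -> float:
    """Assumed bilinear score sigmoid(h_i^T W_d s): probability that (h_i, s) is a real pair."""
    w_s = [sum(w_d[r][c] * s[c] for c in range(len(s))) for r in range(len(w_d))]
    logit = sum(a * b for a, b in zip(h_i, w_s))
    return 1.0 / (1.0 + math.exp(-logit))


def dgi_loss(adj: Matrix, x: Matrix, w: Matrix, w_d: Matrix, rng: random.Random) -> float:
    """Binary cross-entropy over real (positive) and corrupted (negative) patch/summary pairs."""
    a_norm = normalize_adjacency(adj)
    h_pos = gcn_encoder(a_norm, x, w)
    s = readout(h_pos)
    # Corruption (assumed): shuffle the rows of X while keeping the graph structure fixed.
    perm = list(range(len(x)))
    rng.shuffle(perm)
    h_neg = gcn_encoder(a_norm, [x[p] for p in perm], w)
    eps = 1e-12  # guards against log(0)
    pos = [math.log(discriminator(h, w_d, s) + eps) for h in h_pos]
    neg = [math.log(1.0 - discriminator(h, w_d, s) + eps) for h in h_neg]
    return -(sum(pos) + sum(neg)) / (len(pos) + len(neg))


# Usage on a 4-node path graph with 2 input features and 3 hidden units.
rng = random.Random(0)
adj = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.2]]
w = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(2)]
w_d = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
loss = dgi_loss(adj, x, w, w_d, rng)
print(f"DGI loss for one forward pass: {loss:.4f}")
```

Minimizing this loss pushes the discriminator to score real (patch, summary) pairs high and corrupted pairs low. In this sense the encoder is trained to maximize mutual information between patches and the graph summary. In practice, `w` and `w_d` would be optimized with an autodiff framework rather than left at their random initial values.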
