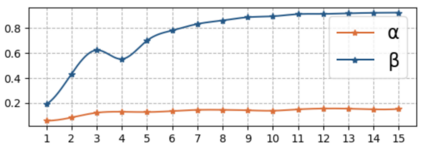

The goal of visual answer localization (VAL) in videos is to locate a relevant and concise temporal clip in a video as the answer to a given natural language question. Early methods model the interaction between video and text and use a visual predictor to predict the visual answer. Later work showed that a textual predictor operating on subtitles localizes the answer more precisely. However, these existing methods still suffer from cross-modal knowledge deviation arising from visual frames or textual subtitles. In this paper, we propose a cross-modal mutual knowledge transfer span localization (MutualSL) method to reduce this knowledge deviation. MutualSL contains both a visual predictor and a textual predictor and expects their predictions to be consistent, which promotes semantic knowledge understanding across modalities. On this basis, we design a one-way dynamic loss function that dynamically adjusts the proportion of knowledge transfer. We conducted extensive experiments on three public datasets. The experimental results show that our method outperforms competitive state-of-the-art (SOTA) methods, demonstrating its effectiveness.
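
The abstract does not spell out the loss, so the following is only a minimal sketch, assuming each predictor outputs start and end logits over the same sequence of frames. It illustrates how supervised span losses for a visual and a textual predictor could be combined with a KL consistency term that transfers knowledge in one direction. The teacher-selection rule, the weight `alpha`, and all function names (`mutual_span_loss`, `span_ce`, `kl`) are hypothetical illustrations, not the MutualSL one-way dynamic loss itself.

```python
import math
from typing import List, Tuple

Logits = List[float]


def softmax(logits: Logits) -> List[float]:
    """Numerically stable softmax over per-frame scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def span_ce(logits: Logits, target: int) -> float:
    """Cross-entropy of one boundary (start or end) against the gold frame index."""
    return -math.log(softmax(logits)[target])


def kl(p: List[float], q: List[float], eps: float = 1e-12) -> float:
    """KL(p || q). p is the teacher distribution; in an autodiff framework it
    would sit behind a stop-gradient so that only the student is updated."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def mutual_span_loss(
    vis: Tuple[Logits, Logits],
    txt: Tuple[Logits, Logits],
    gold: Tuple[int, int],
) -> Tuple[float, float, str]:
    """Return (total_loss, alpha, teacher_name) for one question-video pair.

    `vis` and `txt` are (start_logits, end_logits) over the same frames.
    """
    s, e = gold
    ce_vis = span_ce(vis[0], s) + span_ce(vis[1], e)
    ce_txt = span_ce(txt[0], s) + span_ce(txt[1], e)

    # Hypothetical one-way rule: the predictor with the lower supervised loss
    # acts as teacher, and knowledge flows only from teacher to student.
    if ce_txt <= ce_vis:
        name, teacher, student, ce_t, ce_s = "textual", txt, vis, ce_txt, ce_vis
    else:
        name, teacher, student, ce_t, ce_s = "visual", vis, txt, ce_vis, ce_txt

    # Hypothetical dynamic weight in [0, 1): transfer more when the gap is larger.
    alpha = (ce_s - ce_t) / (ce_s + ce_t) if ce_s + ce_t > 0 else 0.0

    # Consistency term on both the start and the end distributions.
    transfer = sum(kl(softmax(t), softmax(st)) for t, st in zip(teacher, student))
    return ce_vis + ce_txt + alpha * transfer, alpha, name


# Usage: an uninformative visual head and a confident textual head.
vis = ([0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0])
txt = ([0.0, 5.0, 0.0, 0.0], [0.0, 0.0, 5.0, 0.0])
total, alpha, teacher = mutual_span_loss(vis, txt, gold=(1, 2))
print(teacher)  # textual
```

Choosing the direction per example keeps the transfer one-way, and tying `alpha` to the loss gap is one simple way to make its strength dynamic; the weighting used in the paper may differ.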
