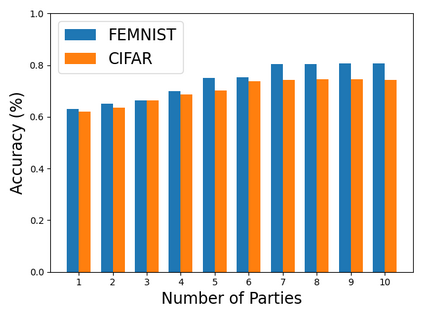

Conventional federated learning, which directly averages model weights, is only possible if all local models share the same architecture. This is a restrictive constraint on collaboration between models with heterogeneous architectures. Sharing predictions instead of weights removes this obstacle and eliminates the risk of white-box inference attacks present in conventional federated learning. However, the predictions of local models are themselves sensitive and can leak private information to the public. Currently, there is no theoretical guarantee that sharing predictions is private and secure. One naive approach to this issue is to add differentially private random noise to the predictions, as in previous privacy work on federated learning. Although random noise perturbation mitigates the privacy concern, it introduces a new problem: a substantial trade-off between privacy budget and model performance. In this paper, we fill this gap by proposing a novel framework called FedMD-NFDP, which applies the newly proposed Noise-Free Differential Privacy (NFDP) mechanism to a federated model distillation framework. NFDP can effectively protect the privacy of local data with minimal sacrifice of model utility. Our extensive experimental results on various datasets validate that FedMD-NFDP delivers not only comparable utility and communication efficiency but also a noise-free differential privacy guarantee. We also demonstrate the feasibility of FedMD-NFDP by considering both IID and non-IID settings, heterogeneous model architectures, and unlabelled public datasets from a different distribution.
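To make the trade-off in the naive baseline concrete, the following is a minimal sketch of prediction sharing with added noise. It is not the NFDP mechanism, which the abstract does not specify. The function names, the choice of Laplace noise, and the L1 sensitivity of 2 for a class-probability vector are all illustrative assumptions rather than details taken from the paper.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def perturb_predictions(preds, epsilon, sensitivity=2.0, rng=None):
    """Add Laplace noise to one party's predictions on the public data.

    preds: list of per-sample class-probability lists.
    sensitivity: assumed L1 sensitivity of one probability vector (hypothetical).
    """
    rng = rng or random.Random()
    scale = sensitivity / epsilon  # smaller epsilon means more noise
    return [[p + laplace_noise(scale, rng) for p in row] for row in preds]

def aggregate(party_preds):
    """Average the (possibly noisy) predictions of all parties per sample and class."""
    n_parties = len(party_preds)
    n_samples = len(party_preds[0])
    n_classes = len(party_preds[0][0])
    return [
        [sum(party[i][c] for party in party_preds) / n_parties
         for c in range(n_classes)]
        for i in range(n_samples)
    ]

def mean_abs_error(clean, noisy):
    # Average absolute deviation introduced by the noise.
    diffs = [abs(a - b) for r1, r2 in zip(clean, noisy) for a, b in zip(r1, r2)]
    return sum(diffs) / len(diffs)
```

In this sketch the noise scale grows as `epsilon` shrinks, so a stricter privacy budget distorts the shared predictions more. This is the utility cost that the abstract says a noise-free mechanism is meant to avoid.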
