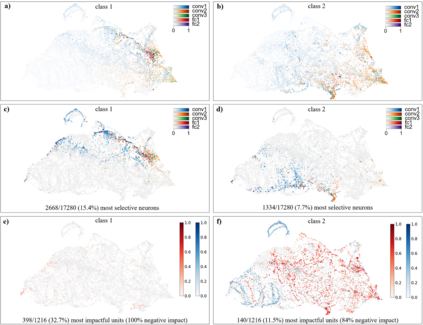

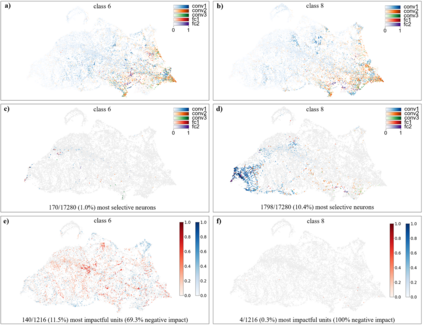

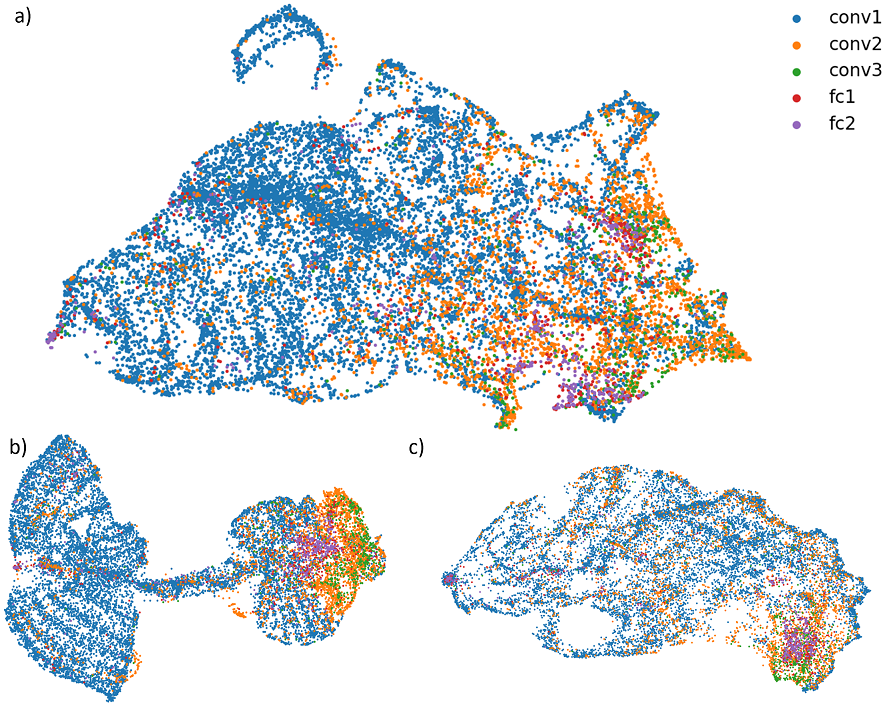

The need for more transparency in the decision-making processes of artificial neural networks is steadily increasing, driven by their application in safety-critical and ethically challenging domains such as autonomous driving and medical diagnostics. We address today's lack of transparency in neural networks by shedding light on the roles that single neurons and groups of neurons play within a network fulfilling a learned task. Inspired by research in neuroscience, we characterize the learned representations through activation patterns and network ablations, revealing functional neuron populations that (a) act jointly in response to specific stimuli or (b) have a similar impact on the network's performance when ablated. We find that neither the magnitude nor the selectivity of a neuron's activation, nor its impact on network performance, is a sufficient stand-alone indicator of its importance for the overall task. We argue that such indicators are essential for future advances in transfer learning and modern neuroscience.
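To make the two kinds of measurement named in the abstract concrete, the following is a minimal sketch of how a per-neuron selectivity score and a per-neuron ablation impact could be computed. It is not the authors' code: the toy two-layer network, its hand-set weights, the function names, and the selectivity formula `(mu_max - mu_rest) / (mu_max + mu_rest)` are all assumptions chosen for illustration, since the abstract does not specify any of them.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def forward(x, w1, w2, ablate=None):
    """Toy two-layer network. `ablate` lists hidden units whose output is zeroed."""
    h = relu(x @ w1)
    if ablate is not None:
        h = h.copy()
        h[:, ablate] = 0.0
    return h, h @ w2

def accuracy(logits, y):
    return float(np.mean(np.argmax(logits, axis=1) == y))

def class_selectivity(h, y, n_classes, eps=1e-12):
    """Assumed index per hidden unit: (mu_max - mu_rest) / (mu_max + mu_rest)."""
    means = np.stack([h[y == c].mean(axis=0) for c in range(n_classes)])
    mu_max = means.max(axis=0)
    mu_rest = (means.sum(axis=0) - mu_max) / (n_classes - 1)
    return (mu_max - mu_rest) / (mu_max + mu_rest + eps)

def ablation_impact(x, y, w1, w2):
    """Accuracy drop caused by zeroing each hidden unit in turn."""
    _, logits = forward(x, w1, w2)
    base = accuracy(logits, y)
    drops = []
    for j in range(w1.shape[1]):
        _, ablated_logits = forward(x, w1, w2, ablate=[j])
        drops.append(base - accuracy(ablated_logits, y))
    return base, np.array(drops)

# Hypothetical data: three classes, inputs are noisy one-hot prototypes.
rng = np.random.default_rng(0)
y = np.repeat(np.arange(3), 50)
x = np.eye(3)[y] + 0.05 * rng.normal(size=(150, 3))

# Hand-set weights (an assumption, not a trained model): hidden units 0-2 each
# track one class, while unit 3 responds equally to all classes and is ignored
# by the output layer.
w1 = np.array([[1.0, 0.0, 0.0, 0.5],
               [0.0, 1.0, 0.0, 0.5],
               [0.0, 0.0, 1.0, 0.5]])
w2 = np.array([[1.0, 0.0, 0.0],
               [0.0, 1.0, 0.0],
               [0.0, 0.0, 1.0],
               [0.0, 0.0, 0.0]])

h, _ = forward(x, w1, w2)
sel = class_selectivity(h, y, n_classes=3)
base, drops = ablation_impact(x, y, w1, w2)

print("mean activation per unit:", h.mean(axis=0))
print("selectivity per unit:    ", sel)
print("baseline accuracy:       ", base)
print("accuracy drop per unit:  ", drops)
```

In this toy setup the three measures happen to be easy to read side by side, because the network is constructed by hand. In a trained network they can disagree, which is why it helps to compute activation magnitude, selectivity, and ablation impact separately rather than treating any one of them as a proxy for the others.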
