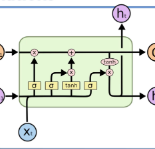

Sequence labeling tasks require computing a sentence representation for each word in a given sentence. With the rise of advanced pretrained language models, one common approach adds a BiLSTM layer to enhance sequence structure information at the output level. Nevertheless, it has been empirically demonstrated (P.-H. Li, 2020) that BiLSTM's potential for generating sentence representations for sequence labeling tasks is constrained, primarily because each cell forms its complete sentence representation from fragments of the past and future sentence representations. In this study, we observed that the entire sentence representation, found in both the first and last cells of BiLSTM, can supplement each cell's sentence representation. Accordingly, we devised a global context mechanism that integrates the entire future and past sentence representations into each cell's sentence representation within BiLSTM. As a demonstration, we embedded the BERT model within BiLSTM and conducted exhaustive experiments on nine datasets for sequence labeling tasks, including named entity recognition (NER), part-of-speech (POS) tagging, and end-to-end aspect-based sentiment analysis (E2E-ABSA). We noted significant improvements in F1 score and accuracy across all examined datasets.
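The idea of supplementing each cell with the whole-sentence representations can be illustrated with a minimal sketch. It assumes the per-token forward and backward hidden states are already available as plain lists, and it fuses them by simple concatenation. The concatenation is a stand-in chosen for illustration, since the abstract does not specify how the mechanism combines the vectors; the function name `add_global_context` and the toy values are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def add_global_context(
    forward_states: Sequence[Vector],
    backward_states: Sequence[Vector],
) -> List[Vector]:
    """Append whole-sentence states to every token's BiLSTM state.

    Assumption: fusion is plain concatenation, used here only to show
    which vectors are combined, not how the paper combines them.
    """
    if not forward_states or len(forward_states) != len(backward_states):
        raise ValueError("need one forward and one backward state per token")

    # The forward pass has read the whole sentence at the last cell.
    full_forward = list(forward_states[-1])
    # The backward pass has read the whole sentence at the first cell.
    full_backward = list(backward_states[0])

    return [
        list(fwd) + list(bwd) + full_forward + full_backward
        for fwd, bwd in zip(forward_states, backward_states)
    ]


# Toy example: three tokens, hidden size 1 per direction.
forward = [[0.1], [0.2], [0.3]]
backward = [[0.9], [0.8], [0.7]]
enriched = add_global_context(forward, backward)
```

In this sketch every token ends up with its own two directional states plus the same two whole-sentence vectors, so a downstream tagger sees the full context at each position.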
