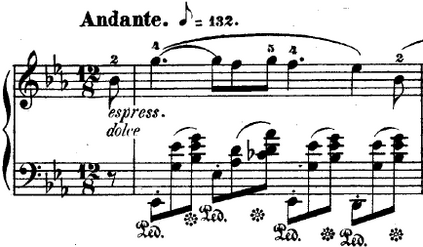

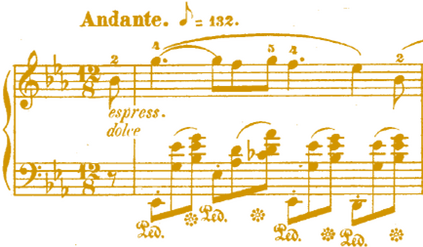

We introduce CLaMP (Contrastive Language-Music Pre-training), which learns cross-modal representations between natural language and symbolic music using a music encoder and a text encoder trained jointly with a contrastive loss. To pre-train CLaMP, we collected a large dataset of 1.4 million music-text pairs. CLaMP employs text dropout as a data augmentation technique and bar patching to represent music data efficiently, reducing sequence length to less than 10% of the original. In addition, we developed a masked music model pre-training objective to enhance the music encoder's comprehension of musical context and structure. CLaMP integrates textual information to enable semantic search and zero-shot classification for symbolic music, surpassing the capabilities of previous models. To support the evaluation of semantic search and music classification, we publicly release WikiMusicText (WikiMT), a dataset of 1010 lead sheets in ABC notation, each accompanied by a title, artist, genre, and description. Compared with state-of-the-art models that require fine-tuning, zero-shot CLaMP demonstrates comparable or superior performance on score-oriented datasets.
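To make the contrastive objective and the zero-shot classification step more concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the abstract only says "contrastive loss", so the symmetric, CLIP-style cross-entropy form, the temperature value of 0.07, and the function names `contrastive_loss` and `zero_shot_classify` are all assumptions made for illustration. The two encoders are not modelled; the sketch takes their output embeddings as plain arrays.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products become cosine similarities."""
    norms = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.maximum(norms, eps)


def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def contrastive_loss(music_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss over a batch of paired embeddings.

    Row i of music_emb and row i of text_emb are assumed to be a matching
    pair; every other row in the batch acts as a negative.
    The temperature of 0.07 is an assumed value, not taken from the source.
    """
    m = l2_normalize(music_emb)
    t = l2_normalize(text_emb)
    logits = m @ t.T / temperature            # shape (batch, batch)
    idx = np.arange(logits.shape[0])
    # Music-to-text: each row should put its mass on the diagonal entry.
    loss_m2t = -log_softmax(logits, axis=1)[idx, idx].mean()
    # Text-to-music: each column should put its mass on the diagonal entry.
    loss_t2m = -log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (loss_m2t + loss_t2m)


def zero_shot_classify(music_emb, label_text_emb):
    """Assign each music embedding to the label whose text embedding is closest.

    label_text_emb holds one row per candidate label, e.g. the text
    encoder's output for each genre name (hypothetical usage).
    """
    sims = l2_normalize(music_emb) @ l2_normalize(label_text_emb).T
    return sims.argmax(axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    emb = rng.normal(size=(4, 8))
    print("aligned pairs loss: ", contrastive_loss(emb, emb))
    print("shuffled pairs loss:", contrastive_loss(emb, emb[::-1]))
```

Under these assumptions, correctly paired embeddings yield a lower loss than mismatched ones, and the same cosine-similarity matrix that drives training is reused at inference time: semantic search ranks music by similarity to a text query, while zero-shot classification picks the most similar label text.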
