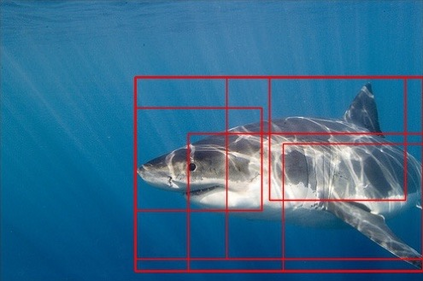

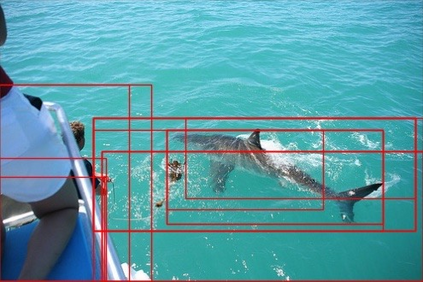

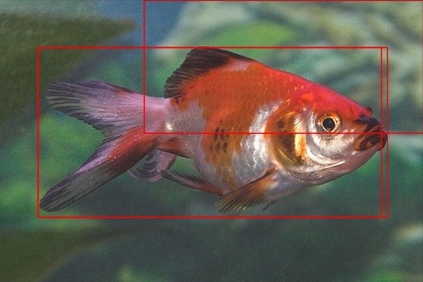

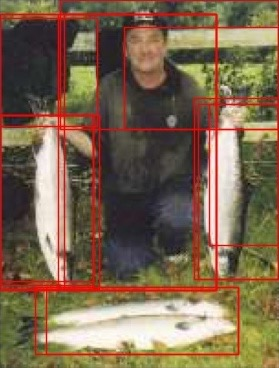

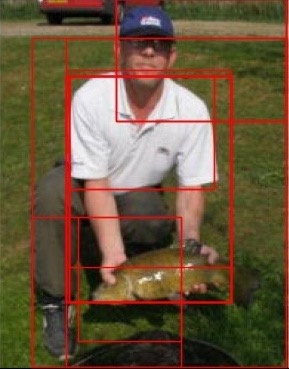

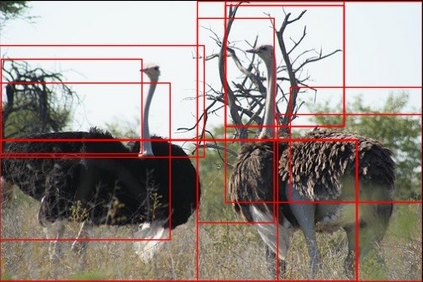

Image-level contrastive representation learning has proven to be highly effective as a generic model for transfer learning. Such generality for transfer learning, however, sacrifices specificity if we are interested in a certain downstream task. We argue that this could be sub-optimal and thus advocate a design principle which encourages alignment between the self-supervised pretext task and the downstream task. In this paper, we follow this principle with a pretraining method specifically designed for the task of object detection. We attain alignment in the following three aspects: 1) object-level representations are introduced via selective search bounding boxes as object proposals; 2) the pretraining network architecture incorporates the same dedicated modules used in the detection pipeline (e.g. FPN); 3) the pretraining is equipped with object detection properties such as object-level translation invariance and scale invariance. Our method, called Selective Object COntrastive learning (SoCo), achieves state-of-the-art results for transfer performance on COCO detection using a Mask R-CNN framework. Code is available at https://github.com/hologerry/SoCo.

翻译:对比图像代表性学习已证明作为转让学习的通用模式非常有效。但是,这种转让学习的通用性,如果我们对某一下游任务感兴趣,则会牺牲特定性。我们争辩说,这可能是次优的,因而倡导了鼓励自我监督的托辞任务与下游任务之间保持一致的设计原则。在本文件中,我们采用专门为物体探测任务设计的培训前方法来遵循这一原则。我们在以下三个方面取得了一致:1)通过选择性搜索捆绑框作为目标提议,引入目标层面的表述;2)培训前网络结构包含探测管道中使用的相同专门模块(如FPN);3)预培训前配备了目标水平变异和变异规模等物体探测特性。我们的方法称为选择性物体凝固学习(SoCoo),在使用Mask R-CNN框架进行CO探测的转移性能上取得了最先进的结果。代码见https://github.com/hologerry/Soco。