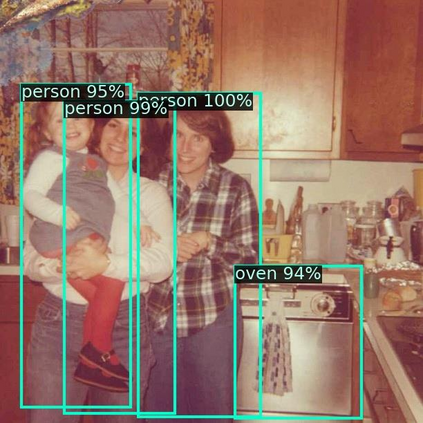

We introduce MixTraining, a new training paradigm for object detection that can improve the performance of existing detectors for free. MixTraining enhances data augmentation by utilizing augmentations of different strengths while excluding the strong augmentations of certain training samples that may be detrimental to training. In addition, it addresses localization noise and missing labels in human annotations by incorporating pseudo boxes that can compensate for these errors. Both capabilities are made possible by bootstrapping on the detector, which can be used to predict how difficult it is to train on a strong augmentation, and to generate reliable pseudo boxes thanks to the robustness of neural networks to labeling errors. MixTraining brings consistent improvements across various detectors on the COCO dataset. In particular, the performance of Faster R-CNN \cite{ren2015faster} with a ResNet-50 \cite{he2016deep} backbone is improved from 41.7 mAP to 44.0 mAP, and the accuracy of Cascade R-CNN \cite{cai2018cascade} with a Swin-Small \cite{liu2021swin} backbone is raised from 50.9 mAP to 52.8 mAP. The code and models will be made publicly available at \url{https://github.com/MendelXu/MixTraining}.
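
The two ideas in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not state the exact selection or matching criteria, so the loss-ratio rule, the score and IoU thresholds, and every name below (`Box`, `use_strong_augmentation`, `mix_boxes`) are assumptions chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Box:
    x1: float
    y1: float
    x2: float
    y2: float
    score: float = 1.0  # human annotations are given score 1.0 here


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    ih = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = iw * ih
    union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter
    return inter / union if union > 0 else 0.0


def use_strong_augmentation(loss_weak: float, loss_strong: float,
                            max_ratio: float = 2.0) -> bool:
    """Hypothetical rule: skip the strong view when the detector's loss on it
    is much larger than on the weak view of the same sample."""
    return loss_strong <= max_ratio * loss_weak


def mix_boxes(human, predicted, score_thr: float = 0.9, iou_thr: float = 0.5):
    """Hypothetical mixing of human boxes and detector pseudo boxes.

    A human box that overlaps a confident prediction is replaced by that
    prediction (localization noise); confident predictions that match no
    human box are appended (missing labels).
    """
    confident = [p for p in predicted if p.score >= score_thr]
    matched = set()
    mixed = []
    for h in human:
        best_idx, best_iou = None, iou_thr
        for i, p in enumerate(confident):
            if i in matched:
                continue
            overlap = iou(h, p)
            if overlap >= best_iou:
                best_idx, best_iou = i, overlap
        if best_idx is None:
            mixed.append(h)  # no reliable pseudo box: keep the human label
        else:
            matched.add(best_idx)
            mixed.append(confident[best_idx])
    mixed.extend(p for i, p in enumerate(confident) if i not in matched)
    return mixed
```

In this sketch, a training loop would call `use_strong_augmentation` per sample to decide which view to train on, and `mix_boxes` to build the targets from the human annotations and the detector's own predictions.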
