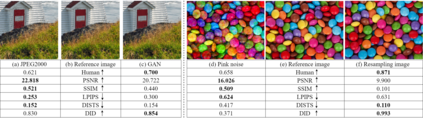

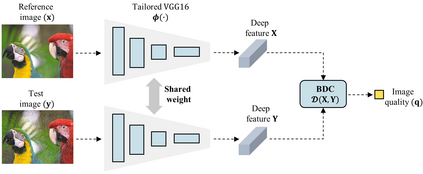

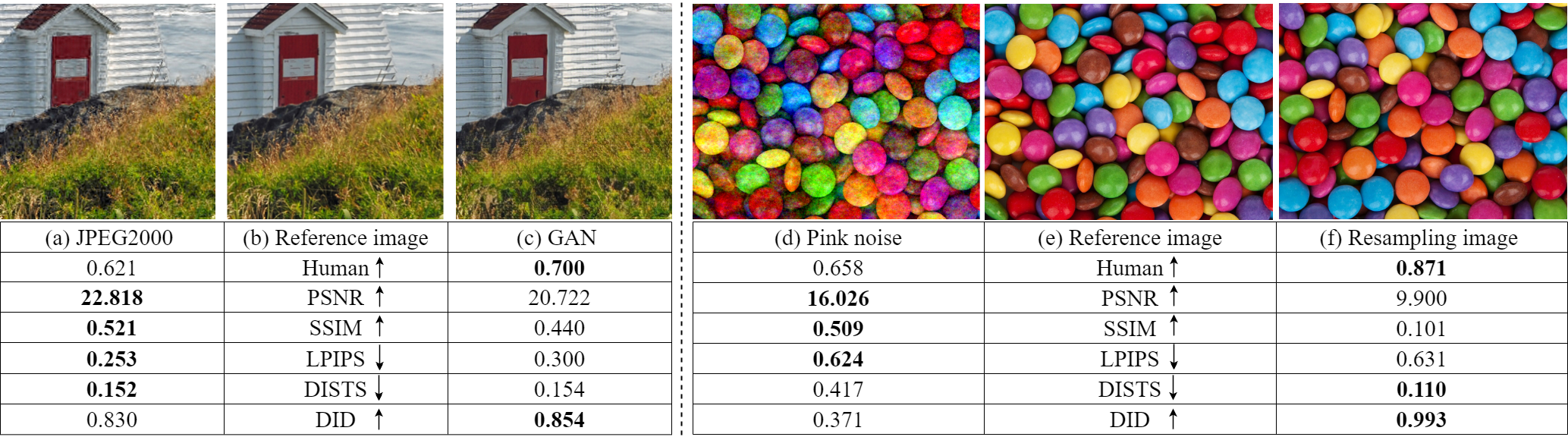

Deep learning-based full-reference image quality assessment (FR-IQA) models typically rely on the feature distance between the reference and distorted images. However, their underlying assumption, that distance in the deep feature domain quantifies quality degradation, is not consistent with invariant texture perception, especially when the images are generated artificially by neural networks. In this paper, we depart from distance-based quality inference on learned features and propose the Deep Image Dependency (DID) based FR-IQA model. Feature dependency enables a high-order comparison of deep features through Brownian distance covariance, which is characterized by the joint distribution of the features from the reference and test images, as well as their marginal distributions. This makes it possible to quantify feature dependency under nonlinear transformations, going well beyond the computation of numerical errors in the feature space. Experiments on image quality prediction, texture image similarity, and geometric invariance validate the superior performance of the proposed measure.
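To make the notion of Brownian distance covariance concrete, the following is a minimal sketch of the standard sample distance covariance and distance correlation, computed with NumPy on generic arrays. It is not the DID model: the abstract does not specify how deep features are extracted or aggregated, so the function names (`pairwise_dist`, `double_center`, `distance_covariance_sq`, `distance_correlation`) and the toy inputs are assumptions made purely for illustration.

```python
import numpy as np


def pairwise_dist(x):
    """Euclidean distance matrix (n, n) for samples x of shape (n, d)."""
    diff = x[:, None, :] - x[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def double_center(d):
    """Subtract row and column means, then add back the grand mean."""
    return (d
            - d.mean(axis=0, keepdims=True)
            - d.mean(axis=1, keepdims=True)
            + d.mean())


def distance_covariance_sq(x, y):
    """Squared sample distance covariance between paired samples x and y."""
    a = double_center(pairwise_dist(x))
    b = double_center(pairwise_dist(y))
    return (a * b).mean()


def distance_correlation(x, y):
    """Sample distance correlation in [0, 1]; returns 0.0 for constant input."""
    dcov2_xy = distance_covariance_sq(x, y)
    dvar2_x = distance_covariance_sq(x, x)
    dvar2_y = distance_covariance_sq(y, y)
    denom = np.sqrt(dvar2_x * dvar2_y)
    if denom <= 0:
        return 0.0
    # Clamp tiny negative values caused by floating-point rounding.
    return float(np.sqrt(max(dcov2_xy, 0.0) / denom))


# Toy data (hypothetical, not image features): y depends on x nonlinearly.
x = np.linspace(-1.0, 1.0, 201).reshape(-1, 1)
y = x ** 2

pearson = float(np.corrcoef(x[:, 0], y[:, 0])[0, 1])
dcor_nonlinear = distance_correlation(x, y)
dcor_affine = distance_correlation(x, 2.0 * x + 3.0)
```

In this toy case the Pearson correlation between `x` and `x ** 2` is essentially zero, while the distance correlation remains clearly positive, and a shift or uniform rescaling of the input leaves it at one. This illustrates why a dependency measure can capture relationships that a plain numerical error or linear correlation in feature space would miss.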
