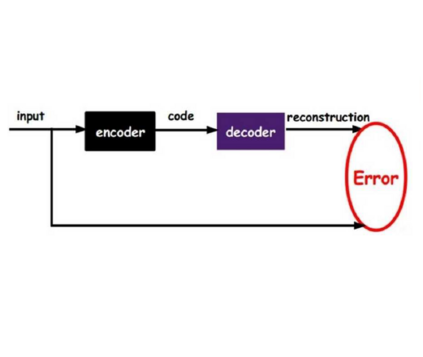

A network supporting deep unsupervised learning is presented. The network is an autoencoder with lateral shortcut connections from the encoder to the decoder at each level of the hierarchy. Because these connections carry detail directly to the decoder, the higher levels of the hierarchy can focus on abstract invariant features. While standard autoencoders are analogous to latent variable models with a single layer of stochastic variables, the proposed network is analogous to hierarchical latent variable models. Learning combines the denoising autoencoder and denoising source separation frameworks. Each layer of the network contributes to the cost function a term that measures the distance between the representations produced by the encoder and the decoder. Since training signals originate from all levels of the network, all layers can learn efficiently even in deep networks. The speedup offered by cost terms from higher levels of the hierarchy and the ability to learn invariant features are demonstrated in experiments.
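The structure described above can be illustrated with a small NumPy sketch. It is not the authors' implementation: the abstract does not specify the layer functions, so the `tanh` encoder, the linear top-down mapping, the fixed mixing `gain` used for the lateral connection, and all names (`init_params`, `encode`, `decode`, `layer_costs`) are assumptions made for illustration. The sketch only shows the shape of the idea: a corrupted encoder pass, a decoder that mixes lateral and top-down signals at each level, and one cost term per level comparing the decoder output with the clean encoder representation.

```python
import numpy as np

def init_params(sizes, rng):
    # Hypothetical parameters: W maps level l up to l+1, V maps level l+1 back down to l.
    W = [0.1 * rng.standard_normal((m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
    V = [0.1 * rng.standard_normal((n, m)) for m, n in zip(sizes[:-1], sizes[1:])]
    return W, V

def encode(x, W):
    # Bottom-up pass; returns the representation at every level (level 0 is the input).
    hs = [x]
    for w in W:
        hs.append(np.tanh(hs[-1] @ w))
    return hs

def decode(hs_noisy, V, gain):
    # Top-down pass. At each level the decoder combines the lateral shortcut
    # (the corrupted encoder activation) with the signal from the level above.
    # The fixed scalar `gain` is an assumed stand-in for a learned combination.
    top = len(V)
    recon = [None] * (top + 1)
    recon[top] = hs_noisy[top]
    for l in range(top - 1, -1, -1):
        top_down = recon[l + 1] @ V[l]
        recon[l] = gain * hs_noisy[l] + (1.0 - gain) * top_down
    return recon

def layer_costs(x, W, V, gain, noise_std, rng):
    # One cost term per level: squared distance between the clean encoder
    # representation and the decoder's reconstruction from the corrupted pass.
    clean = encode(x, W)
    noisy = encode(x + noise_std * rng.standard_normal(x.shape), W)
    recon = decode(noisy, V, gain)
    return [float(np.mean((c - r) ** 2)) for c, r in zip(clean[:-1], recon[:-1])]

rng = np.random.default_rng(0)
sizes = [8, 6, 4]                      # input width and two hidden levels (arbitrary)
W, V = init_params(sizes, rng)
x = rng.standard_normal((5, sizes[0]))
terms = layer_costs(x, W, V, gain=0.5, noise_std=0.3, rng=rng)
total_cost = sum(terms)                # training signal gathered from every level
```

Because `total_cost` sums a term from each level, gradients with respect to `W` and `V` would originate at every layer rather than only at the input reconstruction, which is the property the abstract credits for efficient learning in deep networks.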
