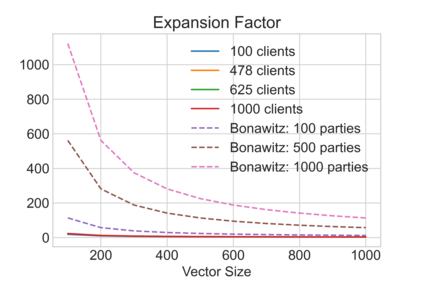

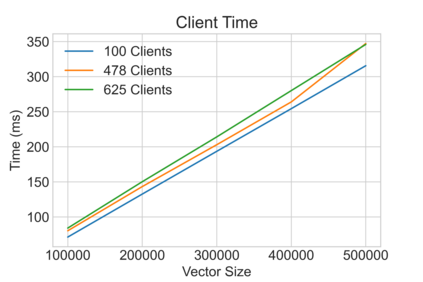

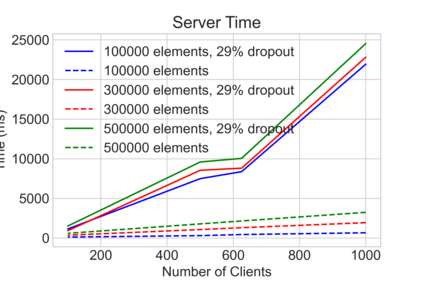

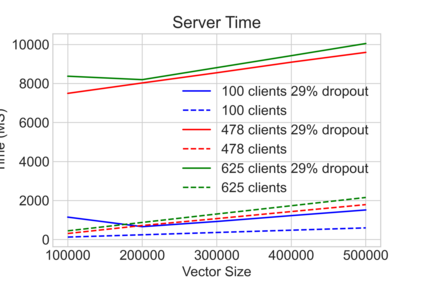

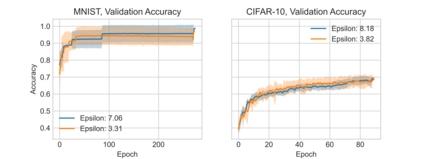

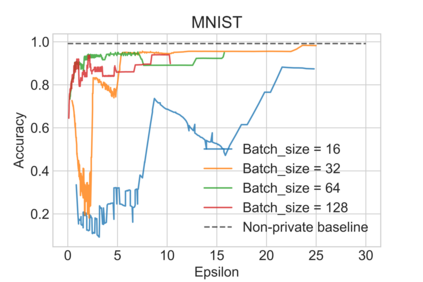

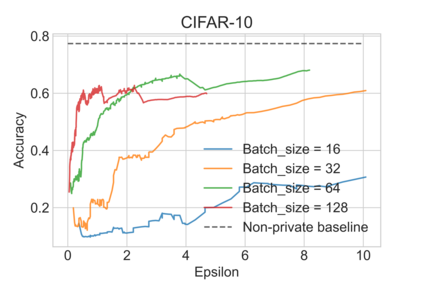

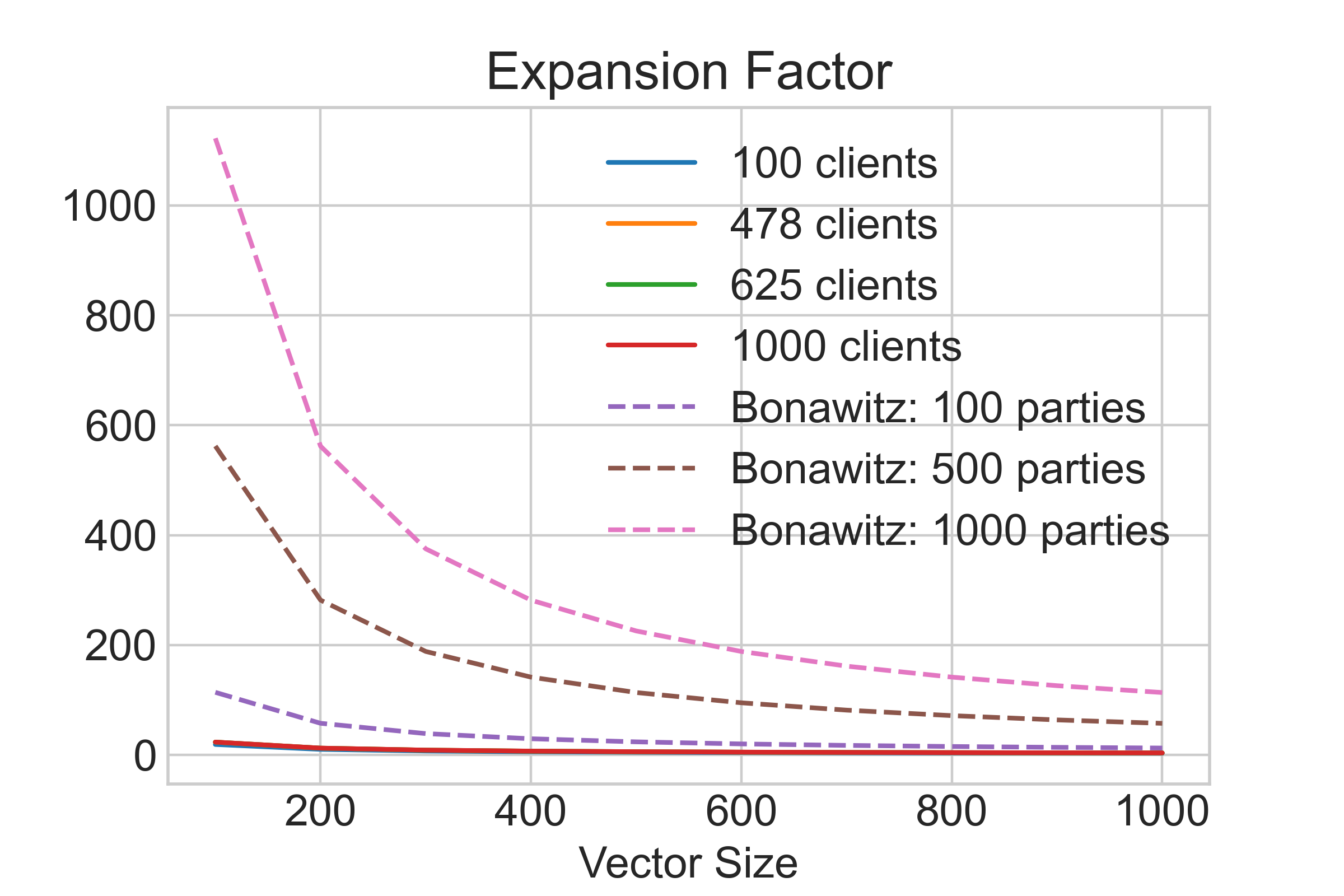

Federated machine learning leverages edge computing to develop models from network user data, but privacy in federated learning remains a major challenge. Techniques using differential privacy have been proposed to address this, but bring their own challenges: many require a trusted third party or else add too much noise to produce useful models. Recent advances in *secure aggregation* using multiparty computation eliminate the need for a third party, but are computationally expensive, especially at scale. We present a new federated learning protocol that leverages a novel differentially private, maliciously secure aggregation protocol based on techniques from Learning With Errors. Our protocol outperforms current state-of-the-art techniques, and empirical results show that it scales to a large number of parties, with optimal accuracy for any differentially private federated learning scheme.
