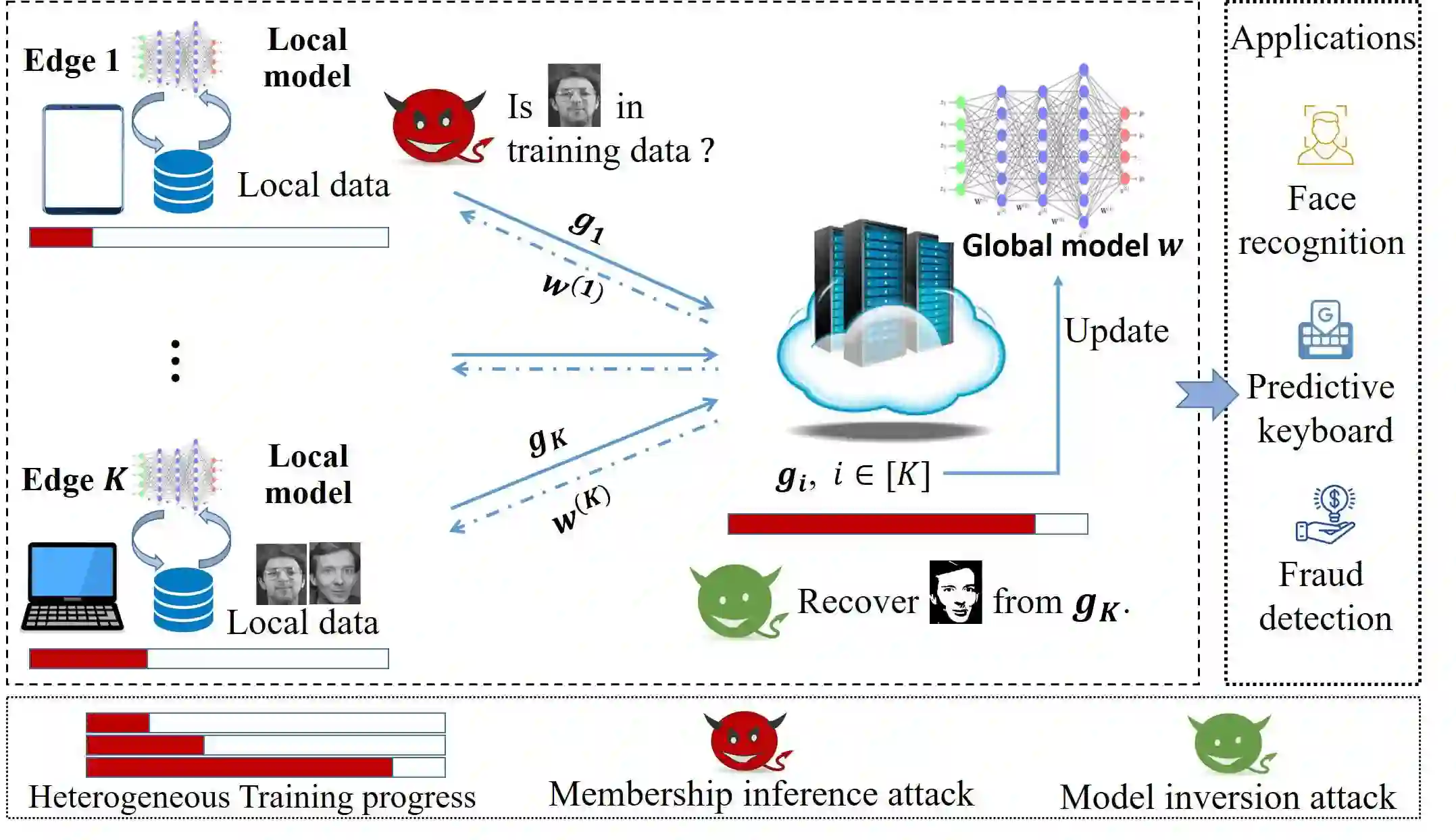

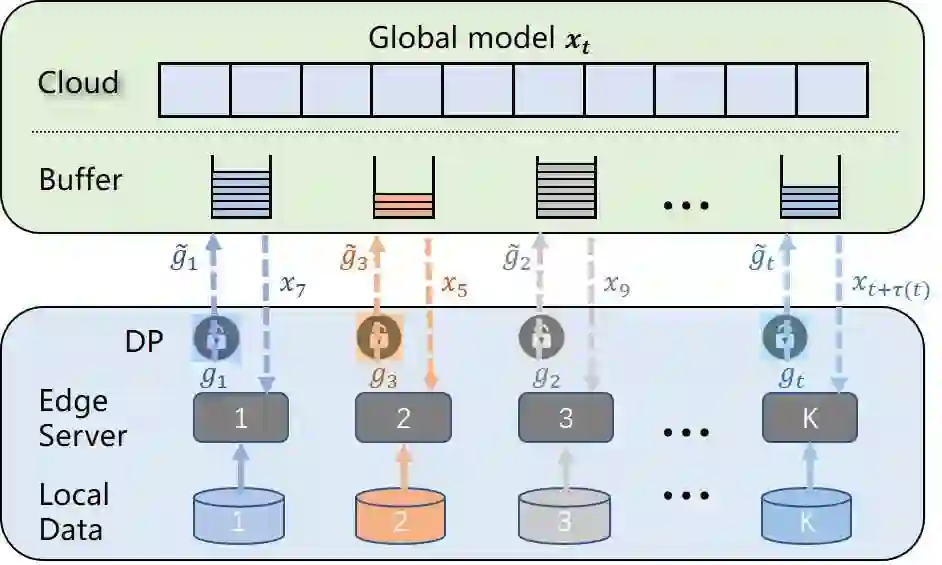

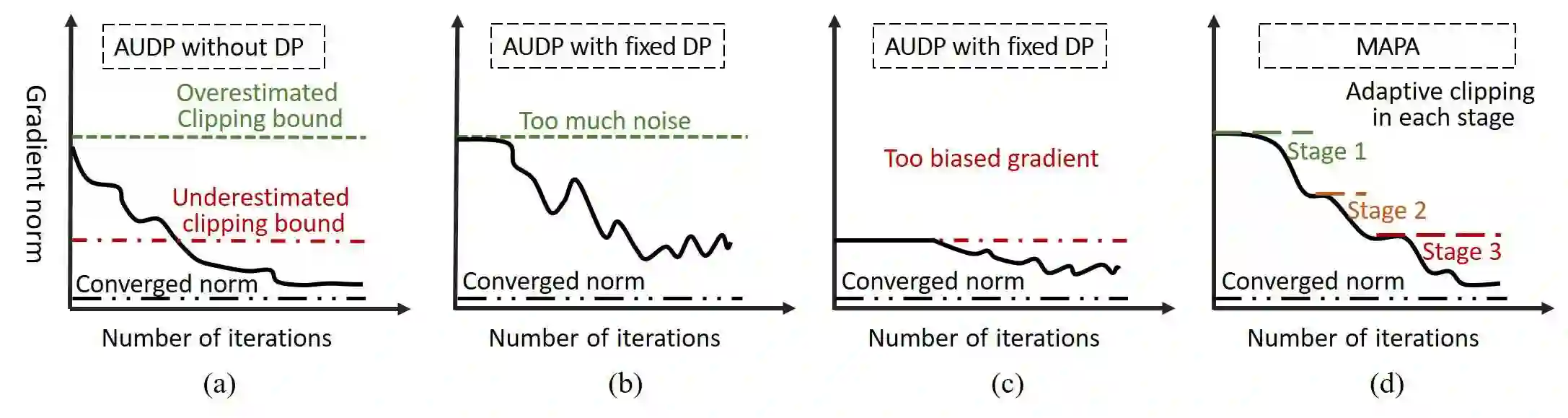

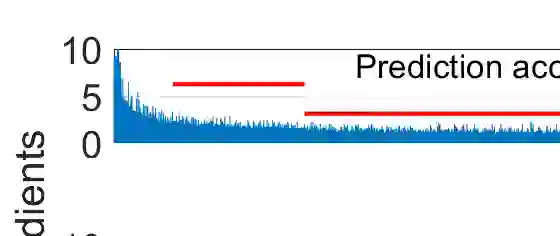

Federated learning has shown great promise in paving the last mile of artificial intelligence, owing to its potential to solve the data isolation problem in large-scale machine learning. In particular, given the heterogeneity of practical edge computing systems, asynchronous federated learning based on edge-cloud collaboration can further improve learning efficiency by significantly reducing the straggler effect. Although no raw data is shared, the open architecture and extensive collaboration of asynchronous federated learning (AFL) still give malicious participants ample opportunity to infer other parties' training data, raising serious privacy concerns. To achieve a rigorous privacy guarantee with high utility, we investigate how to secure asynchronous edge-cloud collaborative federated learning with differential privacy (DP), focusing on the impact of DP on the model convergence of AFL. Specifically, we give the first analysis of the model convergence of AFL under DP and propose a multi-stage adjustable private algorithm (MAPA) that improves the trade-off between model utility and privacy by dynamically adjusting both the noise scale and the learning rate. Through extensive simulations and real-world experiments on an edge-cloud testbed, we demonstrate that MAPA significantly improves both model accuracy and convergence speed while providing a sufficient privacy guarantee.
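The abstract does not spell out MAPA's update rule, so the following is only a generic sketch of the two ingredients it names: a differentially private model update (clipping the gradient, then adding Gaussian noise scaled to the clipping norm, as in DP-SGD) and a multi-stage schedule in which the noise scale and the learning rate change from one stage to the next. The `Stage` schedule, its values, and the function names `clip`, `private_update`, and `train` are hypothetical illustrations, not the paper's algorithm. The sketch also ignores asynchrony (stale updates), edge-cloud communication, and privacy accounting.

```python
import math
import random
from dataclasses import dataclass
from typing import Callable, List

Vector = List[float]


@dataclass
class Stage:
    """One training stage (hypothetical): how many updates, and which noise/step size to use."""
    num_updates: int
    noise_multiplier: float  # sigma: the Gaussian noise std is sigma * clip_norm
    learning_rate: float


def clip(grad: Vector, clip_norm: float) -> Vector:
    """Scale the gradient so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_update(weights: Vector, grad: Vector, clip_norm: float,
                   stage: Stage, rng: random.Random) -> Vector:
    """Clip the gradient, add Gaussian noise (Gaussian mechanism), take one SGD step."""
    clipped = clip(grad, clip_norm)
    std = stage.noise_multiplier * clip_norm
    noisy = [g + rng.gauss(0.0, std) for g in clipped]
    return [w - stage.learning_rate * g for w, g in zip(weights, noisy)]


def train(weights: Vector, grad_fn: Callable[[Vector], Vector],
          stages: List[Stage], clip_norm: float = 1.0, seed: int = 0) -> Vector:
    """Run the stages in order, switching noise scale and learning rate between stages."""
    rng = random.Random(seed)
    for stage in stages:
        for _ in range(stage.num_updates):
            weights = private_update(weights, grad_fn(weights), clip_norm, stage, rng)
    return weights


# Usage: a toy quadratic loss 0.5 * ||w - target||^2, whose gradient is w - target.
target = [3.0, -2.0]
schedule = [  # arbitrary illustrative values: noise and learning rate shrink per stage
    Stage(num_updates=200, noise_multiplier=1.0, learning_rate=0.1),
    Stage(num_updates=200, noise_multiplier=0.5, learning_rate=0.05),
    Stage(num_updates=200, noise_multiplier=0.25, learning_rate=0.02),
]
final = train([0.0, 0.0], lambda w: [wi - ti for wi, ti in zip(w, target)], schedule)
print(final)
```

The staged schedule shows why adjusting both knobs together can help: large steps with heavy noise move the model quickly early on, while later stages with smaller steps damp the injected noise near the optimum. Lower noise in later stages spends more privacy budget per update, so a real schedule would have to be chosen together with a privacy accountant.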

翻译:联邦学习表明,在铺平最后一英里人工智能方面,联邦学习是一个很有希望的办法,因为它在大规模机器学习中具有解决数据孤立问题的巨大潜力。特别是,考虑到实用边缘计算机系统中的异质性,以非同步边球协作为基础的联合学习能够通过大幅降低分流效应,进一步提高学习效率。尽管没有原始数据共享,开放的架构和无同步联邦学习的广泛协作仍然给一些恶意参与者提供大量机会来推断其他缔约方的培训数据,从而导致对隐私的严重关切。为了实现严格的隐私保障,我们调查如何确保无同步边边缘协作联合学习,并采用不同的隐私,侧重于不同隐私对亚远离子体融合模式的影响。形式上,我们首先分析DP下亚远离子球模型的趋同,并提议一个多阶段可调整的私人算法(MAPA),以便通过动态地调整噪音尺度和学习率来改进模型的利弊端。我们通过广泛的模拟和真实的精确度实验,大大地改进了我们的精确度。