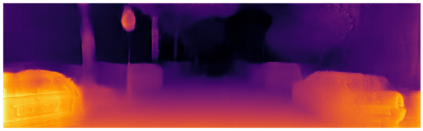

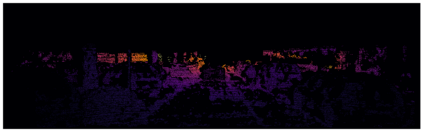

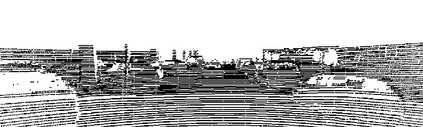

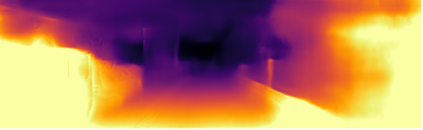

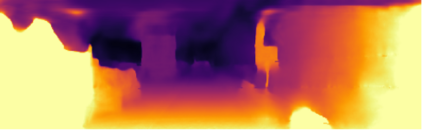

Vision-based depth estimation is a key feature in autonomous systems, which often relies on a single camera or several independent ones. In such a monocular setup, dense depth is obtained with either additional input from one or several expensive LiDARs, e.g., with 64 beams, or camera-only methods, which suffer from scale-ambiguity and infinite-depth problems. In this paper, we propose a new alternative of densely estimating metric depth by combining a monocular camera with a light-weight LiDAR, e.g., with 4 beams, typical of today's automotive-grade mass-produced laser scanners. Inspired by recent self-supervised methods, we introduce a novel framework, called LiDARTouch, to estimate dense depth maps from monocular images with the help of ``touches'' of LiDAR, i.e., without the need for dense ground-truth depth. In our setup, the minimal LiDAR input contributes on three different levels: as an additional model's input, in a self-supervised LiDAR reconstruction objective function, and to estimate changes of pose (a key component of self-supervised depth estimation architectures). Our LiDARTouch framework achieves new state of the art in self-supervised depth estimation on the KITTI dataset, thus supporting our choices of integrating the very sparse LiDAR signal with other visual features. Moreover, we show that the use of a few-beam LiDAR alleviates scale ambiguity and infinite-depth issues that camera-only methods suffer from. We also demonstrate that methods from the fully-supervised depth-completion literature can be adapted to a self-supervised regime with a minimal LiDAR signal.

翻译:以视觉为基础的深度估算是自主系统的一个关键特征,这种系统往往依赖于单一的相机或若干独立的相机。在这种单一的设置中,从一种或数种昂贵的激光雷达(例如64光束)或只摄像的方法中获得更多投入,例如64个光束,或只摄像的方法,这些方法都存在规模模糊性和无限深度问题。在本文中,我们提出一种新的选择,即通过将单镜相机与轻量级激光雷达(LiDAR)的透明度(例如,4个比目)相结合,进行密集的深度估算,这是当今汽车级大规模生产激光扫描的典型。根据最近自我监督的方法,我们引入了一个新的框架,称为激光雷达(LiDAR)的光束深度,用单镜图像来估计深度的深度图密度,而LiDAR的深度,不需要厚度深度。在我们的设置中,最小的激光雷达(LiDAR)的精确度输入有助于三个不同的层次:作为额外的模型输入,我们从自我监督的激光雷达(LiDAR)的高级信号(OIAR)的信号)的深度构建目标的深度测深处功能,从而显示我们自我的自我的深度的自我的深度的深度的深度的深度的深度的深度,从而显示我们的自我的自我的深度的深度的深度的深度的深度的深度,从而显示我们能够的自我的自我的深度的自我的自我的自我的自我的深度的深度的深度的深度的自我的深度的深度,从而显示的深度的深度的自我的自我的深度的深度的深度,从而显示我们的数据的深度的深度的自我的深度的自我的自我的自我的深度的深度,从而显示我们能够显示我们的数据结构结构的自我的自我的深度的自我的深度的深度的自我的深度的自我的自我的深度的深度的深度的自我的深度的自我的深度。