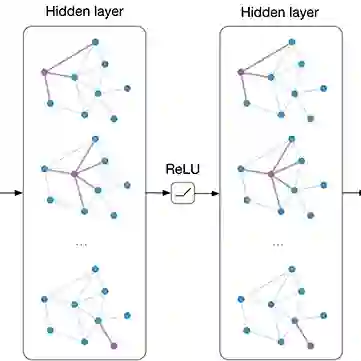

Graph convolutional network (GCN) has been successfully applied to many graph-based applications; however, training a large-scale GCN remains challenging. Current SGD-based algorithms suffer from either a high computational cost that exponentially grows with number of GCN layers, or a large space requirement for keeping the entire graph and the embedding of each node in memory. In this paper, we propose Cluster-GCN, a novel GCN algorithm that is suitable for SGD-based training by exploiting the graph clustering structure. Cluster-GCN works as the following: at each step, it samples a block of nodes that associate with a dense subgraph identified by a graph clustering algorithm, and restricts the neighborhood search within this subgraph. This simple but effective strategy leads to significantly improved memory and computational efficiency while being able to achieve comparable test accuracy with previous algorithms. To test the scalability of our algorithm, we create a new Amazon2M data with 2 million nodes and 61 million edges which is more than 5 times larger than the previous largest publicly available dataset (Reddit). For training a 3-layer GCN on this data, Cluster-GCN is faster than the previous state-of-the-art VR-GCN (1523 seconds vs 1961 seconds) and using much less memory (2.2GB vs 11.2GB). Furthermore, for training 4 layer GCN on this data, our algorithm can finish in around 36 minutes while all the existing GCN training algorithms fail to train due to the out-of-memory issue. Furthermore, Cluster-GCN allows us to train much deeper GCN without much time and memory overhead, which leads to improved prediction accuracy---using a 5-layer Cluster-GCN, we achieve state-of-the-art test F1 score 99.36 on the PPI dataset, while the previous best result was 98.71 by [16].

翻译:已经成功地应用到许多基于图形的应用程序;然而,培训大型 GCN 仍然具有挑战性。目前基于 SGD 的算法要么是高计算成本,随着GCN层数的增加而成倍增长,要么是保持整张图形和将每个节点嵌入记忆中所需的大量空间。在本文中,我们提议了一个新的GCN 算法,它适合通过利用图形组合结构进行 SGD 培训。 分组GCN 工作如下:每一步,它抽样一组节点,与由图形组合算法确定的密集子阵列相联,并限制本子图内的周边搜索。这一简单而有效的战略可以大大改善记忆和计算效率,同时能够与以前的算法取得可比的测试准确性。为了检验我们的算法的可缩放性,我们创建了一个新的Amazon2M 数据,其发行量为200万个节点和6100万分端,这比以往最大的公开数据集(Redd)要大5倍多。为了在3级GCN 的G- GG- GG- GG- Chem-xxxxxxx 上, 之前的训练可以比以往的升级更快。