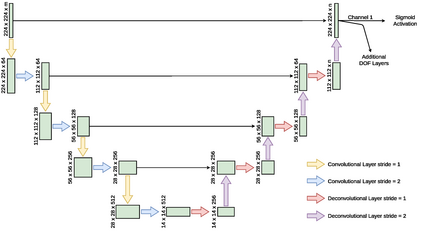

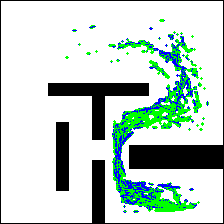

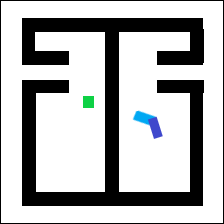

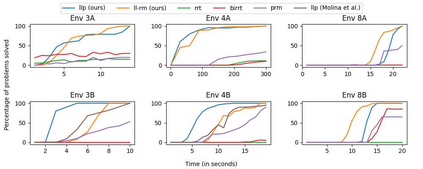

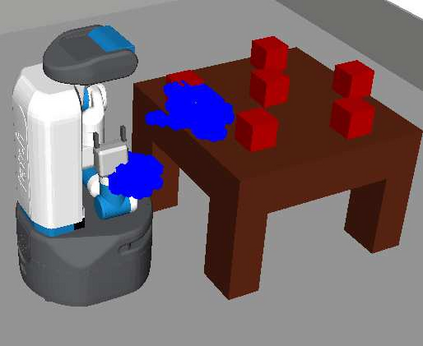

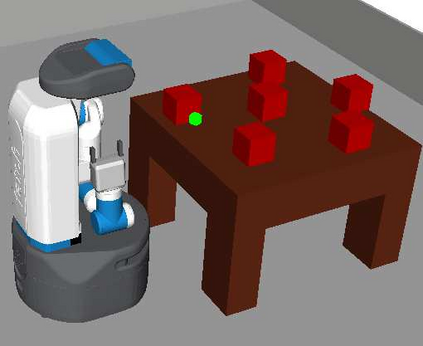

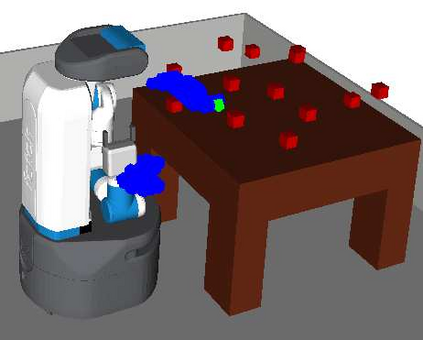

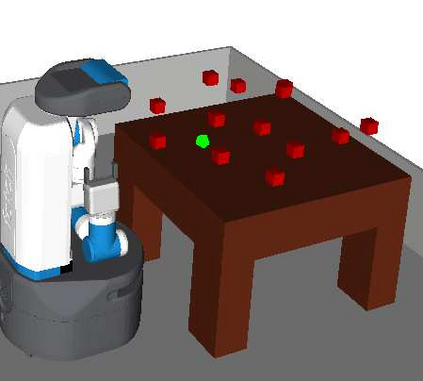

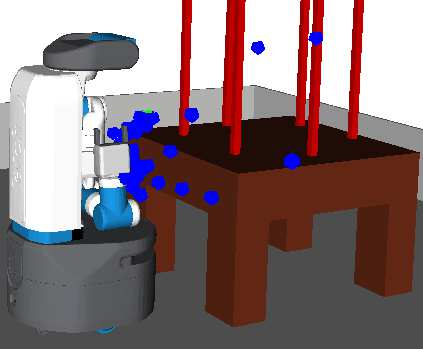

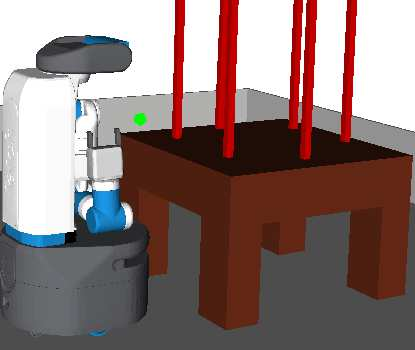

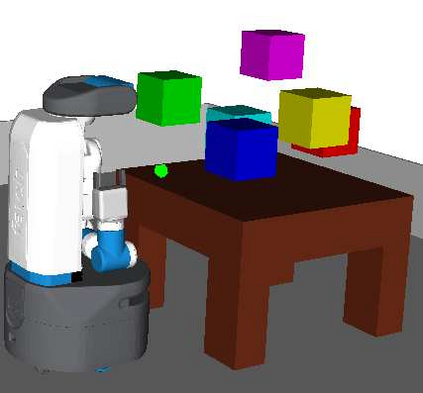

Robot motion planning involves computing a sequence of valid robot configurations that takes the robot from its initial state to a goal state. Solving motion planning problems optimally with analytical methods has been proven to be PSPACE-hard. Sampling-based approaches instead try to approximate the optimal solution efficiently. Sampling-based planners generally use uniform samplers to cover the entire state space. In this paper, we propose a deep-learning-based framework that identifies the robot configurations in the environment that are critical for solving a given motion planning problem. These states are used to bias the sampling distribution in order to reduce planning time. Our approach uses a single unified network whose domain-dependent parameters are generated from the environment and the robot. We evaluate our approach with the Learn and Link planner in three different settings. Results show significant improvements in motion planning times compared with current sampling-based motion planners.
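The abstract does not say exactly how the predicted critical states reshape the sampling distribution. A common way to do this is to mix the uniform sampler with samples drawn near the predicted states. The sketch below assumes that scheme. The mixing probability `p_critical`, the Gaussian spread `sigma`, and the function name `biased_sample` are hypothetical choices made for illustration. They are not taken from the paper, and the sketch does not show the integration with the Learn and Link planner.

```python
import random
from typing import Optional, Sequence, Tuple

Config = Tuple[float, ...]  # one robot configuration, e.g. joint angles


def biased_sample(
    critical_states: Sequence[Config],
    bounds: Sequence[Tuple[float, float]],
    p_critical: float = 0.5,   # hypothetical: chance of sampling near a critical state
    sigma: float = 0.05,       # hypothetical: spread as a fraction of each joint range
    rng: Optional[random.Random] = None,
) -> Config:
    """Draw one configuration from a uniform/critical-state mixture (illustrative only)."""
    rng = rng or random.Random()
    if critical_states and rng.random() < p_critical:
        # Perturb one predicted critical state with Gaussian noise,
        # then clamp each coordinate to its joint limits.
        center = rng.choice(critical_states)
        return tuple(
            min(max(rng.gauss(c, sigma * (hi - lo)), lo), hi)
            for c, (lo, hi) in zip(center, bounds)
        )
    # Otherwise fall back to the usual uniform sampler over the whole space.
    return tuple(rng.uniform(lo, hi) for lo, hi in bounds)


if __name__ == "__main__":
    rng = random.Random(0)
    bounds = [(-3.14, 3.14), (-3.14, 3.14)]  # a hypothetical 2-joint arm
    critical = [(0.5, -1.0)]                 # stand-in for a network prediction
    samples = [biased_sample(critical, bounds, p_critical=0.7, rng=rng) for _ in range(5)]
    print(samples)
```

Keeping a uniform component, with `p_critical < 1`, preserves coverage of the whole space. The planner can therefore still find a path if the network misses a critical region.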

翻译:机器人运动规划包括计算一系列有效的机器人配置,将机器人从最初状态转向目标状态。 优化使用分析方法解决运动规划问题被证明是PSPACE-Hard。 以抽样为基础的方法试图有效地接近最佳解决方案。 一般来说, 抽样规划者使用统一的取样器覆盖整个国家空间。 在本文中, 我们提议了一个基于深层次学习的框架, 用以确定环境中的机器人配置, 这对于解决特定运动规划问题非常重要。 这些国家用来偏向抽样分布, 以减少规划时间。 我们的方法是使用一个统一的网络, 并产生基于环境和机器人的基于域的网络参数。 我们用三种不同的环境来评估我们与学习和联系规划者的方法。 结果显示, 与目前基于取样的运动规划者相比, 运动规划时间有显著改善。