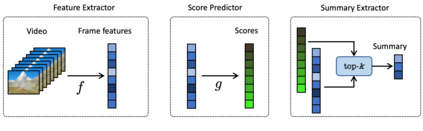

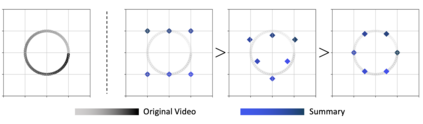

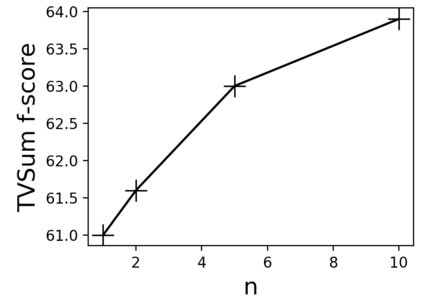

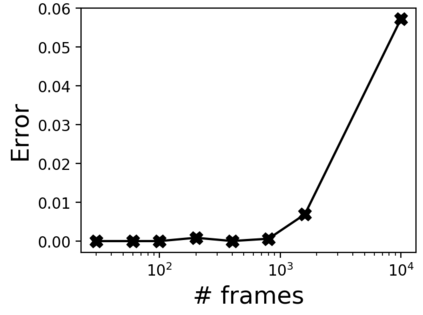

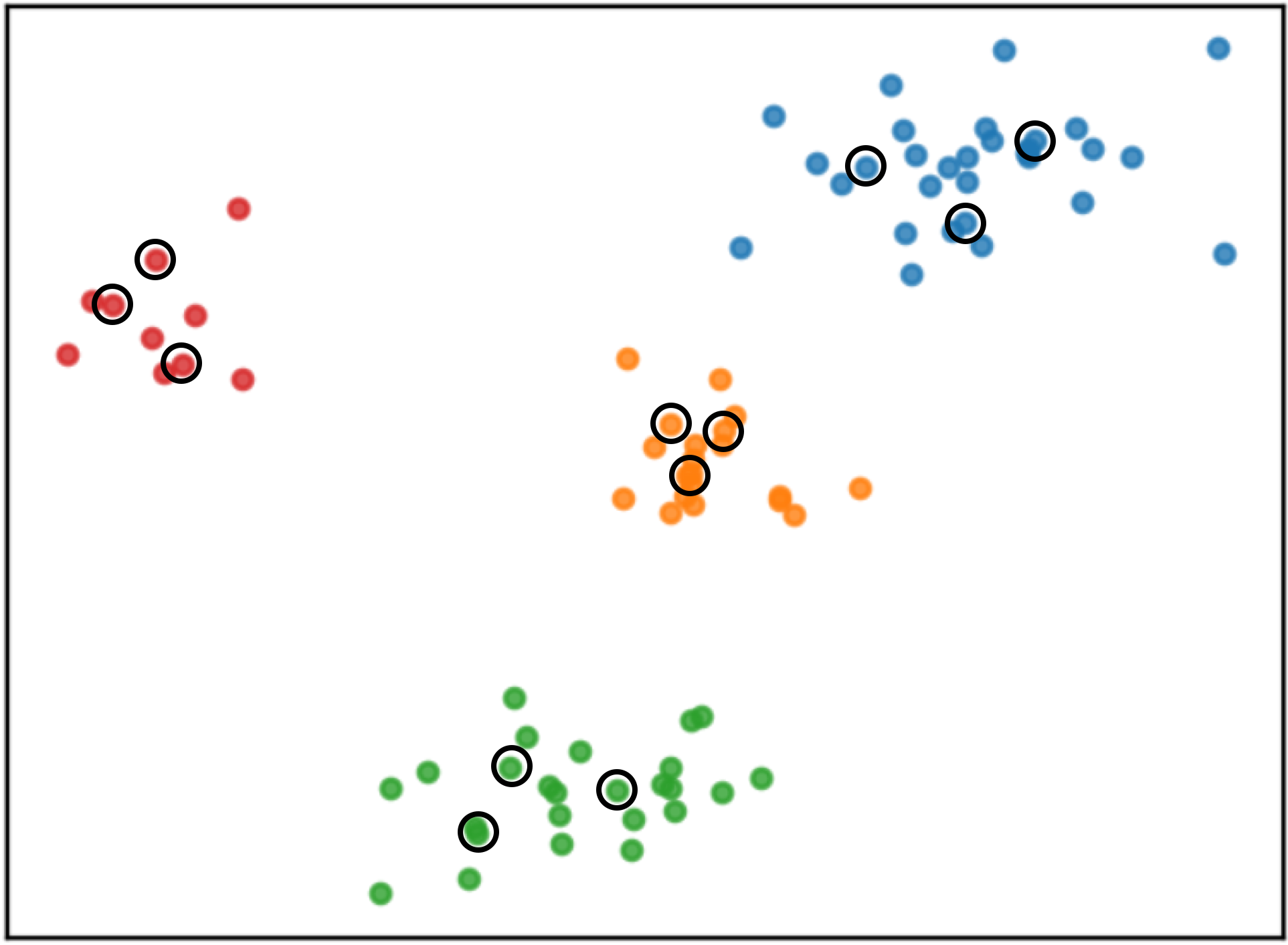

Video summarization aims to select the parts of a video that tell a story as close as possible to the original. Most existing video summarization approaches rely on hand-crafted labels. As the number of videos grows exponentially, there is an increasing need for methods that can learn meaningful summarizations without labeled annotations. In this paper, we aim to maximally exploit unsupervised video summarization while concentrating the supervision on a few personalized labels as an add-on. To do so, we formulate the key requirements for informative video summarization. Then, we propose contrastive learning as the answer to both questions. To further boost Contrastive video Summarization (CSUM), we propose to contrast top-k features instead of a mean video feature as employed by the existing method, which we implement with a differentiable top-k feature selector. Our experiments on several benchmarks demonstrate that our approach allows for meaningful and diverse summaries when no labeled data is provided.
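To make the idea of contrasting top-k features rather than a mean video feature more concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the CSUM implementation: the abstract does not specify the selector or the loss, so `soft_topk_weights` uses a generic successive-softmax relaxation of top-k, `info_nce` is a standard InfoNCE-style contrastive loss, and all function names, the temperature values, and the per-frame `scores` input are hypothetical.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array.
    z = np.exp(x - x.max())
    return z / z.sum()

def soft_topk_weights(scores, k, temperature=0.1, eps=1e-12):
    """Relaxed top-k selection (assumed stand-in for a differentiable selector).

    Runs k softmax rounds; after each round, the mass already selected is
    suppressed so the next round favours a different frame. The weights sum
    to k and approach a hard top-k indicator as the temperature shrinks.
    """
    alpha = np.asarray(scores, dtype=float).copy()
    weights = np.zeros_like(alpha)
    for _ in range(k):
        p = softmax(alpha / temperature)
        weights += p
        alpha += np.log(np.clip(1.0 - p, eps, None))
    return weights

def topk_pool(frame_feats, scores, k, temperature=0.1):
    # Weighted average of frame features, dominated by the k best-scoring frames.
    w = soft_topk_weights(scores, k, temperature)
    return (w[:, None] * frame_feats).sum(axis=0) / k

def info_nce(anchor, positive, negatives, temperature=0.1):
    # InfoNCE-style loss on cosine similarities: pull the positive towards
    # the anchor, push the negatives away.
    unit = lambda x: x / np.linalg.norm(x, axis=-1, keepdims=True)
    a, p, n = unit(anchor), unit(positive), unit(negatives)
    logits = np.concatenate(([a @ p], n @ a)) / temperature
    logits -= logits.max()
    return float(np.log(np.exp(logits).sum()) - logits[0])
```

In this sketch, a video-level feature from `topk_pool(frame_feats, scores, k)` would take the place of the plain mean `frame_feats.mean(axis=0)` as the input to `info_nce`. Every step is a smooth operation, so the same logic could be ported to an autodiff framework to train the scores end to end.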
